Review the details that are captured in the factsheet for each asset tracked in an AI use case. You can also export a report to share or archive the factsheet details.

What is captured in a factsheet?

From the moment you start tracking an asset in an AI use case, facts about the asset are collected in its factsheet. As the asset moves from one lifecycle phase to the next, the facts are added to the appropriate section.

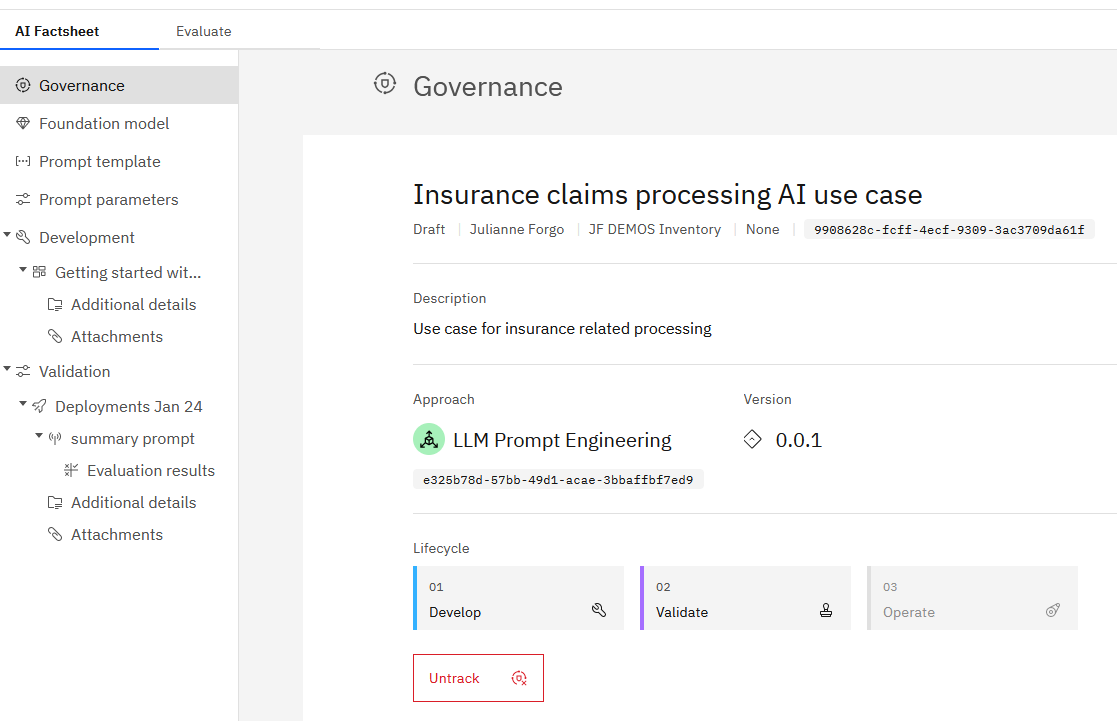

For example, a factsheet for a prompt template collects information for these categories:

| Category | Description |
|---|---|
| Governance | Basic governance details, including the use case name, version number, and approach information |
| Foundation model | Name and provider of the foundation model |
| Prompt template | Prompt template name, description, input, and variables |
| Prompt parameters | Options used to create the prompt template, such as the decoding method |
| Evaluation | Results of the most recent evaluation |
| Attachments | Attached files and supporting documents |

The following image shows the factsheet for a prompt template that is tracked in an AI use case.

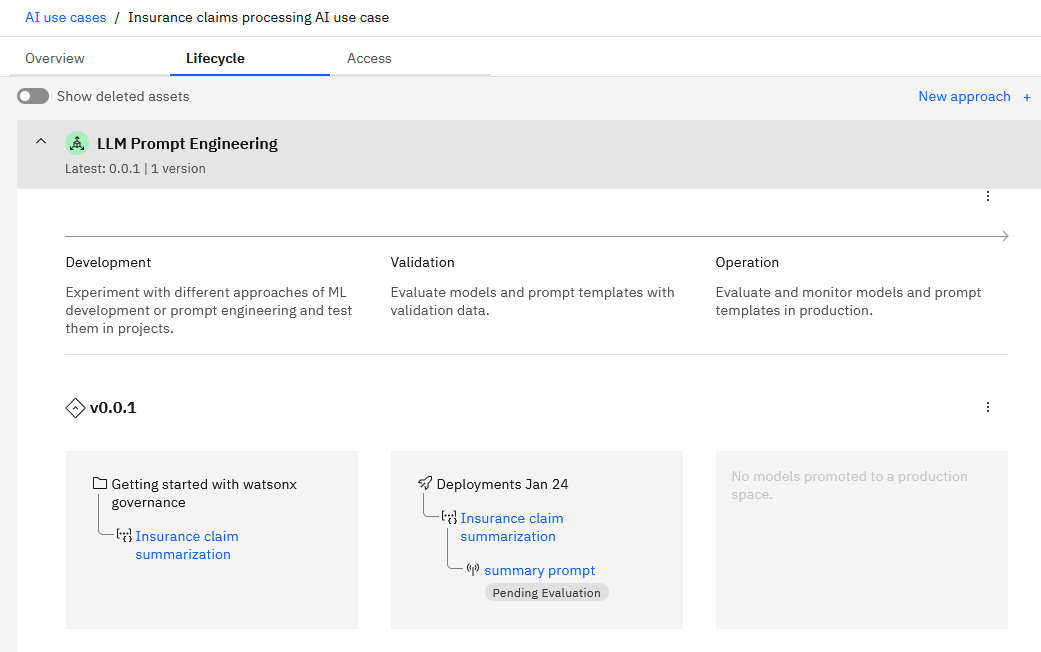

Tracking details

When tracking is enabled, the recording of facts is triggered by actions on the tracked asset. Similarly, changes in the AI lifecycle control where the asset displays in an AI use case, to show the progression from development to operation. For example, when an asset is saved in a project and tracking is enabled, the asset displays in the Develop phase. When an asset is evaluated, whether in a project or space, the use case is updated to show the asset and all evaluation data in the Validate phase. Deployments in production display in the Operate phase.

| Model location | Develop | Validate | Operate |
|---|---|---|---|
| Project | ✓ | | |
| Preproduction space | | ✓ | |
| Production space | | | ✓ |

| Prompt template location | Develop | Validate | Operate |
|---|---|---|---|
| Development project | ✓ | | |
| Validation project | | ✓ | |
| Space | | | ✓ |
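The tracking rules described above can be summarized as a simplified conceptual model: each lifecycle event moves the asset to a phase, and the use case shows the furthest phase reached. The following Python sketch is illustrative only. It is not how the product is implemented, and the event names (`saved_in_project`, `evaluated`, `deployed_to_production`) and the `current_phase` helper are hypothetical labels for the actions named in the text.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Lifecycle phases of an AI use case, ordered from development to operation."""
    DEVELOP = 1
    VALIDATE = 2
    OPERATE = 3


# Hypothetical event names, each mapped to the phase the documentation says it triggers.
EVENT_TO_PHASE = {
    "saved_in_project": Phase.DEVELOP,         # asset saved in a project with tracking enabled
    "evaluated": Phase.VALIDATE,               # evaluation run in a project or a space
    "deployed_to_production": Phase.OPERATE,   # deployment in a production space
}


def current_phase(events):
    """Return the furthest phase reached by a tracked asset, or None if no known event applies."""
    phases = [EVENT_TO_PHASE[e] for e in events if e in EVENT_TO_PHASE]
    # IntEnum members compare by value, so max() picks the latest phase in the lifecycle.
    return max(phases) if phases else None


if __name__ == "__main__":
    print(current_phase(["saved_in_project"]).name)
    print(current_phase(["saved_in_project", "evaluated"]).name)
```

Ordering the phases with `IntEnum` keeps the "progression from development to operation" explicit: the phase an asset displays in is simply the maximum over the events recorded so far.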

Viewing a factsheet for a prompt template

The following image shows the lifecycle information for the prompt template.

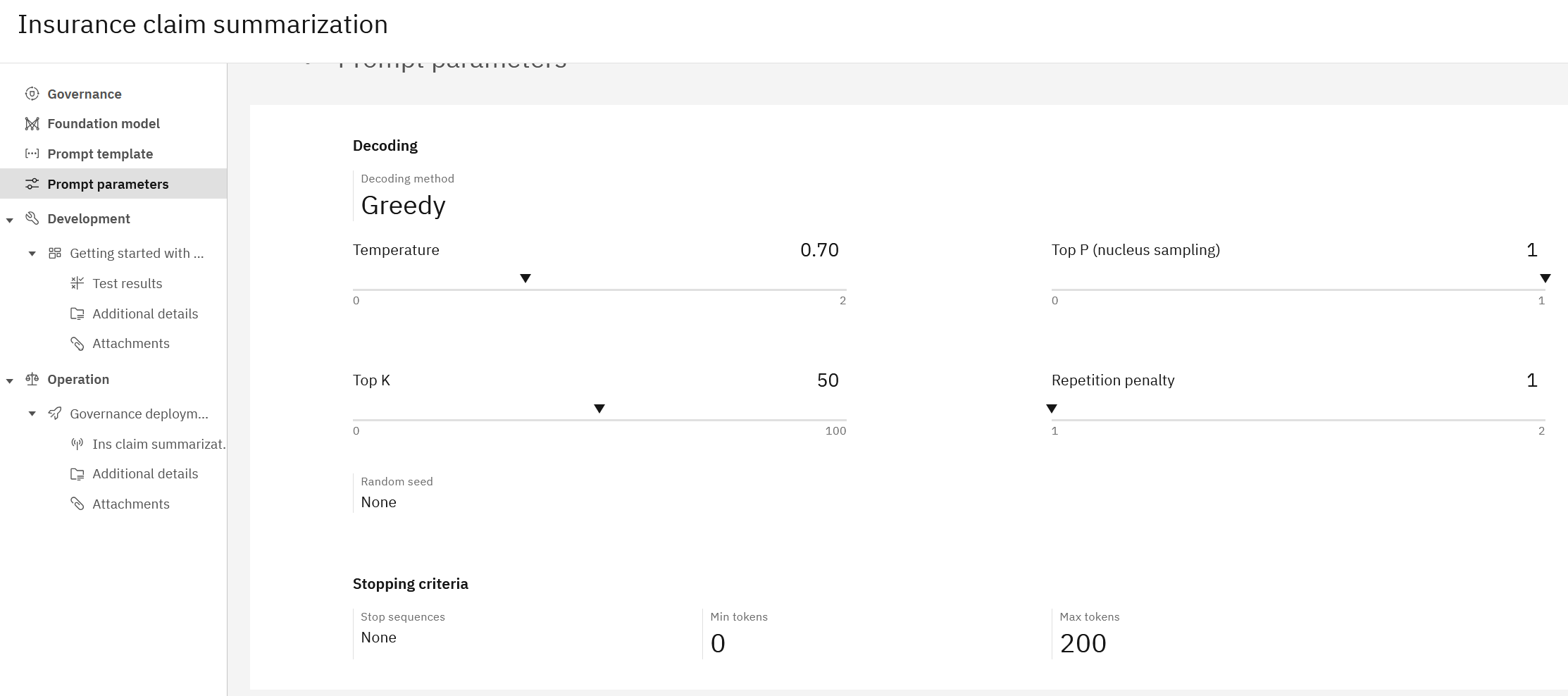

Click a category to view details about a particular aspect of the prompt template. For example, click Parameters to view the settings for the prompt template.

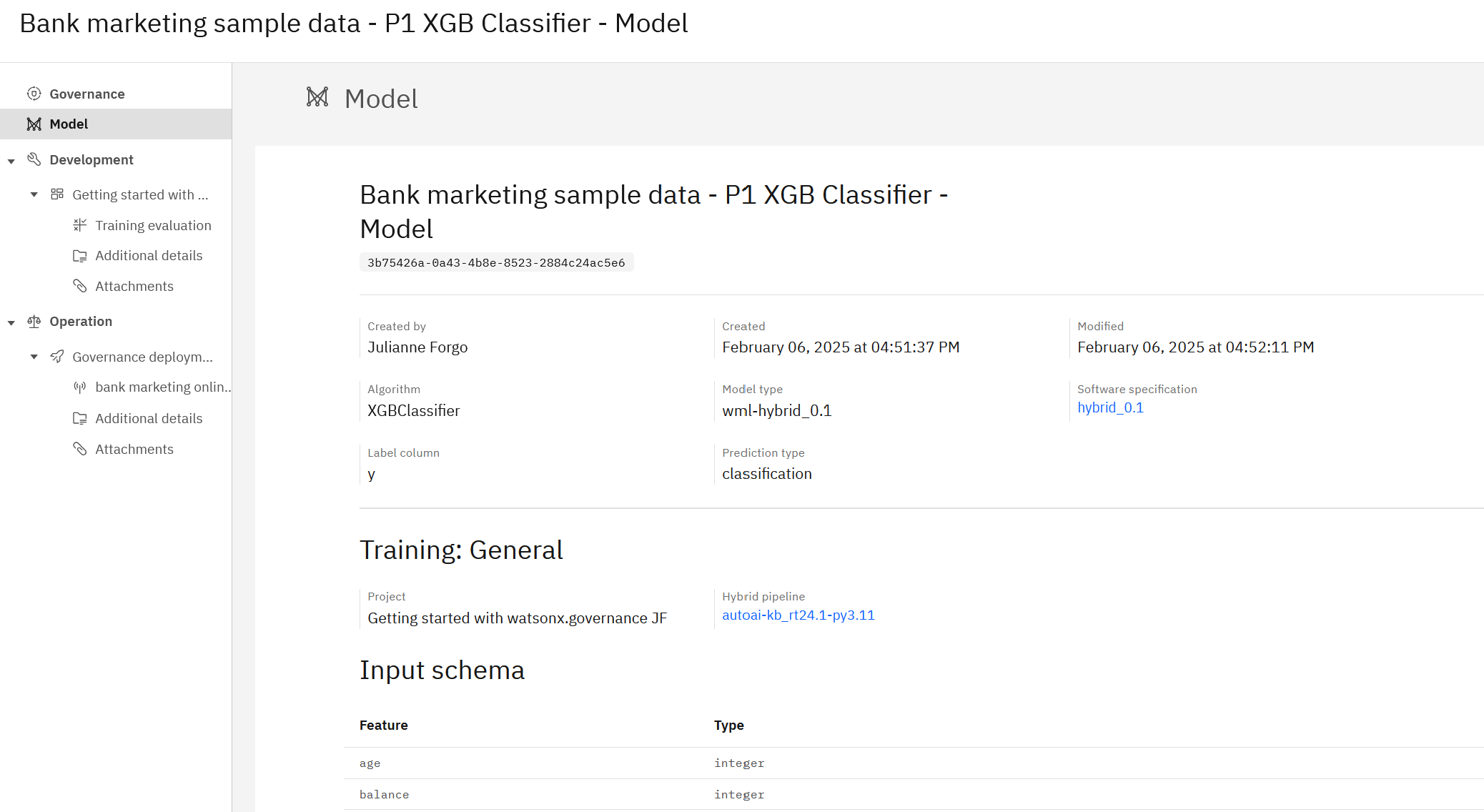

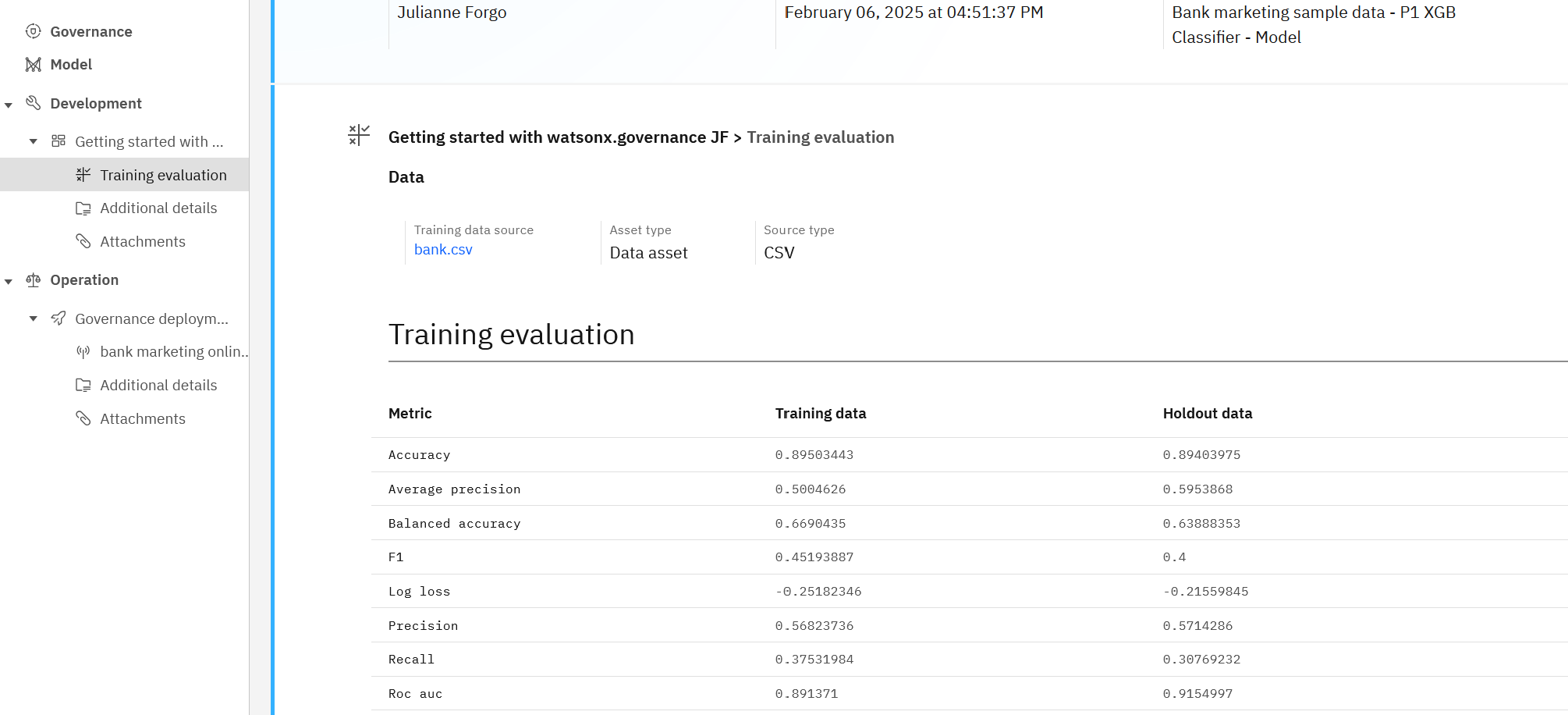

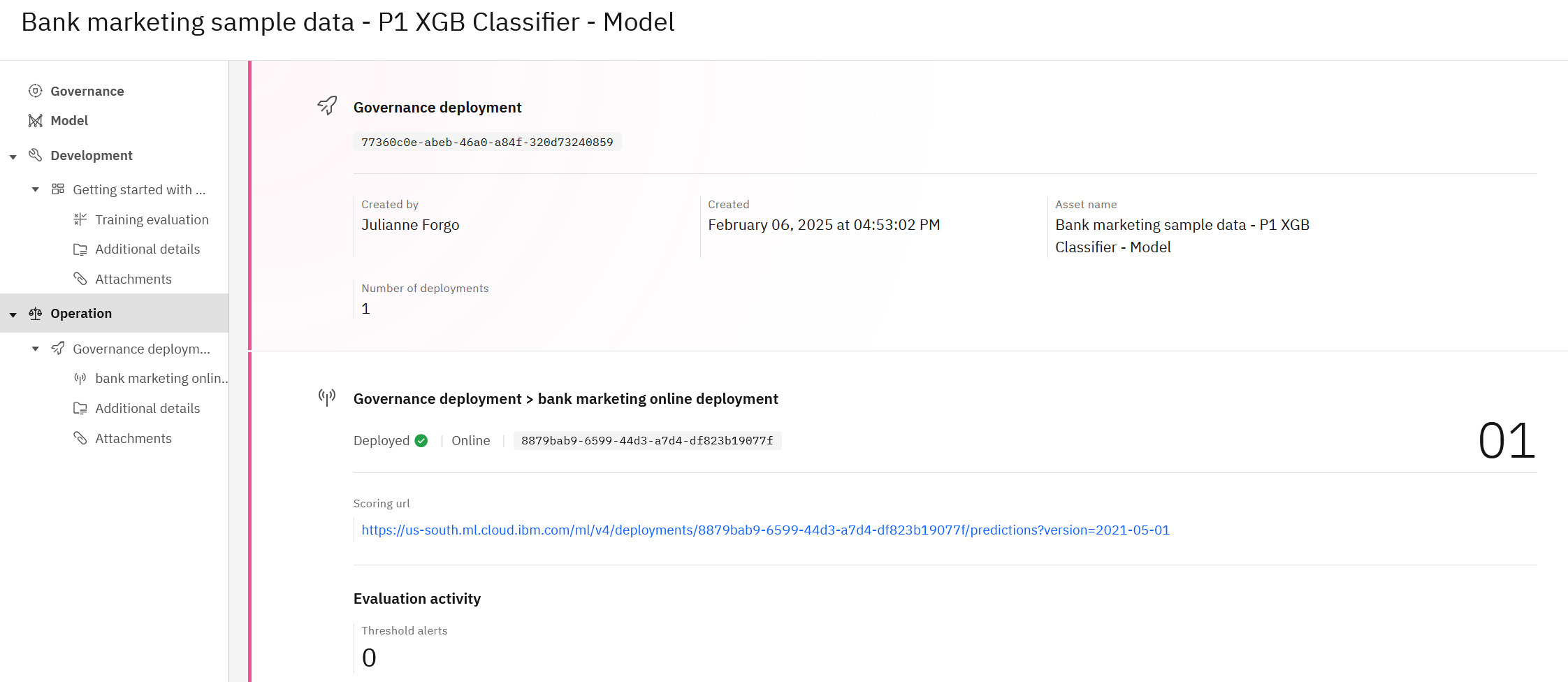

Viewing a factsheet for a machine learning model

The model factsheet displays all of the details for a machine learning model.

The details in the factsheet depend on the type of model. For example, the factsheet for a model trained by using AutoAI displays:

- General model details such as name, model ID, type of model, and software specification used. The input schema shows the details for the model structure.
- Training information including the associated project, training data source, and hybrid pipeline name.
- General deployment details, such as deployment name, deployment ID, and software specification used.
- Evaluation details for your model, including:
  - Payload logging and Feedback data information
  - Quality metrics after evaluating the deployment
  - Fairness metrics that test for bias in monitored groups
  - Drift and drift v2 details following an evaluation
  - Global explainability details
  - Metrics information from custom monitors
  - Model health details about scoring requests to online deployments, including payload size, records, scoring requests, throughput and latency, and users

If integration with IBM OpenPages is enabled, the following facts are shared with OpenPages:

- Payload logging and Feedback data information

- Quality metrics after evaluating the deployment

- Fairness metrics that test for bias in monitored groups

- Drift details following an evaluation

- Global explainability details

- Metrics information from custom monitors

- Model health details about scoring requests to online deployments, including payload size, records, scoring requests, throughput and latency, and users

Next steps

Click Export report to save a report of the factsheet.
