Follow these tips to resolve common problems you might encounter when working with watsonx.ai Runtime.

Troubleshooting watsonx.ai Runtime service instance

Troubleshooting AutoAI

- AutoAI inference notebook for a RAG experiment exceeds model limits

- Training an AutoAI experiment fails with service ID credentials

- Prediction request for AutoAI time-series model can time out with too many new observations

- Insufficient class members in training data for AutoAI experiment

- Unable to open assets from Cloud Pak for Data that require watsonx.ai

Troubleshooting deployments

- Batch deployments that use large data volumes as input might fail

- Deployments with constricted software specifications fail after an upgrade

- Creating a job for an SPSS Modeler flow in a deployment space fails

- Deploy-on-demand foundation models cannot be deployed to a deployment space

- Deploying a custom foundation model from a deployment space fails

Troubleshooting watsonx.ai Runtime service instance

Follow these tips to resolve common problems you might encounter when working with watsonx.ai Runtime service instance.

Inactive watsonx.ai Runtime instance

Symptoms

After you try to submit an inference request to a foundation model by clicking the Generate button in the Prompt Lab, the following error message is displayed:

'code': 'no_associated_service_instance_error',

'message': 'WML instance {instance_id} status is not active, current status: Inactive'

Possible causes

The association between your watsonx.ai project and the related watsonx.ai Runtime service instance was lost.

Possible solutions

Recreate or refresh the association between your watsonx.ai project and the related watsonx.ai Runtime service instance. To do so, complete the following steps:

- From the main menu, expand Projects, and then click View all projects.

- Click your watsonx.ai project.

- From the Manage tab, click Services & integrations.

- If the appropriate watsonx.ai Runtime service instance is listed, disassociate it temporarily: select the instance, click Remove, and then confirm the removal.

- Click Associate service.

- Choose the appropriate watsonx.ai Runtime service instance from the list, and then click Associate.

Troubleshooting AutoAI

Follow these tips to resolve common problems you might encounter when working with AutoAI.

Running an AutoAI time series experiment with anomaly prediction fails

The feature to predict anomalies in the results of a time series experiment is no longer supported. Trying to run an existing experiment results in errors for missing runtime libraries. For example, you might see this error:

The selected environment seems to be invalid: Could not retrieve environment. CAMS error: Missing or invalid asset id

This behavior is expected because the runtimes for anomaly prediction are no longer supported. There is no workaround for this problem.

AutoAI inference notebook for a RAG experiment exceeds model limits

When you run an inference notebook that was generated for an AutoAI RAG experiment, you might get this error:

MissingValue: No "model_limits" provided. Reason: Model <model-name> limits cannot be found in the model details.

The error indicates that the token limits for inferencing the foundation model that is used in the experiment are missing. To resolve the problem, find the default_inference_function function and replace the call to get_max_input_tokens with the maximum number of input tokens for the model. For example:

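# Inside default_inference_function:
model = ModelInference(api_client=client, **params["model"])

# Comment out the lookup that fails when "model_limits" is missing:
# model_max_input_tokens = get_max_input_tokens(model=model, params=params)

# Hard-code the model's maximum input tokens instead (4096 is an example value):
model_max_input_tokens = 4096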

You can find the max token value for the model in the table of supported foundation models available with watsonx.ai.
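If you would rather keep a lookup in the notebook than hard-code a single number, the fallback logic could look like the following minimal sketch. It assumes that the model details are available as a dictionary and that a token limit, when present, is stored under `model_limits["max_sequence_length"]`; that key layout is an assumption, not something the generated notebook guarantees. The names `resolve_max_input_tokens` and `DEFAULT_MAX_INPUT_TOKENS` are hypothetical.

```python
from collections.abc import Mapping
from typing import Any

# Hypothetical fallback: replace 4096 with the max input tokens listed for
# your model in the table of supported foundation models.
DEFAULT_MAX_INPUT_TOKENS = 4096


def resolve_max_input_tokens(
    model_details: Mapping[str, Any],
    fallback: int = DEFAULT_MAX_INPUT_TOKENS,
) -> int:
    """Return the token limit from the model details, or a fallback value.

    Assumption: when present, the limit is stored under
    model_details["model_limits"]["max_sequence_length"].
    """
    limits = model_details.get("model_limits")
    if isinstance(limits, Mapping):
        value = limits.get("max_sequence_length")
        if isinstance(value, int):
            return value
    # No usable "model_limits" entry (the situation behind the MissingValue
    # error), so use the hard-coded value instead.
    return fallback
```

With this approach, a model whose details do include limits keeps its reported value, and only models with missing limits fall back to the hard-coded number.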

Training an AutoAI experiment fails with service ID credentials

If you train an AutoAI experiment by using the API key for a service ID, training might fail with this error:

User specified in query parameters does not match user from token.

One way to resolve this issue is to run the experiment with your user credentials. If you want to run the experiment with credentials for the service, follow these steps to update the roles and policies for the service ID.

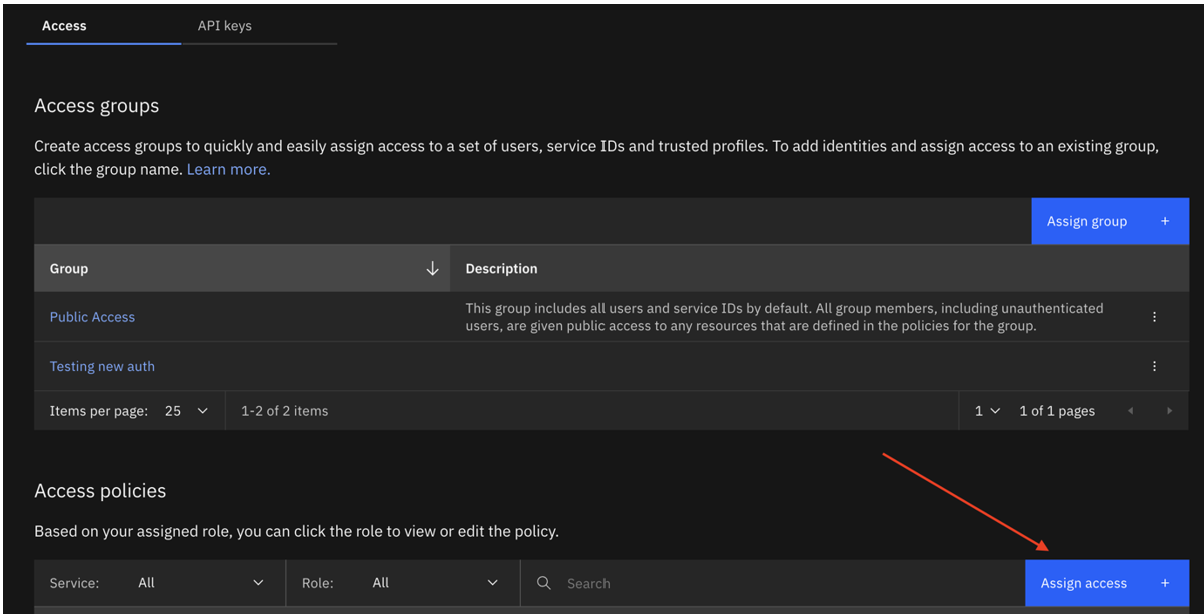

- Open your serviceID on IBM Cloud.

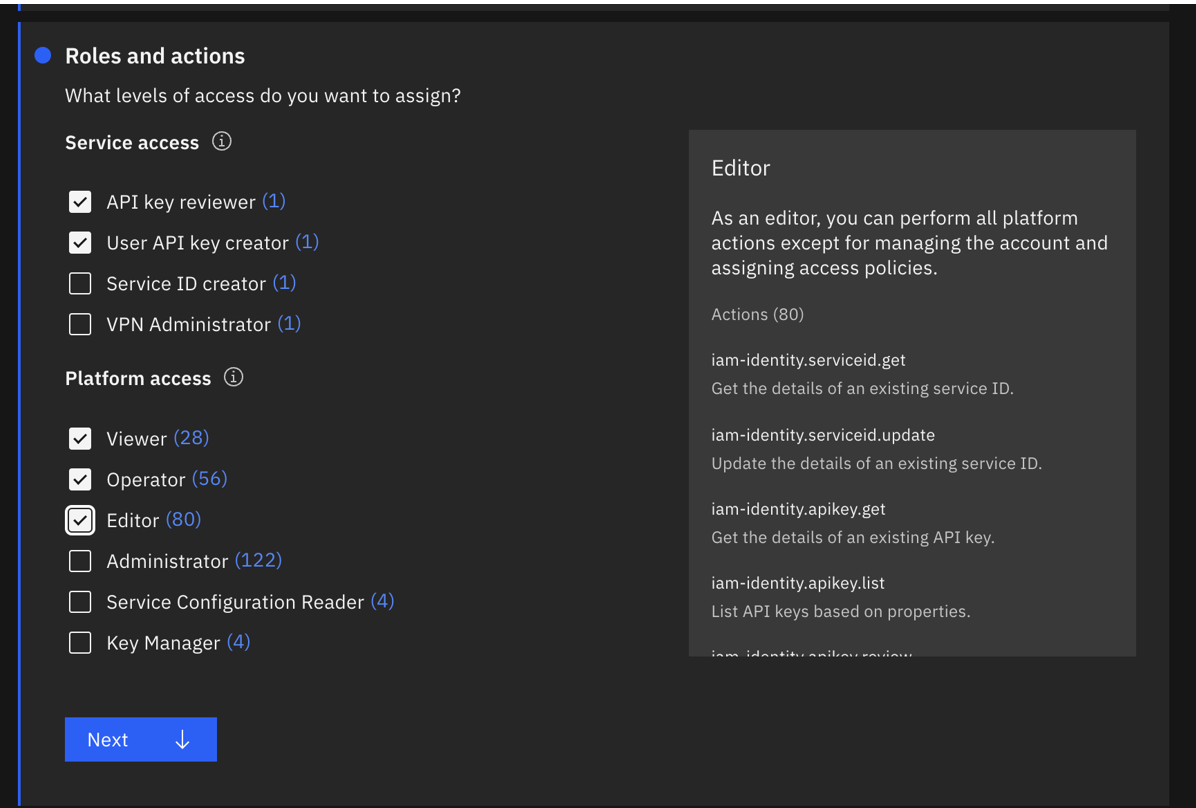

- Create a new serviceID or update the existing ID with the following access policy:

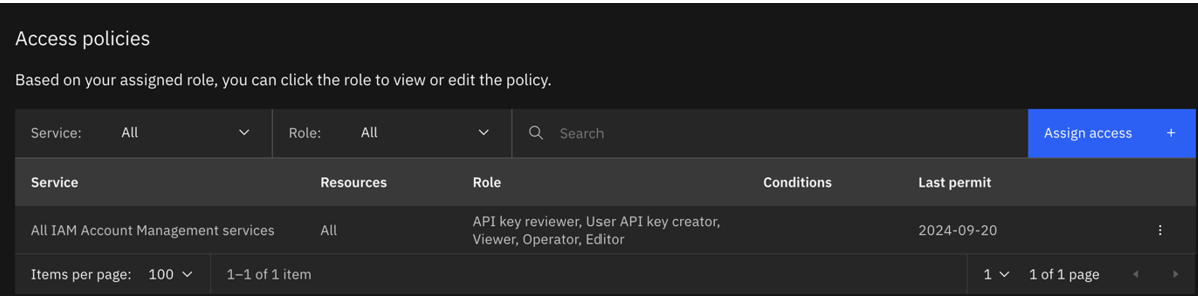

- All IAM Account Management services with the roles API key reviewer,User API key creator, Viewer, Operator, and Editor. Ideally it is best if they create a new apikey for this ServiceId.

- All IAM Account Management services with the roles API key reviewer,User API key creator, Viewer, Operator, and Editor. Ideally it is best if they create a new apikey for this ServiceId.

- The updated policy will look as follows:

- Run the training again with the credentials for the updated serviceID.

Prediction request for AutoAI time-series model can time out with too many new observations

A prediction request can time out for a deployed AutoAI time-series model if there are too many new observations passed. To resolve the problem, do one of the following:

- Reduce the number of new observations.

- Extend the training data used for the experiment by adding new observations. Then, re-run the AutoAI time-series experiment with the updated training data.
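To reduce the number of new observations, you could keep only the most recent rows when you build the prediction request, as in this minimal sketch. The payload layout (an `input_data` entry with the ID `observations`, plus `fields` and `values`) is modeled on the scoring payload for AutoAI time-series deployments and is an assumption; verify it against the API reference for your deployment. The function name and the `max_rows` limit are hypothetical, and the rows are assumed to be in chronological order.

```python
from collections.abc import Sequence
from typing import Any


def build_observations_payload(
    fields: Sequence[str],
    rows: Sequence[Sequence[Any]],
    max_rows: int,
) -> dict[str, Any]:
    """Build a scoring payload that keeps only the most recent observations."""
    if max_rows <= 0:
        raise ValueError("max_rows must be positive")
    # Rows are assumed to be in chronological order, so the last rows are the newest.
    recent = [list(row) for row in rows[-max_rows:]]
    return {
        "input_data": [
            {"id": "observations", "fields": list(fields), "values": recent}
        ]
    }
```

Tune `max_rows` down until the request completes within the timeout; if you need the older observations, add them to the training data and re-run the experiment instead.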

Insufficient class members in training data for AutoAI experiment

Training data for an AutoAI experiment must have at least 4 members for each class. If your training data has an insufficient number of members in a class, you will encounter this error:

ERROR: ingesting data Message id: AC10011E. Message: Each class must have at least 4 members. The following classes have too few members: ['T'].

To resolve the problem, update the training data to remove the class or add more members.
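Before you start the experiment, you can check the label column for classes with fewer than 4 members and, if appropriate, remove them. The following is a minimal sketch that works on plain Python lists; the function names are hypothetical, and in practice you would apply the same logic to the label column of your training data.

```python
from collections import Counter
from collections.abc import Hashable, Sequence
from typing import Any

# Minimum number of members per class, taken from the AC10011E error message.
MIN_CLASS_MEMBERS = 4


def find_small_classes(
    labels: Sequence[Hashable], minimum: int = MIN_CLASS_MEMBERS
) -> list:
    """Return the classes that have fewer than `minimum` members."""
    counts = Counter(labels)
    return sorted((label for label, n in counts.items() if n < minimum), key=str)


def drop_small_classes(
    rows: Sequence[Any],
    labels: Sequence[Hashable],
    minimum: int = MIN_CLASS_MEMBERS,
) -> tuple[list, list]:
    """Remove rows whose class has too few members; returns (rows, labels)."""
    small = set(find_small_classes(labels, minimum))
    kept_rows = [row for row, label in zip(rows, labels) if label not in small]
    kept_labels = [label for label in labels if label not in small]
    return kept_rows, kept_labels
```

For example, with five rows labeled F and two labeled T, `find_small_classes` reports `['T']`, which matches the class named in the error message. Dropping a class changes what the model can predict, so adding more members is often the better fix.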

Unable to open assets from Cloud Pak for Data that require watsonx.ai

If you are working in the Cloud Pak for Data context, you cannot open assets that require a different product context, such as watsonx.ai. For example, if you create an AutoAI experiment for a RAG pattern by using watsonx.ai, you cannot open that asset when you are in the Cloud Pak for Data context. For AutoAI experiments, you can view the training type in the asset list. You can open experiments with the type Machine learning, but not with the type Retrieval-augmented generation.

Troubleshooting deployments

Follow these tips to resolve common problems you might encounter when working with watsonx.ai Runtime deployments.

Batch deployments that use large data volumes as input might fail

If a batch job scores large volumes of input data, the job might fail because of internal timeout settings. A symptom of this problem might be an error message similar to the following example:

Incorrect input data: Flight returned internal error, with message: CDICO9999E: Internal error occurred: Snowflake sQL logged error: JDBC driver internal error: Timeout waiting for the download of #chunk49(Total chunks: 186) retry=0.

If the timeout occurs when you score your batch deployment, configure the query-level timeout for your data source so that it can accommodate long-running jobs.

Query-level timeout information for data sources is as follows:

| Data source | Query-level time limit | Default time limit | How to change the default time limit |
|---|---|---|---|
| Apache Cassandra | Yes | 10 seconds | Set the read_timeout_in_ms and write_timeout_in_ms parameters in the Apache Cassandra configuration file or in the Apache Cassandra connection URL to change the default time limit. |
| Cloud Object Storage | No | N/A | N/A |
| Db2 | Yes | N/A | Set the QueryTimeout parameter to specify the amount of time (in seconds) that a client waits for a query execution to complete before the client attempts to cancel the execution and return control to the application. |
| Hive via Execution Engine for Hadoop | Yes | 60 minutes (3600 seconds) | Set the hive.session.query.timeout property in the connection URL to change the default time limit. |
| Microsoft SQL Server | Yes | 30 seconds | Set the QUERY_TIMEOUT server configuration option to change the default time limit. |
| MongoDB | Yes | 30 seconds | Set the maxTimeMS parameter in the query options to change the default time limit. |
| MySQL | Yes | 0 seconds (no default time limit) | Set the timeout property in the connection URL or in the JDBC driver properties to specify a time limit for your query. |
| Oracle | Yes | 30 seconds | Set the QUERY_TIMEOUT parameter in the Oracle JDBC driver to specify the maximum amount of time a query can run before it is automatically cancelled. |
| PostgreSQL | No | N/A | Set the queryTimeout property to specify the maximum amount of time that a query can run. The default value of the queryTimeout property is 0. |
| Snowflake | Yes | 6 hours | Set the queryTimeout parameter to change the default time limit. |

To prevent your batch deployments from failing, you can also partition your data set or reduce its size.
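If your input is a CSV file, partitioning can be as simple as splitting it into smaller files and scoring each one in its own batch job. The following is a minimal sketch under that assumption; the function names and the `part-0000.csv` naming scheme are hypothetical, and for database sources you would typically partition in the query instead.

```python
import csv
from pathlib import Path


def _write_part(out_dir: Path, index: int, header: list[str], rows: list[list[str]]) -> Path:
    """Write one partition, repeating the header row so each file stands alone."""
    path = out_dir / f"part-{index:04d}.csv"
    with path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return path


def partition_csv(source: Path, out_dir: Path, rows_per_part: int) -> list[Path]:
    """Split a CSV file into files of at most `rows_per_part` data rows each."""
    if rows_per_part <= 0:
        raise ValueError("rows_per_part must be positive")
    out_dir.mkdir(parents=True, exist_ok=True)
    parts: list[Path] = []
    with source.open(newline="") as f:
        reader = csv.reader(f)
        header = next(reader, None)
        if header is None:
            return parts  # empty input file: nothing to partition
        chunk: list[list[str]] = []
        for row in reader:
            chunk.append(row)
            if len(chunk) == rows_per_part:
                parts.append(_write_part(out_dir, len(parts), header, chunk))
                chunk = []
        if chunk:
            parts.append(_write_part(out_dir, len(parts), header, chunk))
    return parts
```

Streaming the rows keeps memory use flat regardless of the input size; pick `rows_per_part` so that each job finishes well within the data source timeout.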

Security for file uploads

Files that you upload through the watsonx.ai Studio or watsonx.ai Runtime UI are not validated or scanned for potentially malicious content. To help ensure the security of your content, run security software, such as an antivirus application, on all files before you upload them.

Deployments with constricted software specifications fail after an upgrade

If you upgrade to a more recent version of IBM Cloud Pak for Data and deploy an R Shiny application asset that was created by using constricted software specifications in FIPS mode, your deployment fails.

For example, deployments that use shiny-r3.6 and shiny-r4.2 software specifications fail after you upgrade from IBM Cloud Pak for Data version 4.7.0 to 4.8.4 or later. You might receive the error message Error 502 - Bad Gateway.

To prevent your deployment from failing, update the constricted specification for your deployed asset to use the latest software specification. For more information, see Managing outdated software specifications or frameworks. You can also delete your application deployment if you no longer need it.

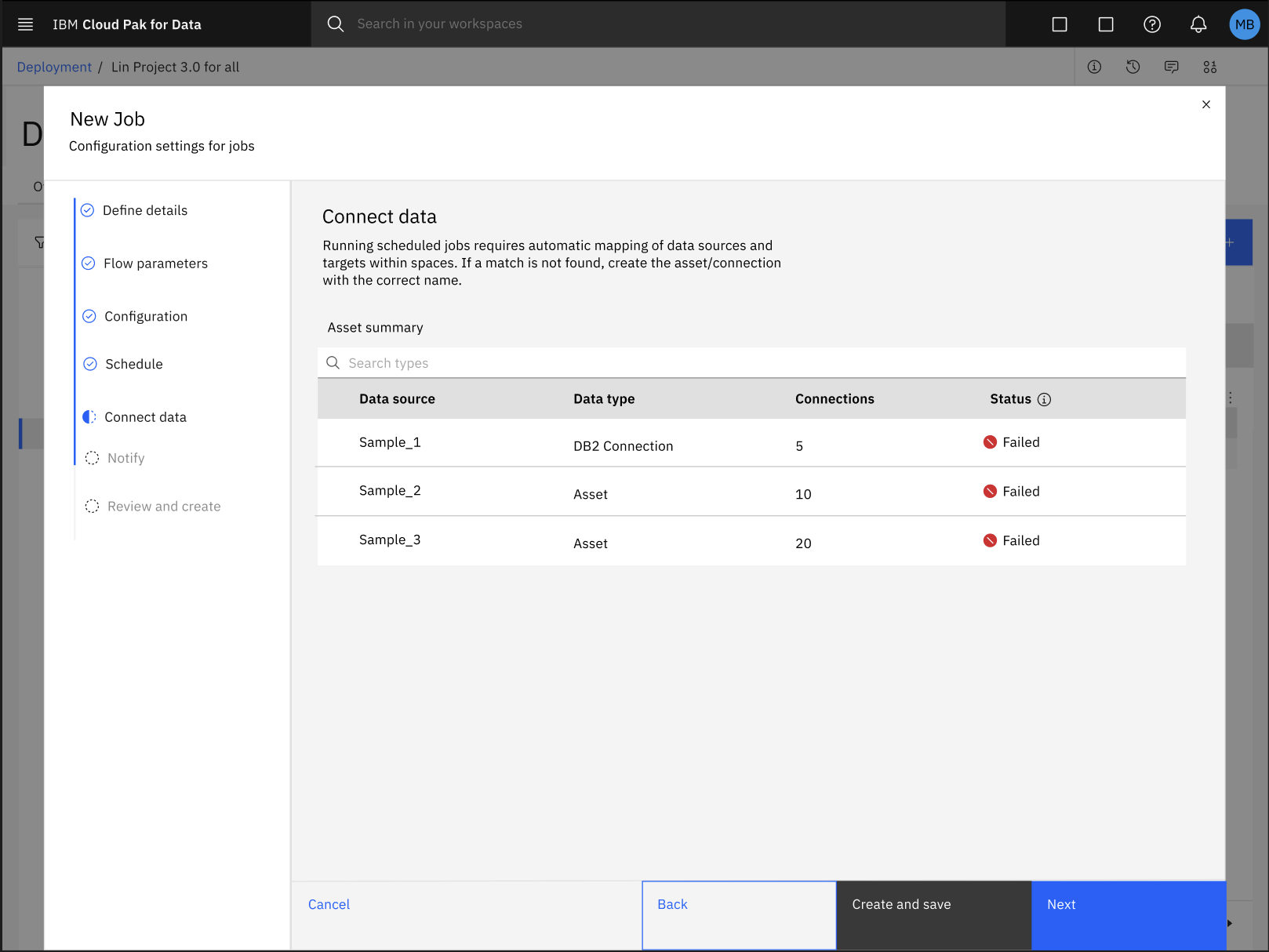

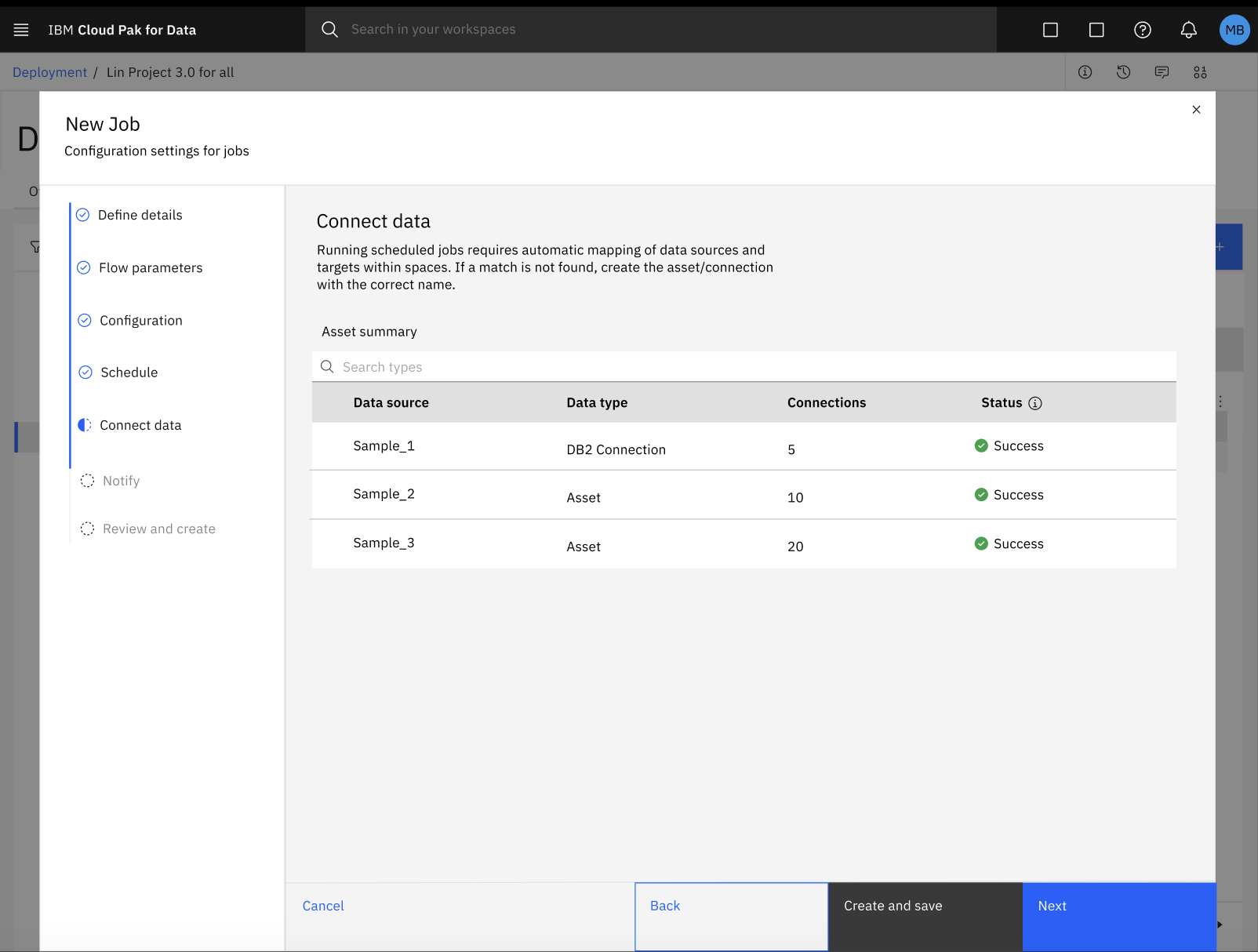

Creating a job for an SPSS Modeler flow in a deployment space fails

When you configure a batch job for your SPSS Modeler flow in a deployment space, the automatic mapping of data assets to their respective connections might fail.

To fix the error with the automatic mapping of data assets and connections, follow these steps:

- Click Create to save your progress and exit from the New job configuration dialog box.

- In your deployment space, click the Jobs tab and select your SPSS Modeler flow job to review the details of your job.

- In the job details page, click the Edit icon to manually update the mapping of your data assets and connections.

- After you update the mapping of data assets and connections, you can resume configuring the settings for your job in the New job dialog box. For more information, see Creating deployment jobs for SPSS Modeler flows.

Deploying a custom foundation model from a deployment space fails

When you create a deployment for a custom foundation model from your deployment space, your deployment might fail for several reasons. Follow these tips to resolve common problems that you might encounter when you deploy your custom foundation models from a deployment space.

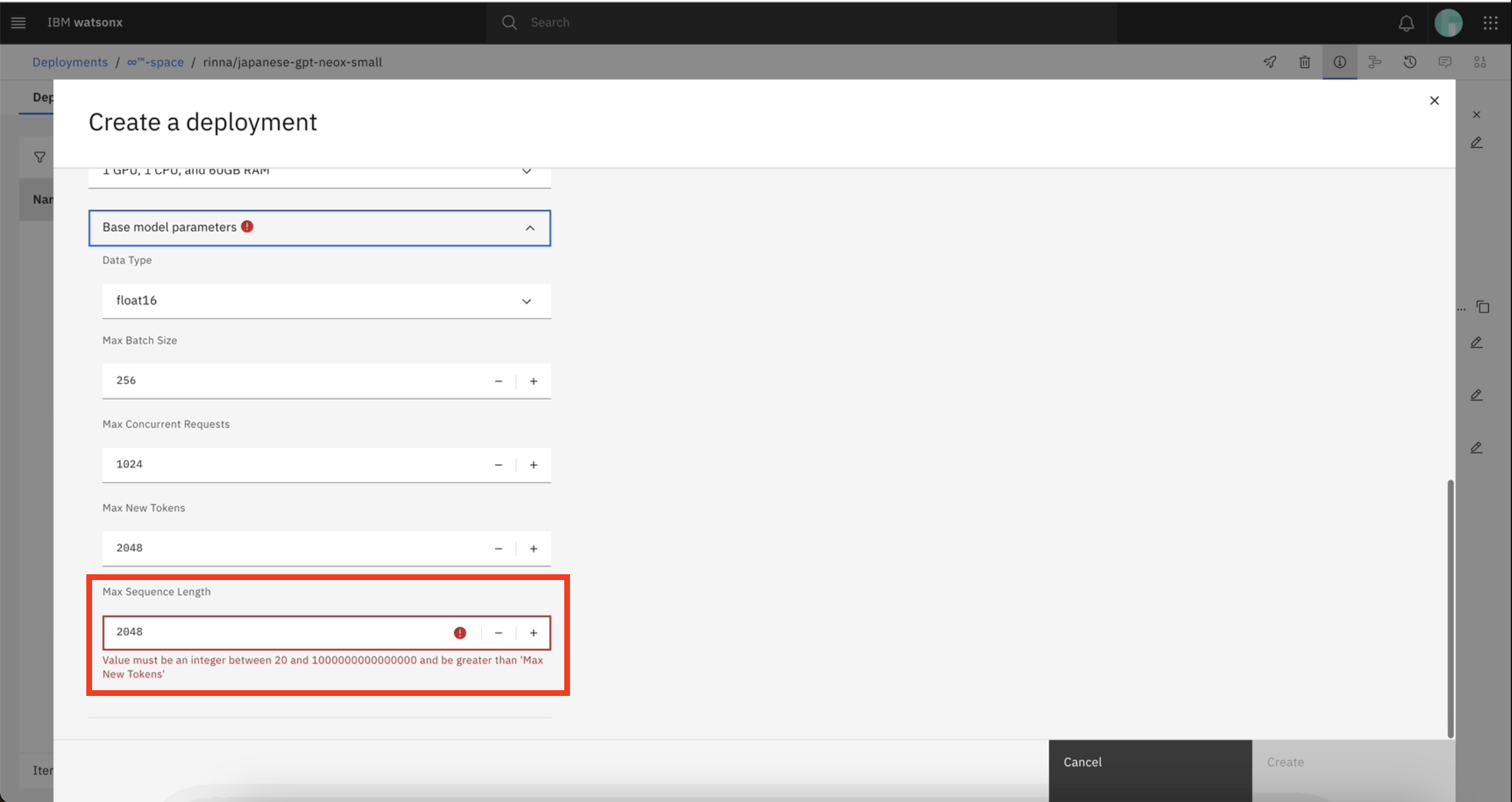

Case 1: Parameter value is out of range

When you create a deployment for a custom foundation model from your deployment space, you must make sure that your base model parameter values are within the specified range. For more information, see Properties and parameters for custom foundation models. If you enter a value that is beyond the specified range, you might encounter an error.

For example, the value of the max_new_tokens parameter must be less than the value of max_sequence_length. When you update the base model parameter values, if you enter a value for max_new_tokens that is greater than or equal to the value of max_sequence_length (2048), you might encounter an error.

The following image shows an example error message: Value must be an integer between 20 and 1000000000000000 and be greater than 'Max New Tokens'.

If the default values for your model parameters result in an error, contact your administrator to modify the model's registry in the watsonxaiifm CR.
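Before you update the parameters, you could check the two rules from this case locally, as in the following minimal sketch. The bounds are copied from the example error message and treated as inclusive, which is an assumption; the ranges that apply to your model are listed in Properties and parameters for custom foundation models. The function and constant names are hypothetical.

```python
# Bounds taken from the example error message (inclusive range assumed);
# check the documented ranges for your own model.
MIN_SEQUENCE_LENGTH = 20
MAX_SEQUENCE_LENGTH = 1_000_000_000_000_000


def check_token_parameters(max_sequence_length: int, max_new_tokens: int) -> list[str]:
    """Return a list of problems; an empty list means the values look valid."""
    problems: list[str] = []
    if not MIN_SEQUENCE_LENGTH <= max_sequence_length <= MAX_SEQUENCE_LENGTH:
        problems.append(
            f"max_sequence_length must be between {MIN_SEQUENCE_LENGTH} "
            f"and {MAX_SEQUENCE_LENGTH}"
        )
    if max_new_tokens >= max_sequence_length:
        problems.append("max_new_tokens must be less than max_sequence_length")
    return problems
```

For example, `check_token_parameters(2048, 2048)` reports the max_new_tokens problem, while `check_token_parameters(2048, 1024)` returns an empty list.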

Case 2: Unsupported data type

You must make sure that you select a data type that is supported by your custom foundation model. When you update the base model parameter values, if you update the data type for your deployed model with an unsupported data type, your deployment might fail.

For example, the LLaMA-Pro-8B-Instruct-GPTQ model supports the float16 data type only. If you deploy the LLaMA-Pro-8B-Instruct-GPTQ model with float16 Enum, then update the Enum parameter from float16 to bfloat16, your deployment fails.

If the data type that you selected for your custom foundation model results in an error, you can override the data type for the custom foundation model during deployment creation or contact your administrator to modify the model's registry in the watsonxaiifm CR.
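As a rough pre-check before you change the data type, you could compare your selection with the `torch_dtype` value in the model's config.json, as in this minimal sketch. Treating `torch_dtype` as the supported data type is an assumption: it records the type that the weights were saved in, and the authoritative list of supported types is the model's registry entry. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional


def declared_dtype(config_path: Path) -> Optional[str]:
    """Return the torch_dtype value from a Hugging Face config.json, if present."""
    config = json.loads(config_path.read_text())
    value = config.get("torch_dtype")
    return value if isinstance(value, str) else None


def dtype_matches(config_path: Path, selected_dtype: str) -> Optional[bool]:
    """Compare the data type you plan to deploy with the one config.json declares.

    Returns None when config.json does not declare a data type, because the
    check cannot decide in that case.
    """
    declared = declared_dtype(config_path)
    if declared is None:
        return None
    return declared == selected_dtype
```

For a model like the one in the example, whose config declares float16, selecting bfloat16 would return False and flag the change before you redeploy.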

Case 3: Parameter value is too large

If you enter a very large value for the max_sequence_length or max_new_tokens parameter, you might encounter an error. For example, if you set the value of max_sequence_length to 1000000000000000, you encounter the following error message:

Failed to deploy the custom foundation model. The operation failed due to 'max_batch_weight (19596417433) not large enough for (prefill) max_sequence_length (1000000000000000)'. Retry the operation. Contact IBM support if the problem persists.

Make sure that the value you enter for each parameter is less than the value that is defined in the model configuration file (config.json).
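To compare a requested value with the model configuration before you deploy, you could read the limit from config.json, as in this minimal sketch. The source does not say which key holds the limit; the sketch assumes `max_position_embeddings`, which many (but not all) Hugging Face configs use, and lets you pass a different key. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional

# Assumption: the model's maximum context length is stored under this key.
CONTEXT_LENGTH_KEY = "max_position_embeddings"


def config_context_length(config_path: Path, key: str = CONTEXT_LENGTH_KEY) -> Optional[int]:
    """Return the integer limit stored under `key` in config.json, if any."""
    config = json.loads(config_path.read_text())
    value = config.get(key)
    return value if isinstance(value, int) else None


def within_config_limit(
    config_path: Path, requested: int, key: str = CONTEXT_LENGTH_KEY
) -> Optional[bool]:
    """Return True if `requested` is below the config limit, None if unknown."""
    limit = config_context_length(config_path, key)
    if limit is None:
        return None  # key not present; check your model's documentation instead
    return requested < limit
```

With a config that sets the limit to 4096, a requested max_sequence_length of 1000000000000000 returns False, which corresponds to the failure shown in the error message.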

Case 4: model.safetensors file is saved with unsupported libraries

If the model.safetensors file for your custom foundation model uses an unsupported data format in the metadata header, your deployment might fail.

For example, if you import the OccamRazor/mpt-7b-storywriter-4bit-128g custom foundation model from Hugging Face to your deployment space and create an online deployment, your deployment might fail. This is because the model.safetensors file for the OccamRazor/mpt-7b-storywriter-4bit-128g model is saved with save_pretrained, which is an unsupported library. You might receive the following error message:

The operation failed due to 'NoneType' object has no attribute 'get'.

You must make sure that your custom foundation model is saved with the supported transformers library.
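To see what the metadata header of a model.safetensors file contains before you deploy, you can read it directly: a safetensors file starts with an 8-byte little-endian integer that gives the header length, followed by a JSON header, and optional string metadata sits under the `__metadata__` key. The following minimal sketch uses only the standard library. Treating a missing metadata block, or a "format" entry other than "pt", as a warning sign is an assumption, although a missing block is consistent with the 'NoneType' object has no attribute 'get' error. The function names are hypothetical.

```python
import json
import struct
from pathlib import Path
from typing import Optional


def read_safetensors_metadata(path: Path) -> Optional[dict[str, str]]:
    """Return the __metadata__ entry of a .safetensors header, or None if absent."""
    with path.open("rb") as f:
        # First 8 bytes: unsigned 64-bit little-endian length of the JSON header.
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    return header.get("__metadata__")


def has_pt_format_metadata(path: Path) -> bool:
    """Assumption: a supported file records "format": "pt" in its metadata."""
    metadata = read_safetensors_metadata(path)
    return isinstance(metadata, dict) and metadata.get("format") == "pt"
```

Only the header is read, so the check is fast even for multi-gigabyte weight files.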

Case 5: Deployment of a Llama 3.1 model fails

If your Llama 3.1 model deployment fails, try editing the contents of your model's config.json file:

- Find the eos_token_id entry.

- Change the value of the entry from an array to an integer.

Then try redeploying your model.
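If you prefer to script the config.json edit, the following minimal sketch replaces a list-valued eos_token_id with a single integer. The source does not say which ID to keep; the sketch keeps the first entry by default, which is an assumption, so confirm the right value for your model and keep a backup of the original file. The function name is hypothetical.

```python
import json
from pathlib import Path


def make_eos_token_id_scalar(config_path: Path, index: int = 0) -> bool:
    """Replace a list-valued eos_token_id in config.json with one integer.

    Returns True if the file was changed. Keeping entry `index` (the first
    entry by default) is an assumption; pick the ID that suits your model.
    """
    config = json.loads(config_path.read_text())
    value = config.get("eos_token_id")
    if not isinstance(value, list):
        return False  # already a single value (or absent); nothing to do
    config["eos_token_id"] = int(value[index])
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return True
```

Running the function a second time returns False, so it is safe to call before every redeployment attempt.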

Deploy-on-demand foundation models cannot be deployed to a deployment space

You can deploy only one instance of a deploy-on-demand foundation model in a deployment space. If the selected model is already deployed, the deployment space in which the model is deployed is disabled.

If you need more resources for your model, you can add more copies of your deployed model asset by scaling the deployment.