The model risk management solution includes model evaluation, which measures outcomes from AI models across their lifecycle and performs model validations to help your organization comply with standards and regulations.

Perform analysis with Watson OpenScale

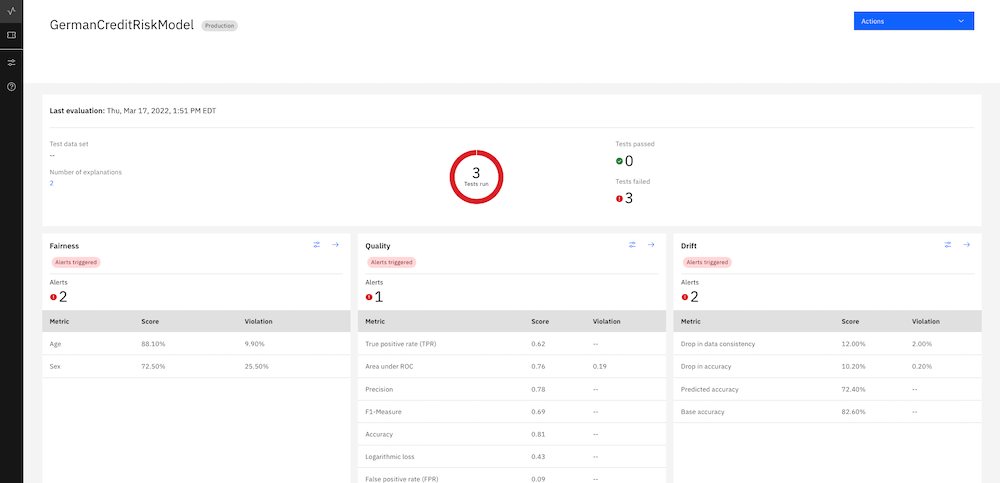

After you set up and activate the model risk management features, you can see and compare the sample evaluations. You can download the model summary report that includes all of the quality measures, fairness measures, and drift magnitude.

- From the Insights dashboard, click the model deployment tile.

- From the Actions menu, click one of the following analysis options:

  - All evaluations: Lists all of the in-progress and completed evaluations.

  - Compare: Compares any of the models, especially versions of the same model, to find the best performance.

  - Download report PDF: Generates the model summary report, which lists all of the metrics and explains why they were scored the way they were.

Deploy a new model to production in Watson OpenScale

Push the best model to production. Create a production record by importing from a pre-production model.

- Review the status of the model deployment.

- Return to the sample notebook and run the cells to send the model to production.

- You can now view the production model deployment tile. In a regular production environment, it initially appears empty until enough data is gathered and time elapses for metric calculation to be triggered. The notebook adds data and runs the monitors so that you can see the results immediately.

Compare models

When you view the details of a model evaluation, you can compare models with a matrix chart that highlights key metrics. Use this feature to determine which version of a model is the best one to send to production or which model might need work.

From the Actions menu, select Compare to generate a chart that compares the scores for the metrics that you use to monitor your models.
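The idea behind the comparison chart can be illustrated offline with a minimal sketch. It is not the Watson OpenScale implementation: the model names, metric names, scores, and the rule that a lower drift magnitude is better are all assumptions made for illustration.

```python
# Hypothetical scores for two versions of the same model (not real results).
evaluations = {
    "model_v1": {"quality": 0.81, "fairness": 0.92, "drift": 0.07},
    "model_v2": {"quality": 0.86, "fairness": 0.95, "drift": 0.04},
}

# Assumption: a lower drift magnitude is better; higher is better otherwise.
LOWER_IS_BETTER = {"drift"}


def best_per_metric(evaluations):
    """Map each metric name to the model with the most favorable score."""
    metrics = sorted({m for scores in evaluations.values() for m in scores})
    best = {}
    for metric in metrics:
        candidates = {
            name: scores[metric]
            for name, scores in evaluations.items()
            if metric in scores
        }
        pick = min if metric in LOWER_IS_BETTER else max
        best[metric] = pick(candidates, key=candidates.get)
    return best


def comparison_rows(evaluations):
    """Build one text row per metric, marking the best score with '*'."""
    best = best_per_metric(evaluations)
    rows = []
    for metric, winner in best.items():
        cells = []
        for name, scores in evaluations.items():
            if metric not in scores:
                continue
            mark = "*" if name == winner else " "
            cells.append(f"{name}={scores[metric]:.2f}{mark}")
        rows.append(f"{metric:<10} " + "  ".join(cells))
    return rows


for row in comparison_rows(evaluations):
    print(row)
```

A version that wins on most metrics is a natural candidate for production, but which metrics matter most depends on your own monitoring thresholds.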

Evaluate now

From the Actions menu, select Evaluate now to evaluate test data. In the pre-production environment, you can import test data with one of the following methods:

- Upload a CSV file that contains labeled test data

- Connect to a CSV file that contains labeled test data in Cloud Object Storage or Db2

When you use either of these import methods, you can control whether Watson OpenScale scores the test data.

If you want to score your data, import the labeled test data with feature and label columns. The test data is scored and the prediction and probability values are stored in the feedback table as the _original_prediction and _original_probability columns.

If you want to import test data that is already scored, select the Test data includes model output checkbox in the Import test data panel. You need to import the test data with feature and label columns along with the scored output. Test data is not rescored. The prediction and probability values are stored in the feedback table as the _original_prediction and _original_probability columns.
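Before you upload a file, it can help to confirm that the CSV header contains the columns that the chosen import mode needs. The following is a minimal local check, not part of Watson OpenScale. The column names (`age`, `income`, `risk`, `prediction`, `probability`) are hypothetical and should be replaced with the feature, label, and output names of your own model.

```python
import csv
import io


def check_test_data_header(header, feature_columns, label_column,
                           output_columns=()):
    """Return the required column names that are missing from a CSV header."""
    required = list(feature_columns) + [label_column] + list(output_columns)
    return [name for name in required if name not in header]


# Hypothetical sample data; an in-memory file stands in for the real CSV.
sample_csv = io.StringIO(
    "age,income,risk,prediction,probability\n"
    "34,52000,No Risk,No Risk,0.91\n"
)
header = next(csv.reader(sample_csv))

# Mode 1: the service scores the data, so only features and label are needed.
missing_unscored = check_test_data_header(header, ["age", "income"], "risk")

# Mode 2: the data is already scored, so the output columns are needed too.
missing_scored = check_test_data_header(
    header, ["age", "income"], "risk", ["prediction", "probability"]
)

print("Missing for unscored import:", missing_unscored)
print("Missing for pre-scored import:", missing_scored)
```

An empty list means that the header has every column the mode requires. The check looks only at column names, not at the values in each row.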

Notes:

The test data that you upload can also include record_id/transaction_id and record_timestamp columns that are added to the payload logging and feedback tables when the Test data includes model output option is selected.

Make sure that the subscription is fully enabled by setting the right schemas before you perform evaluations.
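If you want the optional record_id and record_timestamp columns from the first note in your pre-scored test data, you can add them before upload. The sketch below is one way to do that with the Python standard library. The UUID identifiers and the ISO 8601 UTC timestamp format are assumptions, not documented requirements, and the other column names are hypothetical.

```python
import csv
import io
import uuid
from datetime import datetime, timezone


def add_record_columns(rows, id_column="record_id",
                       ts_column="record_timestamp"):
    """Return copies of the rows with ID and timestamp columns added if absent."""
    # Assumption: an ISO 8601 UTC timestamp is an acceptable format.
    now = datetime.now(timezone.utc).isoformat()
    enriched = []
    for row in rows:
        new_row = dict(row)  # copy so the caller's rows are not modified
        new_row.setdefault(id_column, str(uuid.uuid4()))
        new_row.setdefault(ts_column, now)
        enriched.append(new_row)
    return enriched


# Hypothetical pre-scored test record.
rows = [
    {"age": "34", "income": "52000", "risk": "No Risk",
     "prediction": "No Risk", "probability": "0.91"},
]
enriched = add_record_columns(rows)

# Write the enriched rows to CSV text that could be saved and uploaded.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(enriched[0].keys()))
writer.writeheader()
writer.writerows(enriched)
csv_text = buffer.getvalue()
print(csv_text)
```

Rows that already carry their own identifiers or timestamps are left unchanged, because `setdefault` fills in only the missing values.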

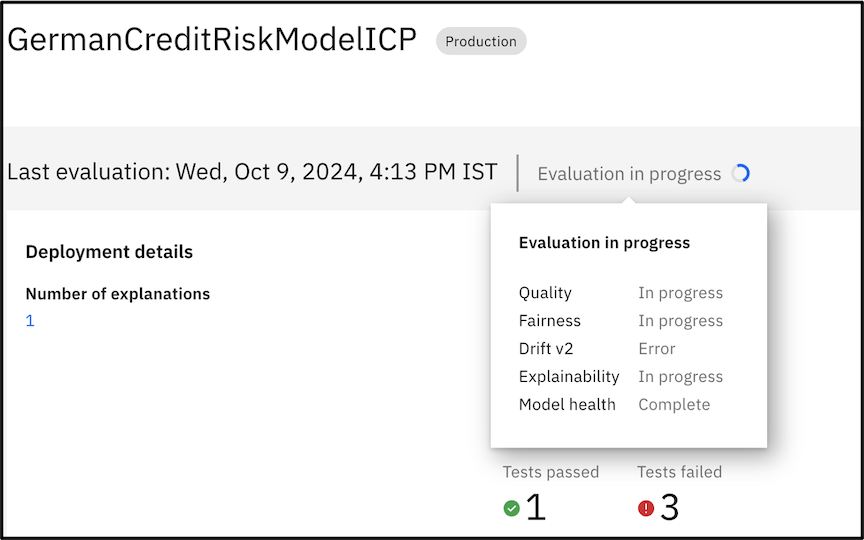

When you run evaluations, a status indicator shows their progress and whether they completed successfully.

Copy configuration from a pre-production subscription to a production subscription

To save time, you can copy configuration and model metadata from a pre-production subscription and add the data to a production subscription. The model must be an identical match to the source model, but deployed to the production space.
