Model health monitor evaluations

You can configure model health monitor evaluations to help you understand your model's behavior and performance. You can use model health metrics to determine how efficiently your model deployment processes your transactions.

When model health evaluations are enabled, a model health data set is created in the data mart. The model health data set stores details about your scoring requests that are used to calculate model health metrics.
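To show how stored per-request details can turn into summary metrics, here is a minimal sketch in plain Python. It does not use the data mart or any IBM client. The `ScoringRequestDetail` fields (`record_count`, `payload_size_bytes`, `response_time_ms`) and the `summarize` helper are hypothetical illustrations, not the actual model health data set schema. The sketch only shows the general idea of aggregating logged scoring requests.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScoringRequestDetail:
    """Hypothetical row in a model health data set: one logged scoring request."""
    request_id: str
    record_count: int          # number of records (rows) scored in this request
    payload_size_bytes: int    # size of the request payload
    response_time_ms: float    # time the deployment took to respond


def summarize(rows):
    """Aggregate per-request details into model-health-style summary values (hypothetical names)."""
    if not rows:
        return {"scoring_requests": 0, "records": 0,
                "avg_payload_size_bytes": 0.0, "avg_response_time_ms": 0.0}
    return {
        "scoring_requests": len(rows),
        "records": sum(r.record_count for r in rows),
        "avg_payload_size_bytes": mean(r.payload_size_bytes for r in rows),
        "avg_response_time_ms": mean(r.response_time_ms for r in rows),
    }


data_set = [
    ScoringRequestDetail("req-1", record_count=10, payload_size_bytes=2048, response_time_ms=120.0),
    ScoringRequestDetail("req-2", record_count=5, payload_size_bytes=1024, response_time_ms=80.0),
]
print(summarize(data_set))
```

Each stored row describes one scoring request, so counts and averages can be recomputed for any time range without scoring the model again.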

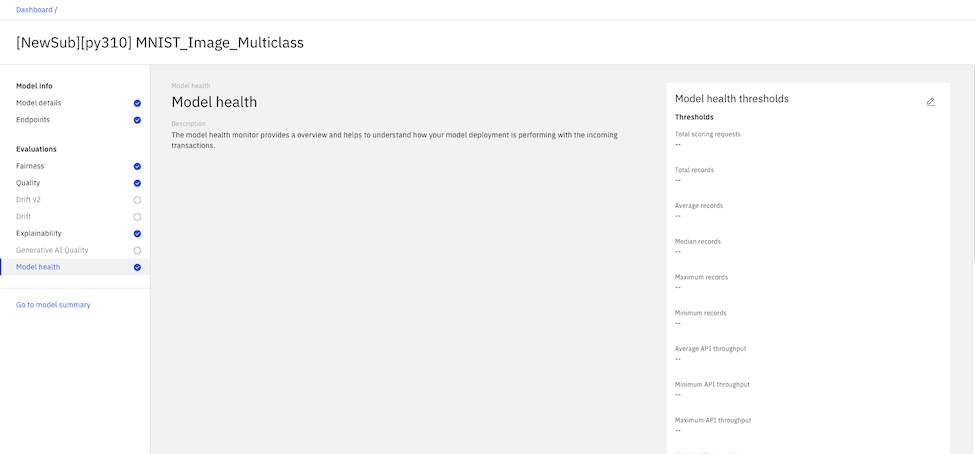

To configure model health monitor evaluations, you can set threshold values for each metric as shown in the following example:

Model health evaluations are not supported for pre-production deployments.
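The per-metric threshold configuration described above can be illustrated with a minimal sketch of how a computed metric value might be checked against a configured threshold. It assumes each threshold is either an upper bound (alert when the value exceeds it) or a lower bound (alert when the value falls below it). The `Threshold` class, `evaluate` function, and metric names are all hypothetical. They are not the product's configuration API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Hypothetical threshold: flag a violation when a value crosses `limit`."""
    limit: float
    kind: str  # "upper": violated if value > limit; "lower": violated if value < limit

    def violated(self, value: float) -> bool:
        if self.kind == "upper":
            return value > self.limit
        if self.kind == "lower":
            return value < self.limit
        raise ValueError(f"unknown threshold kind: {self.kind!r}")


def evaluate(metrics: dict, thresholds: dict) -> dict:
    """Return {metric_name: violated?} for every metric that has a configured threshold."""
    return {
        name: thresholds[name].violated(value)
        for name, value in metrics.items()
        if name in thresholds
    }


# Hypothetical metric names and limits, for illustration only.
thresholds = {
    "avg_response_time_ms": Threshold(limit=500.0, kind="upper"),
    "throughput_records_per_s": Threshold(limit=10.0, kind="lower"),
}
metrics = {"avg_response_time_ms": 620.0, "throughput_records_per_s": 25.0, "records": 1500}
print(evaluate(metrics, thresholds))
# {'avg_response_time_ms': True, 'throughput_records_per_s': False}
```

Metrics without a configured threshold (here `records`) are simply not evaluated, which mirrors setting thresholds only for the metrics you care about.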

Performance metrics

Use performance monitoring to track the velocity of data records that your deployment processes. You enable performance monitoring when you select the deployment to be tracked and monitored.

Performance metrics are calculated based on the following information:

- scoring payload data

For proper monitoring, log every scoring request. Payload data logging is automated for IBM watsonx.ai Runtime engines. For other machine learning engines, you can provide the payload data by using either the Python client or the REST API. Performance monitoring does not create any additional scoring requests on the monitored deployment.
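To make "velocity" concrete, here is a minimal sketch of computing a throughput value from payload data that has already been logged. The document does not give the exact formula, so this assumes velocity means scored records per second within a fixed time window. The log format (a list of `(timestamp, record_count)` tuples) and the `throughput_per_second` helper are hypothetical stand-ins, not the Python client or REST API.

```python
from datetime import datetime, timedelta


def throughput_per_second(log, window_start, window_end):
    """Records scored per second within [window_start, window_end).

    `log` is a list of (timestamp, record_count) tuples, a hypothetical
    stand-in for logged scoring payload data.
    """
    seconds = (window_end - window_start).total_seconds()
    if seconds <= 0:
        raise ValueError("window_end must be after window_start")
    # Only requests whose timestamp falls inside the window are counted.
    total = sum(count for ts, count in log if window_start <= ts < window_end)
    return total / seconds


start = datetime(2024, 1, 1, 12, 0, 0)
log = [
    (start + timedelta(seconds=5), 100),
    (start + timedelta(seconds=30), 50),
    (start + timedelta(seconds=70), 40),  # outside the first one-minute window
]
print(throughput_per_second(log, start, start + timedelta(minutes=1)))  # 2.5
```

The calculation reads only the existing log, which is consistent with performance monitoring not sending extra scoring requests to the deployment.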

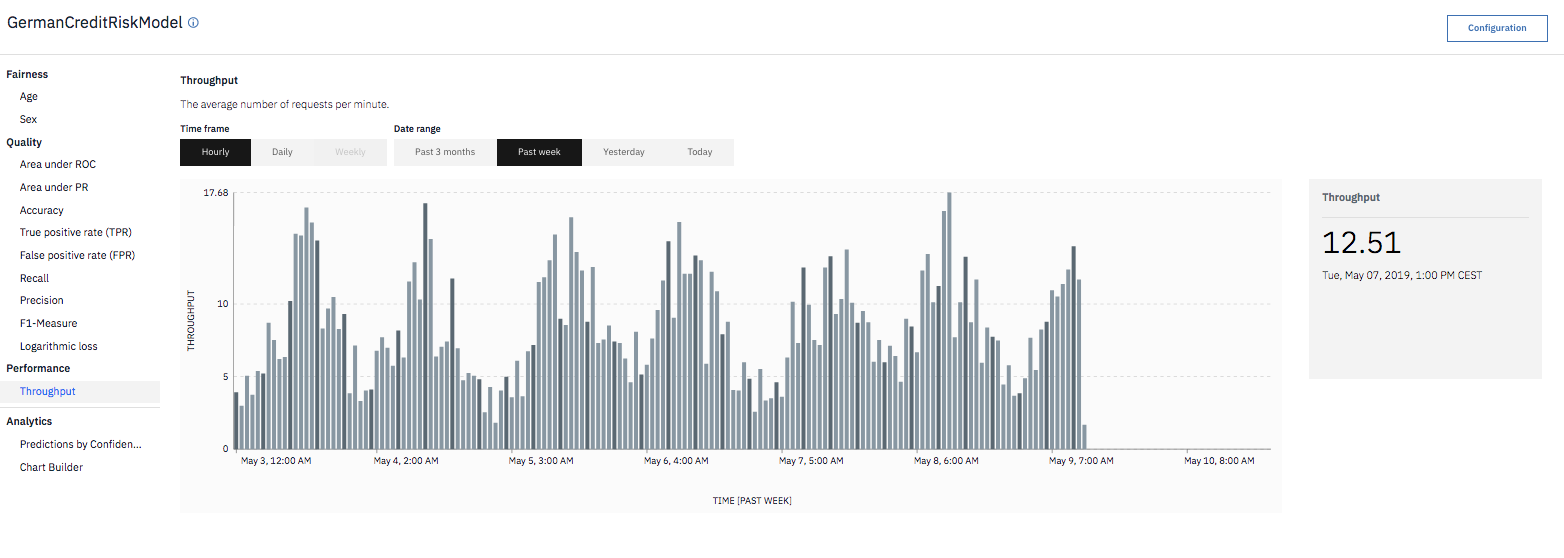

You can review performance metrics value over time on the Insights dashboard:

Supported performance metrics

The following performance metrics are supported:
