You can evaluate prompt templates in projects with watsonx.governance to measure the performance of foundation model tasks and understand how your model generates responses.

With watsonx.governance, you can evaluate prompt templates in projects to measure how effectively your foundation models generate responses for the following task types:

- Classification

- Summarization

- Generation

- Question answering

- Entity extraction

- Retrieval-augmented generation

Before you begin

You must have access to a project to evaluate prompt templates. For more information, see Setting up watsonx.governance.

To run evaluations, you must log in and switch to a watsonx account that has watsonx.governance and watsonx.ai instances installed. Then open a project. You must be assigned the Admin or Editor role for the account to open projects.

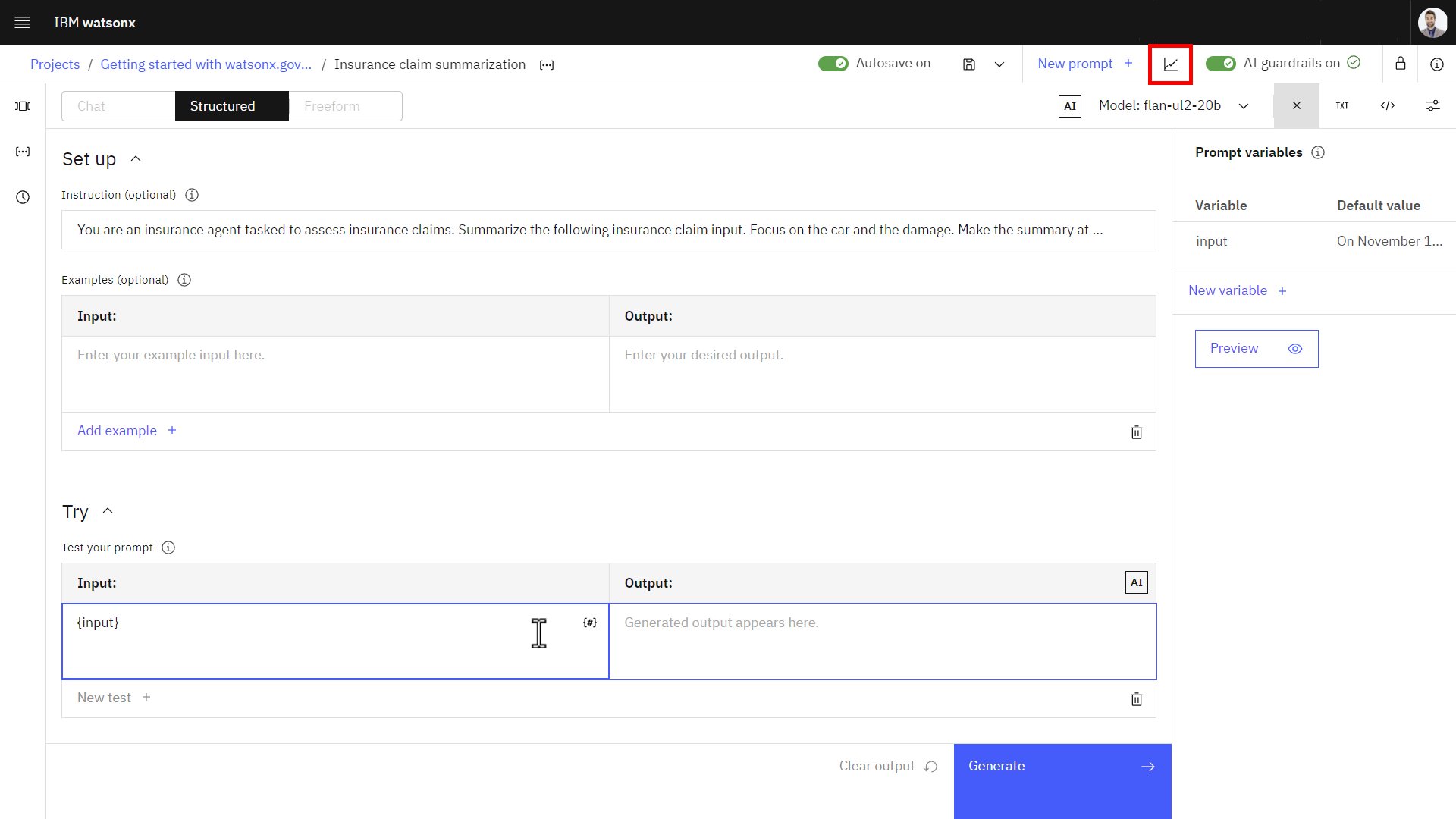

In your project, you must use the watsonx.ai Prompt Lab to create and save a prompt template. You must specify variables when you create prompt templates to enable evaluations. The Try section in the Prompt Lab must contain at least one variable.
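The requirement that a prompt template contain at least one variable can be illustrated with a simple check. This is a minimal sketch, assuming that variables appear as `{variable_name}` placeholders in the prompt text; the helper names `find_prompt_variables` and `can_be_evaluated` are hypothetical and are not part of any watsonx.ai API.

```python
import re

# Assumption: prompt variables are written as {variable_name} placeholders.
VARIABLE_PATTERN = re.compile(r"\{([A-Za-z_][A-Za-z0-9_]*)\}")


def find_prompt_variables(template: str) -> list[str]:
    """Return unique variable names in order of first appearance (hypothetical helper)."""
    names: list[str] = []
    for name in VARIABLE_PATTERN.findall(template):
        if name not in names:
            names.append(name)
    return names


def can_be_evaluated(template: str) -> bool:
    """A template qualifies for evaluation only if it has at least one variable."""
    return len(find_prompt_variables(template)) > 0


template = "Summarize the following {document_type}:\n{text}\nSummary:"
print(find_prompt_variables(template))  # ['document_type', 'text']
print(can_be_evaluated("Summarize this article."))  # False
```

A template without placeholders has nothing to map to test data columns, which is why it cannot be evaluated.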

Watch this video to see how to evaluate a prompt template in a project.


The following sections describe how to evaluate prompt templates in projects and review your evaluation results.

Running evaluations

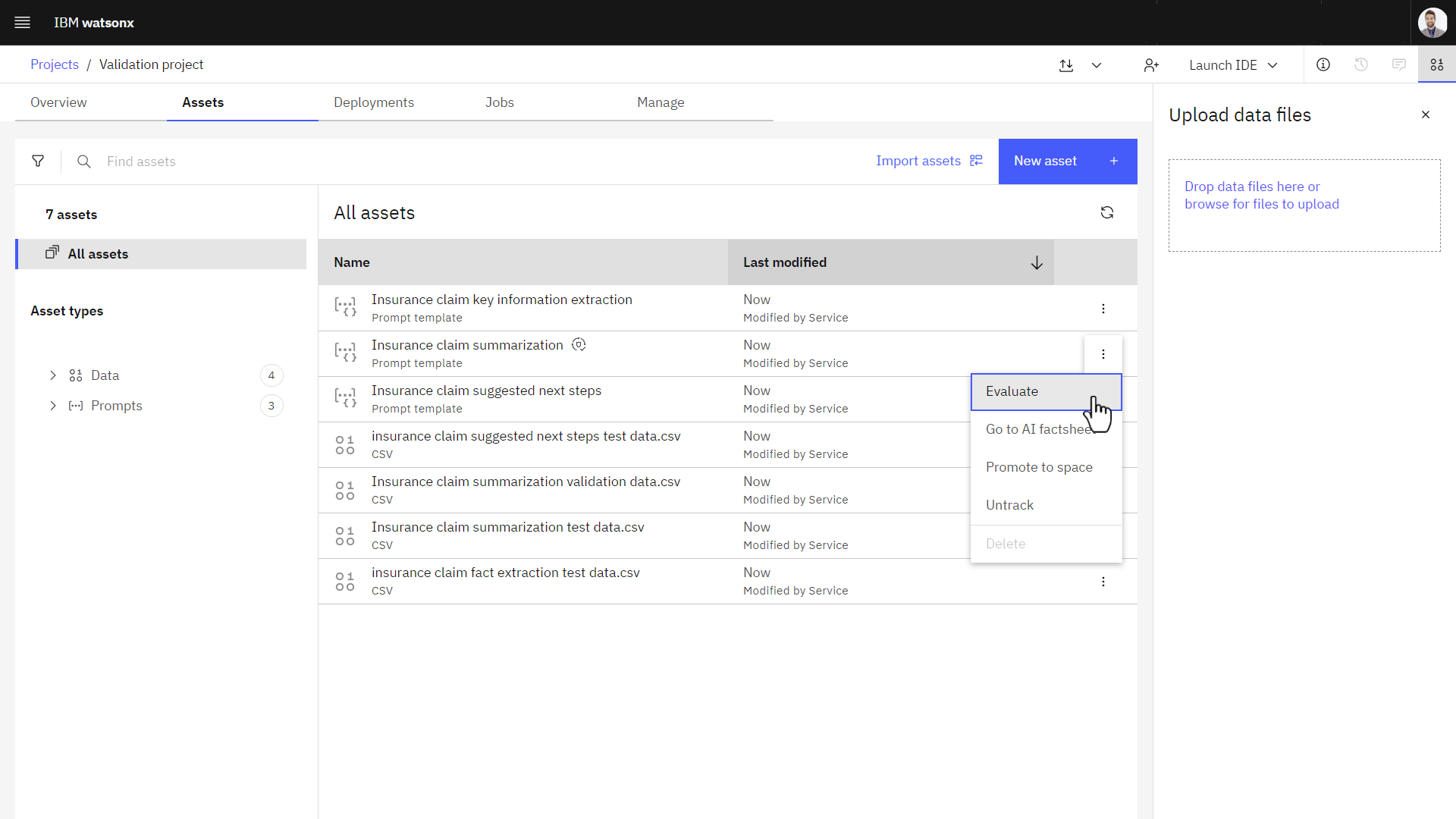

To run a prompt template evaluation, open a saved prompt template from the Assets tab in watsonx.governance and click Evaluate to open the Evaluate prompt template wizard. You can run evaluations only if you are assigned the Admin or Editor role for your project.

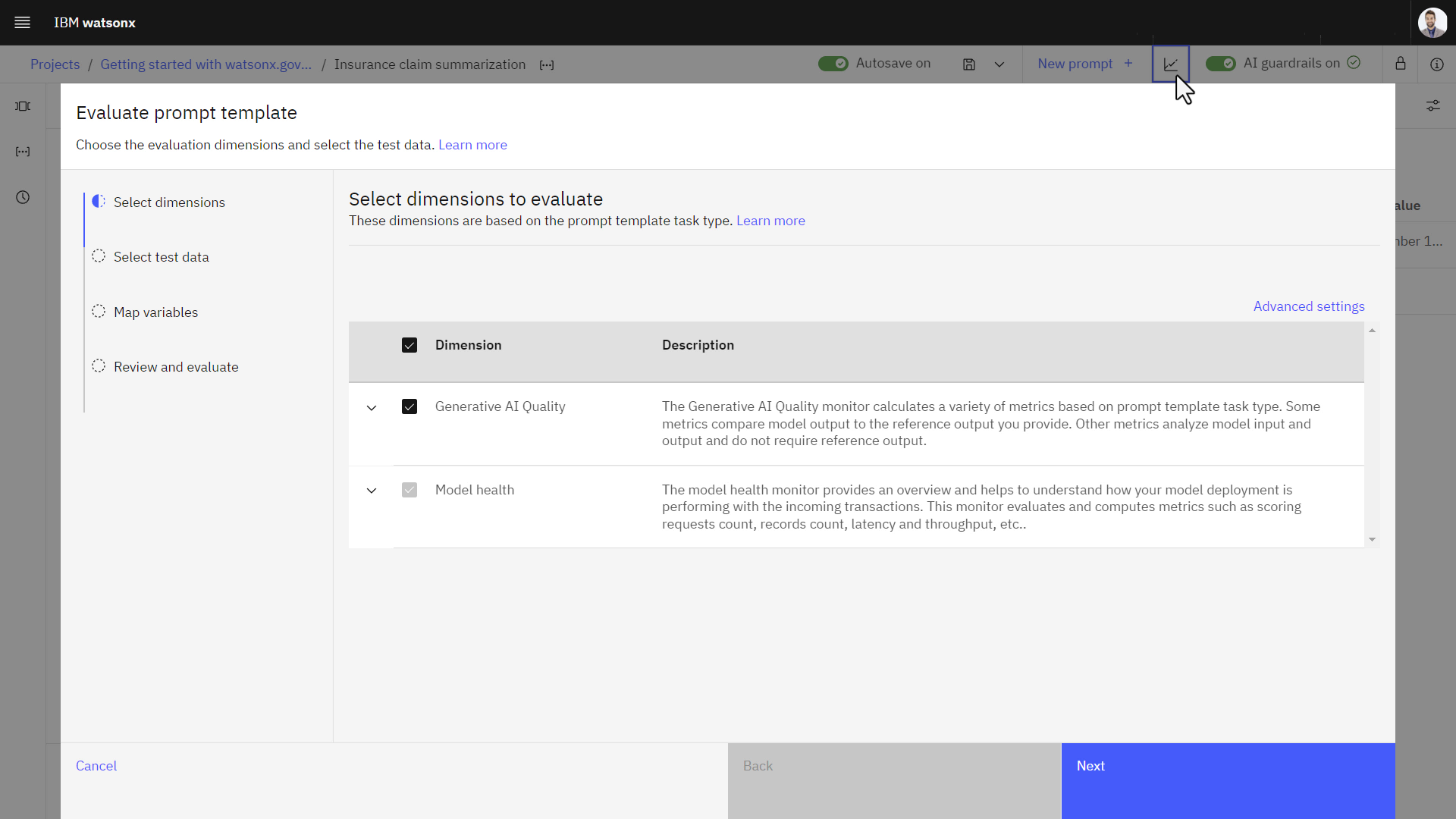

Select dimensions

The Evaluate prompt template wizard displays the dimensions that are available to evaluate for the task type that is associated with your prompt. You can expand the dimensions to view the list of metrics that are used to evaluate the dimensions that you select.

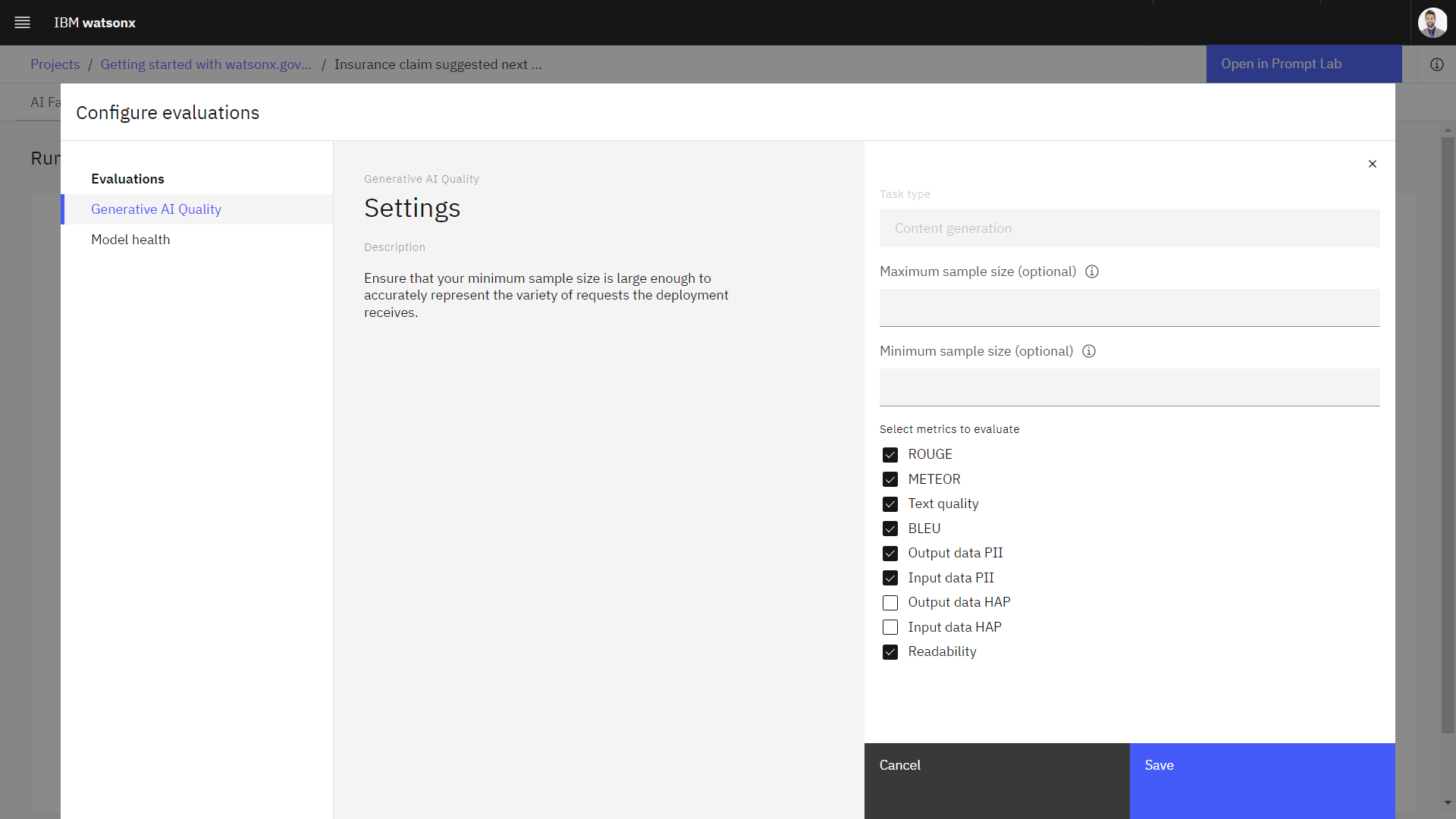

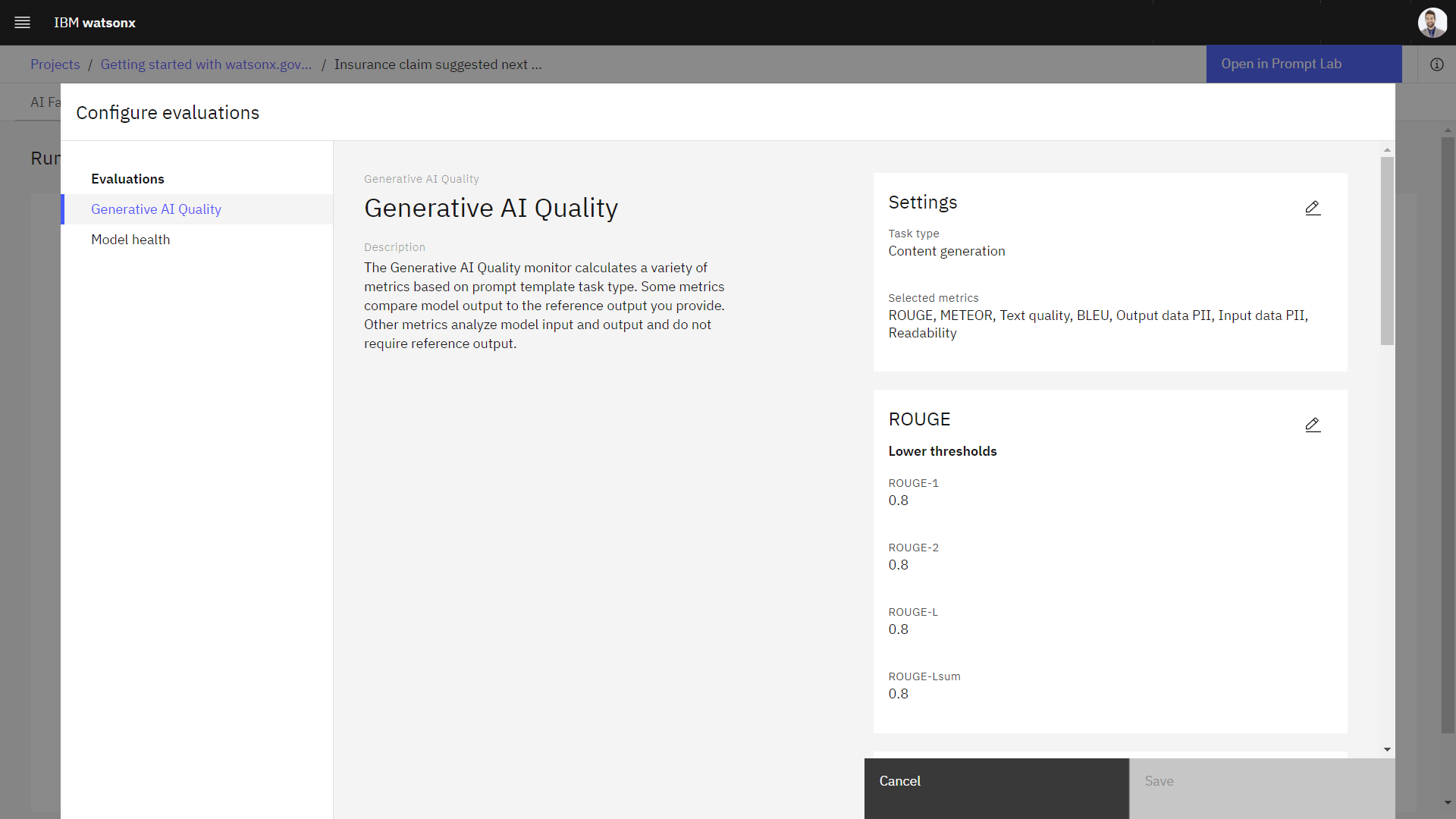

Watsonx.governance automatically configures evaluations for each dimension with default settings. To configure evaluations with different settings, you can select Advanced settings to set sample sizes and select the metrics that you want to use to evaluate your prompt template.

You can also set threshold values for each metric that you select for your evaluations:

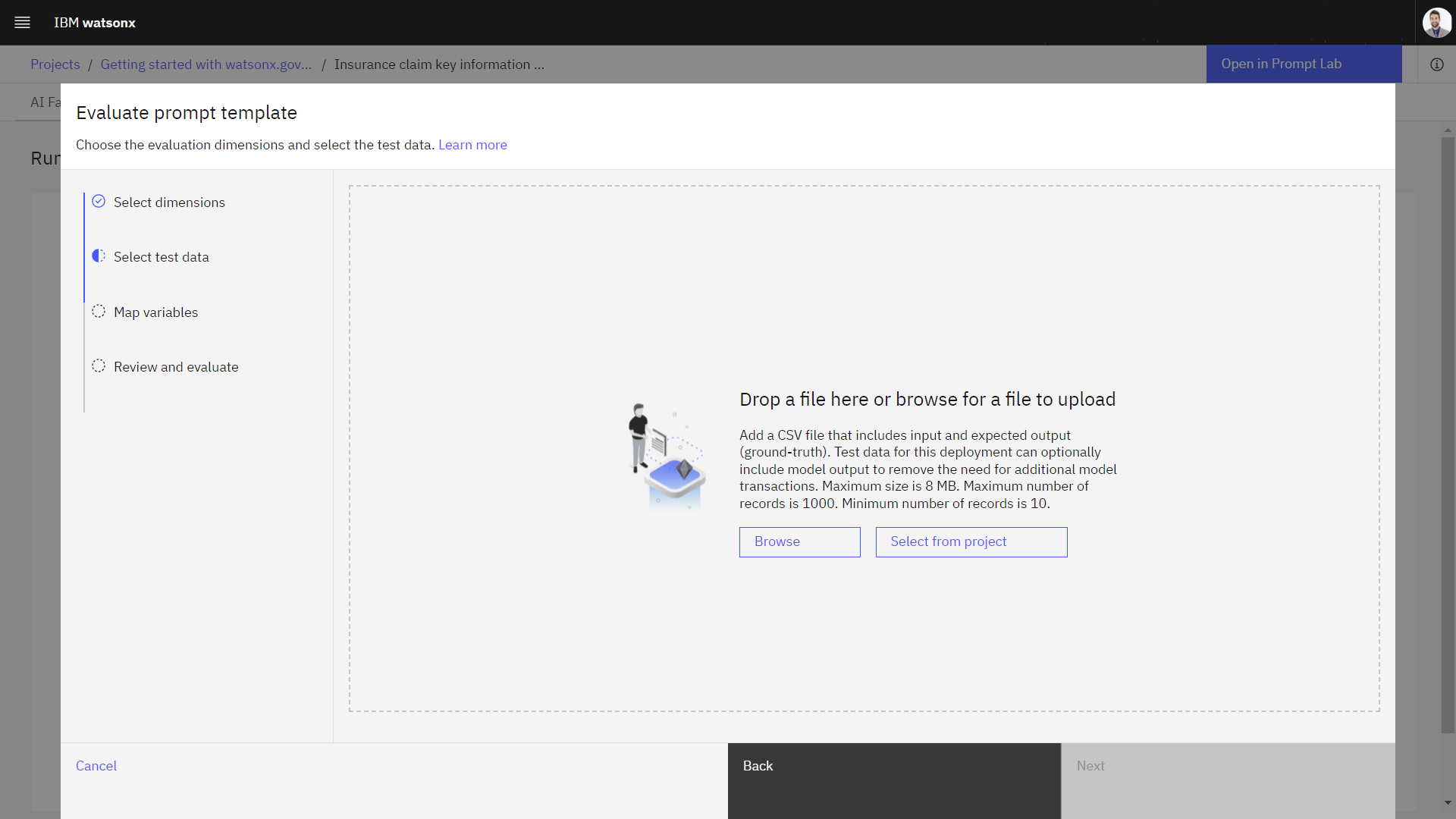
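Conceptually, a threshold marks the point at which a metric score counts as a violation in the evaluation results summary. The following minimal sketch shows that comparison, assuming each threshold is either a lower limit (the score should stay at or above it) or an upper limit (the score should stay at or below it). The metric names, scores, and threshold values are hypothetical and do not reflect watsonx.governance defaults.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    value: float
    # True: the score must stay at or above the value (lower limit).
    # False: the score must stay at or below the value (upper limit).
    lower_limit: bool = True


def find_violations(scores: dict[str, float],
                    thresholds: dict[str, Threshold]) -> dict[str, float]:
    """Return the metrics whose scores fall outside their configured thresholds."""
    violations = {}
    for metric, score in scores.items():
        threshold = thresholds.get(metric)
        if threshold is None:
            continue  # No threshold configured for this metric.
        if threshold.lower_limit and score < threshold.value:
            violations[metric] = score
        elif not threshold.lower_limit and score > threshold.value:
            violations[metric] = score
    return violations


# Hypothetical metric names, scores, and thresholds for illustration only.
thresholds = {
    "rouge_l": Threshold(0.8),
    "exact_match": Threshold(0.8),
    "hap_score": Threshold(0.0, lower_limit=False),
}
scores = {"rouge_l": 0.62, "exact_match": 0.85, "hap_score": 0.0}
print(find_violations(scores, thresholds))  # {'rouge_l': 0.62}
```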

Select test data

To select test data, you can browse to upload a CSV file or you can select an asset from your project. The test data that you select must contain reference columns and columns for each prompt variable.
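Before you upload a CSV file, you might want to confirm that it has a reference column and a column for each prompt variable. This is a minimal sketch using Python's standard `csv` module; the function `missing_columns` and the column names `document_type`, `text`, and `reference_summary` are hypothetical examples, not names that watsonx.governance requires.

```python
import csv
import io


def missing_columns(csv_text: str, prompt_variables: list[str],
                    reference_columns: list[str]) -> list[str]:
    """Return required columns that are absent from the CSV header row."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])
    present = {column.strip() for column in header}
    required = list(reference_columns) + list(prompt_variables)
    return [column for column in required if column not in present]


# Hypothetical test data: one column per prompt variable plus a reference column.
test_data = (
    "document_type,text,reference_summary\n"
    "news article,The city council approved the new budget on Monday.,Council approves budget.\n"
)
print(missing_columns(test_data, ["document_type", "text"], ["reference_summary"]))  # []
print(missing_columns(test_data, ["document_type", "text", "tone"], ["reference_summary"]))  # ['tone']
```

To check a file on disk, read it with `open(path, newline="")` and pass its contents instead of the inline string.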

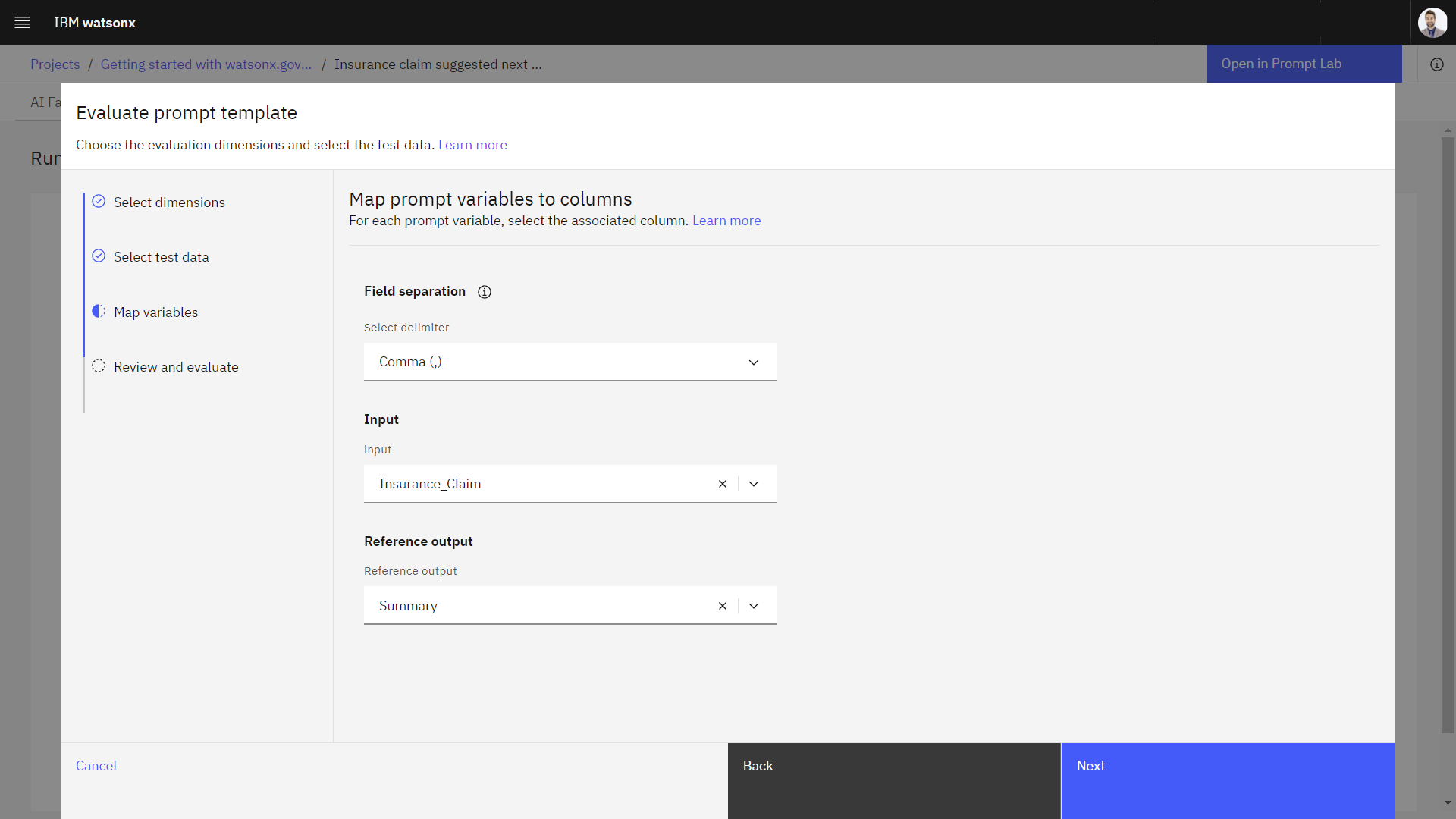

Map variables

You must map prompt variables to the associated columns from your test data.
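Mapping matters when your column names differ from your variable names. The following minimal sketch shows the idea: each test data row fills the template by looking up the mapped column for every variable. It assumes `{variable_name}` placeholder syntax, and the function `build_prompts`, the template, and the column names are hypothetical; this is not how watsonx.governance implements mapping internally.

```python
import csv
import io


def build_prompts(template: str, csv_text: str,
                  variable_to_column: dict[str, str]) -> list[str]:
    """Fill the template once per test data row by using the variable-to-column mapping."""
    prompts = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        prompt = template
        for variable, column in variable_to_column.items():
            # Replace only the exact placeholder, so other braces in the text are untouched.
            prompt = prompt.replace("{" + variable + "}", row[column])
        prompts.append(prompt)
    return prompts


# Hypothetical template and test data whose column names differ from the variable names.
template = "Summarize the following {document_type}:\n{text}\nSummary:"
csv_text = (
    "doc_kind,body,reference_summary\n"
    "email,Please send the report by Friday.,Report due Friday.\n"
)
mapping = {"document_type": "doc_kind", "text": "body"}
prompts = build_prompts(template, csv_text, mapping)
print(prompts)
```

The reference column (`reference_summary` here) is not mapped to a variable; it holds the expected output that metrics compare against.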

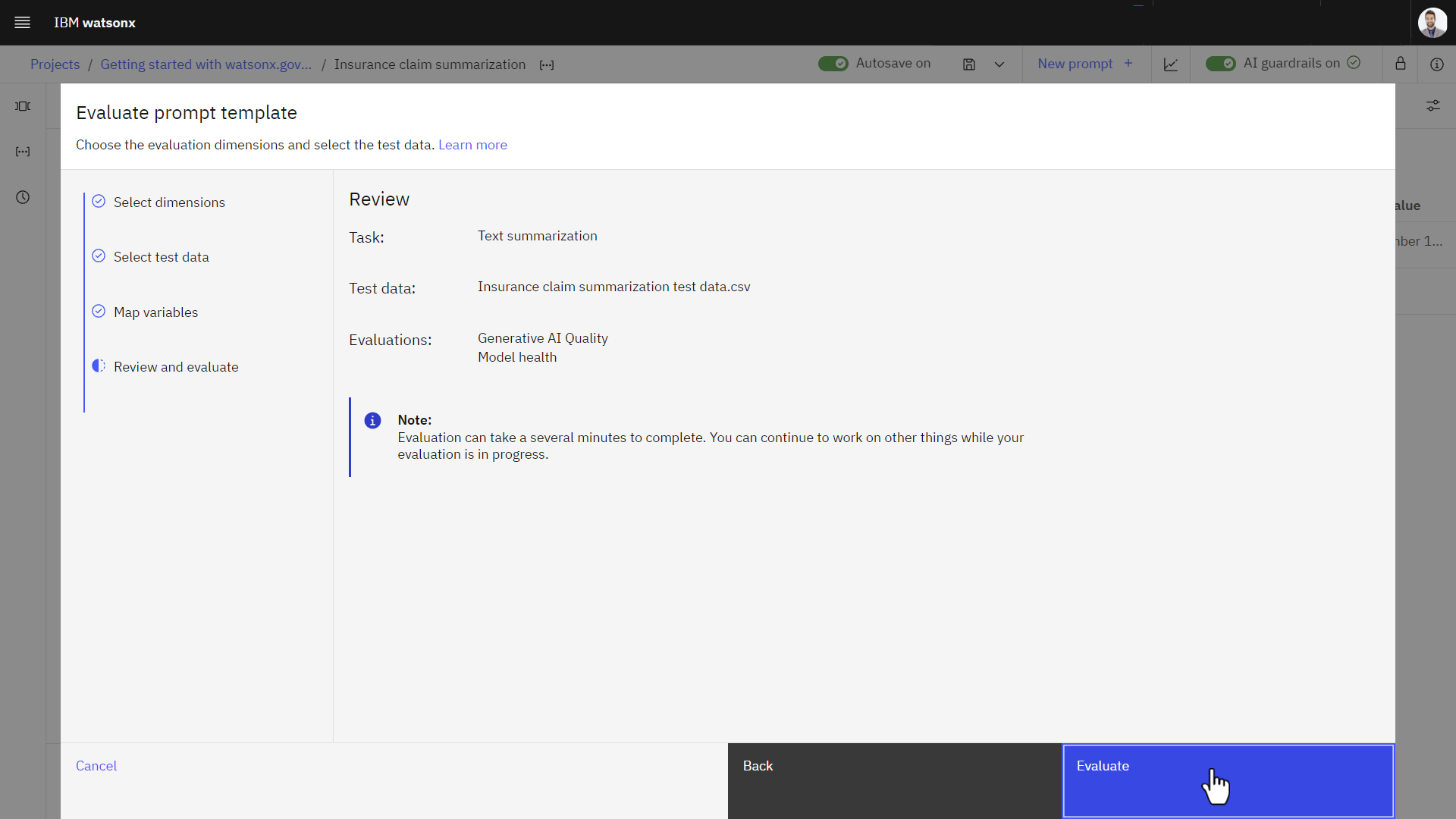

Review and evaluate

Before you run your prompt template evaluation, you can review your selections for the prompt task type, the test data, and the type of evaluation that runs.

Reviewing evaluation results

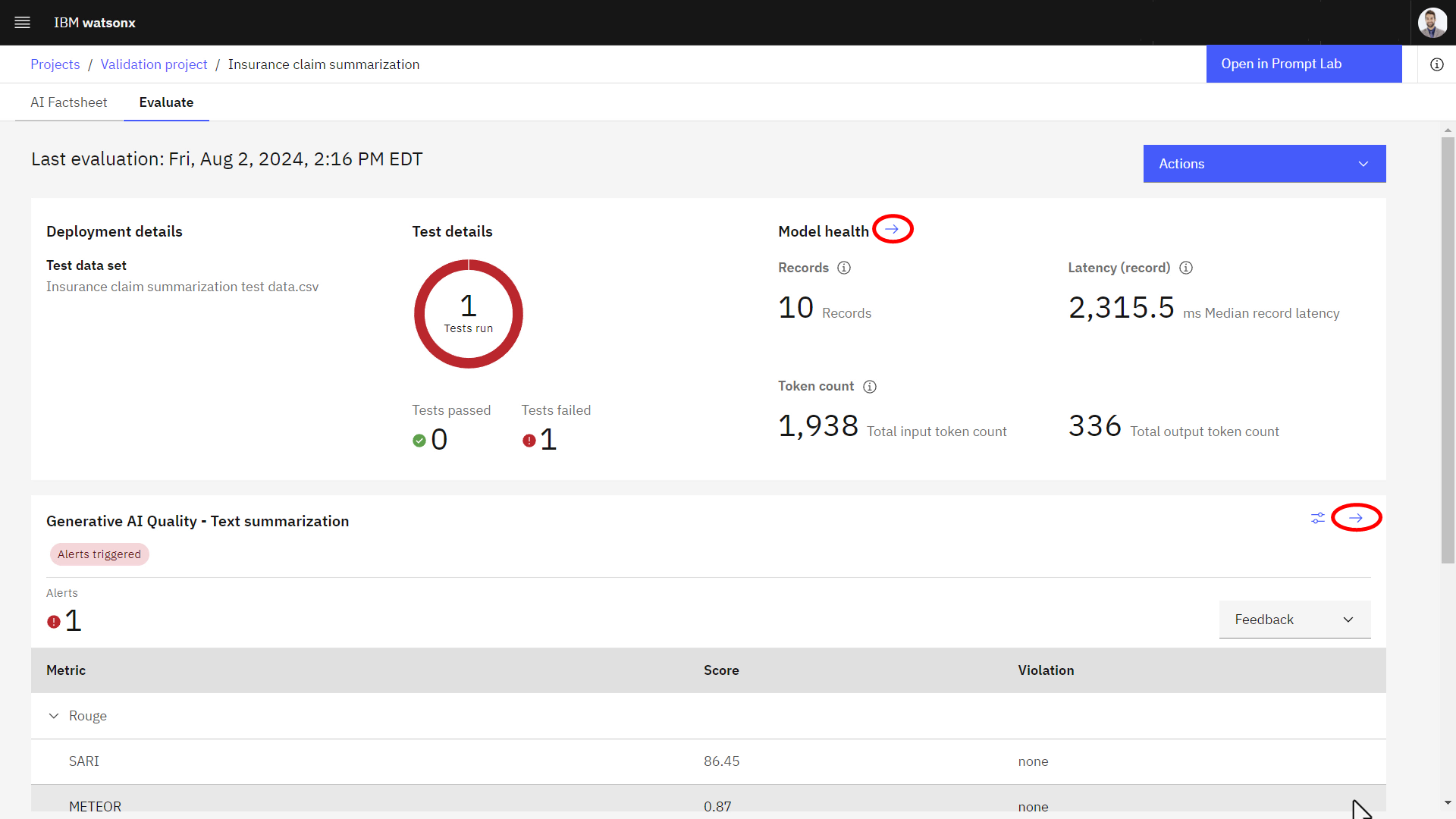

When your evaluation completes, you can review a summary of your evaluation results on the Evaluate tab in watsonx.governance to gain insights about your model performance. The summary provides an overview of metric scores and violations of default score thresholds for your prompt template evaluations.

If you are assigned the Viewer role for your project, you can select Evaluate from the asset list on the Assets tab to view evaluation results.

To analyze results, you can click the arrow next to your prompt template evaluation to view data visualizations of your results over time. You can also analyze results from the model health evaluation that is run by default during prompt template evaluations to understand how efficiently your model processes your data.


The Actions menu also provides the following options to help you analyze your results:

- Evaluate now: Run an evaluation with a different test data set.

- All evaluations: Display a history of your evaluations to understand how your results change over time.

- Configure monitors: Configure evaluation thresholds and sample sizes.

- View model information: View details about your model to understand how your deployment environment is set up.

If you track prompt templates, you can review evaluation results to gain insights about your model performance throughout the AI lifecycle.
