The following limitations and known issues apply to watsonx.

Data Refinery

If you use Data Refinery, you might encounter these known issues and restrictions when you refine data.

Personal credentials are not supported for connected data assets in Data Refinery

If you create a connected data asset with personal credentials, other users must use the following workaround to use the connected data asset in Data Refinery.

Workaround:

- Go to the project page and click the link for the connected data asset to open the preview.

- Enter credentials.

- Open Data Refinery and use the authenticated connected data asset for a source or target.

Cannot view jobs in Data Refinery flows in the new projects UI

If you're working in the new projects UI, you do not have the option to view jobs from the options menu in Data Refinery flows.

Workaround: To view jobs in Data Refinery flows, open a Data Refinery flow, click the Jobs icon, and select Save and view jobs. You can view a list of all jobs in your project on the Jobs tab.


Notebook issues

You might encounter some of these issues when getting started with and using notebooks.

Manual installation of some TensorFlow libraries is not supported

Some TensorFlow libraries are preinstalled, but if you try to install additional TensorFlow libraries yourself, you get an error.

Connection to notebook kernel is taking longer than expected after running a code cell

If you try to reconnect to the kernel and immediately run a code cell (or if the kernel reconnection happened during code execution), the notebook doesn't reconnect to the kernel and no output is displayed for the code cell. You need to manually reconnect to the kernel by clicking Kernel > Reconnect. When the kernel is ready, you can try running the code cell again.

Using the predefined sqlContext object in multiple notebooks causes an error

You might receive an Apache Spark error if you use the predefined sqlContext object in multiple notebooks. Create a new sqlContext object for each notebook. See this Stack Overflow explanation.

Connection failed message

If your kernel stops, your notebook is no longer automatically saved. To save it manually, click File > Save. You should get a Notebook saved message in the kernel information area, which appears before the Spark version. If you get a message that the kernel failed, click Kernel > Reconnect to reconnect your notebook to the kernel. If nothing you do restarts the kernel and you can't save the notebook, download it to save your changes by clicking File > Download as > Notebook (.ipynb). Then create a new notebook based on your downloaded notebook file.

Hyperlinks to notebook sections don't work in preview mode

If your notebook contains sections that you link to from, for example, an introductory section at the top of the notebook, the links to these sections don't work if the notebook was opened in view-only mode in Firefox. However, these links work if you open the notebook in edit mode.

Can't connect to notebook kernel

If you try to run a notebook and you see the message Connecting to Kernel, followed by Connection failed. Reconnecting, and finally a connection failed error message, your firewall might be blocking the notebook from running.

If Watson Studio is installed behind a firewall, you must add the WebSocket connection wss://dataplatform.cloud.ibm.com to the firewall settings. Enabling this WebSocket connection is required when you're using notebooks and RStudio.

Machine learning issues

You might encounter some of these issues when working with machine learning tools.

Region requirements

You can access the Prompt Lab tool and foundation model inferencing only if you provisioned both the Watson Studio and the Watson Machine Learning services in the Dallas region.

You can associate a Watson Machine Learning service instance with your project only when the Watson Machine Learning service instance and the Watson Studio instance are located in the same region.

Accessing links if you create a service instance while associating a service with a project

While you are associating a Watson Machine Learning service with a project, you have the option of creating a new service instance. If you choose to create a new service, the links on the service page might not work. To access the service terms, APIs, and documentation, right-click the links and open them in new windows.

Federated Learning assets cannot be searched in All assets, search results, or filter results in the new projects UI

You cannot search Federated Learning assets from the All assets view, the search results, or the filter results of your project.

Workaround: Click the Federated Learning asset to open the tool.

Deployment issues

- A deployment that is inactive (no scores) for a set time (24 hours for the free plan or 120 hours for a paid plan) is automatically hibernated. When a new scoring request is submitted, the deployment is reactivated and the score request is served. Expect a brief delay of 1 to 60 seconds for the first score request after activation, depending on the model framework.

- For some frameworks, such as SPSS Modeler, the first score request for a deployed model after hibernation might result in a 504 error. If this happens, submit the request again; subsequent requests should succeed.
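Because the recommended response to a post-hibernation 504 is simply to resubmit, client code can retry that one status automatically. The following is a minimal sketch under stated assumptions: the scoring call is modeled as a hypothetical callable that returns an `(HTTP status, response body)` pair, and the retry count and delay are illustrative values, not documented watsonx settings.

```python
import time
from typing import Callable, Tuple

# Hypothetical stand-in for a scoring call: returns (HTTP status code, response body).
# This is NOT a watsonx API; wrap your real REST call to match this shape.
ScoreCall = Callable[[], Tuple[int, str]]


def score_with_retry(send: ScoreCall, max_retries: int = 3, delay_s: float = 5.0) -> Tuple[int, str]:
    """Send a score request, retrying only when the response is HTTP 504."""
    status, body = send()
    retries = 0
    while status == 504 and retries < max_retries:
        time.sleep(delay_s)  # give the reactivating deployment time to load
        status, body = send()
        retries += 1
    return status, body  # any non-504 result, or the last 504 if retries run out
```

Retrying only on 504 keeps genuine client errors (for example, a 400 caused by a bad payload) from being masked by repeated requests.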

AutoAI known limitations

- Currently, AutoAI experiments do not support double-byte character sets. AutoAI only supports CSV files with ASCII characters. Users must convert any non-ASCII characters in the file name or content, and provide input data as a CSV as defined in the CSV standard.

- To interact programmatically with an AutoAI model, use the REST API instead of the Python client. The APIs for the Python client required to support AutoAI are not generally available at this time.
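One way to convert accented Latin characters before uploading data to AutoAI is Unicode NFKD normalization followed by dropping non-ASCII code points. This is a minimal sketch using only the Python standard library; the function names are hypothetical. Note the assumption it makes: characters with no ASCII decomposition (for example, Japanese or Chinese text) are removed entirely, so double-byte content needs a mapping of your own choosing instead.

```python
import csv
import io
import unicodedata


def to_ascii(text: str) -> str:
    """Decompose accented characters (NFKD) and drop anything outside ASCII."""
    decomposed = unicodedata.normalize("NFKD", text)  # "é" -> "e" + combining accent
    return decomposed.encode("ascii", "ignore").decode("ascii")


def csv_to_ascii(csv_text: str) -> str:
    """Rewrite every cell of a CSV document as ASCII while keeping the CSV quoting intact."""
    reader = csv.reader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\r\n")  # CRLF line endings
    for row in reader:
        writer.writerow([to_ascii(cell) for cell in row])
    return out.getvalue()
```

Apply `to_ascii` to the file name as well, because the limitation covers both the name and the content.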

Data module not found in IBM Federated Learning

The data handler for IBM Federated Learning is trying to extract a data module from the FL library but is unable to find it. You might see the following error message:

ModuleNotFoundError: No module named 'ibmfl.util.datasets'

The issue might result from using an outdated DataHandler. Review and update your DataHandler to conform to the latest spec. Use the most recent MNIST data handler as a reference, or ensure that your sample versions are up to date.

SPSS Modeler issues

You might encounter some of these issues when working in SPSS Modeler.

SPSS Modeler runtime restrictions

Watson Studio does not include SPSS functionality in Peru, Ecuador, Colombia and Venezuela.

Error when trying to stop a running flow

When running an SPSS Modeler flow, you might encounter an error if you try to stop the flow from the Environments page under your project's Manage tab. To completely stop the SPSS Modeler runtime and CUH consumption, close the browser tabs where you have the flow open.

Imported Data Asset Export nodes sometimes fail to run

When you create a new flow by importing an SPSS Modeler stream (.str file), migrate the export node, and then run the resulting Data Asset Export node, the run might fail. To work around this issue, rerun the node, change the output name and the If the data set already exists option in the node properties, and then run the node again.

Data preview may fail if table metadata has changed

In some cases, when using the Data Asset import node to import data from a connection, data preview may return an error if the underlying table metadata (data model) has changed. Recreate the Data Asset node to resolve the issue.

Unable to view output after running an Extension Output node

When running an Extension Output node with the Output to file option selected, the resulting output file returns an error when you try to open it from the Outputs panel.

Unable to preview Excel data from IBM Cloud Object Storage connections

Currently, you can't preview .xls or .xlsx data from an IBM Cloud Object Storage connection.

Numbers interpreted as a string

Any number with a precision greater than or equal to 32 and a scale equal to 0 is interpreted as a string. To change this behavior, use a Filler node to cast the field to a real number with the expression to_real(@FIELD).

SuperNode containing Import nodes

If your flow has a SuperNode that contains an Import node, the input schema may not be set correctly when you save the model with the Scoring branch option. To work around this issue, expand the SuperNode before saving.

Exporting to a SAV file

When using the Data Asset Export node to export to an SPSS Statistics SAV file (.sav), the Replace data asset option won't work if the input schema doesn't match the output schema. The schema of the existing file you want to replace must match.

Migrating Import nodes

If you import into your flow a stream (.str) that was created in SPSS Modeler desktop and contains one or more unsupported Import nodes, you're prompted to migrate the Import nodes to data assets. If the stream contains multiple Import nodes that use the same data file, you must first add that file to your project as a data asset before you migrate, because the migration can't upload the same file to more than one Import node. After you add the data asset to your project, reopen the flow and proceed with the migration by using the new data asset.

Text Analytics settings aren't saved

After closing the Text Analytics Workbench, any filter settings or category build settings you modified aren't saved to the node as they should be.

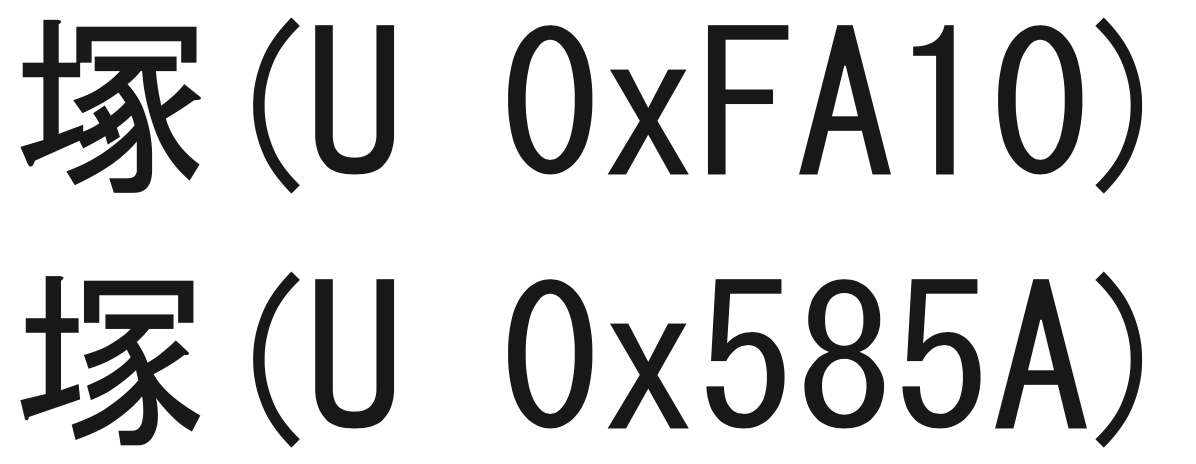

Merge node and unicode characters

The Merge node treats the following very similar Japanese characters as the same character.

Watson Pipelines known issues

These issues pertain to Watson Pipelines.

Nesting loops more than 2 levels can result in pipeline error

Nesting loops more than two levels deep can result in an error when you run the pipeline, such as Error retrieving the run. The logs might show an error such as text in text not resolved: neither pipeline_input nor node_output.

If you are looping with output from a Bash script, the log might list an error like this: PipelineLoop can't be run; it has an invalid spec: non-existent variable in $(params.run-bash-script-standard-output). To resolve the problem, do not nest loops more than two levels deep.

Asset browser does not always reflect count for total numbers of asset type

When you select an asset from the asset browser, such as when you choose a source for a Copy node, some asset types show the total number of available assets of that type, but notebooks do not. This is a current limitation.

Cannot delete pipeline versions

Currently, you cannot delete saved versions of pipelines that you no longer need.

Deleting an AutoAI experiment fails under some conditions

Using a Delete AutoAI experiment node to delete an AutoAI experiment that was created from the Projects UI does not delete the AutoAI asset. However, the rest of the flow can complete successfully.

Cache appears enabled but is not enabled

If the Copy assets Pipelines node's Copy mode is set to Overwrite, cache is displayed as enabled but remains disabled.

Watson Pipelines limitations

These limitations apply to Watson Pipelines.

- Single pipeline limits

- Limitations by configuration size

- Input and output size limits

- Batch input limited to data assets

Single pipeline limits

These limitations apply to a single pipeline, regardless of configuration.

- A single pipeline cannot contain more than 120 standard nodes.

- A pipeline with a loop cannot contain more than 600 nodes across all iterations (for example, 60 iterations with 10 nodes each).
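The loop limit is a product of iterations and nodes per iteration, so it can be checked before a run. This is a minimal sketch with hypothetical function names; it assumes the standard-node limit and the loop-node limit are checked independently, which the limits above do not spell out.

```python
MAX_STANDARD_NODES = 120  # standard nodes in one pipeline
MAX_LOOP_NODES = 600      # nodes counted across all loop iterations in one pipeline


def loop_node_count(iterations: int, nodes_per_iteration: int) -> int:
    """Total nodes a loop contributes: every iteration runs every node in the loop body."""
    return iterations * nodes_per_iteration


def within_single_pipeline_limits(standard_nodes: int, iterations: int = 0, nodes_per_iteration: int = 0) -> bool:
    """Assumption: the two limits are independent ceilings, both of which must hold."""
    return (standard_nodes <= MAX_STANDARD_NODES
            and loop_node_count(iterations, nodes_per_iteration) <= MAX_LOOP_NODES)
```

For example, 60 iterations of 10 nodes reaches the 600-node ceiling exactly, while 61 iterations of 10 nodes exceeds it.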

Limitations by configuration size

Small configuration

A SMALL configuration supports 600 standard nodes (across all active pipelines) or 300 nodes run in a loop. For example:

- 30 standard pipelines with 20 nodes run in parallel = 600 standard nodes

- 3 pipelines containing a loop with 10 iterations and 10 nodes in each iteration = 300 nodes in a loop

Medium configuration

A MEDIUM configuration supports 1200 standard nodes (across all active pipelines) or 600 nodes run in a loop. For example:

- 30 standard pipelines with 40 nodes run in parallel = 1200 standard nodes

- 6 pipelines containing a loop with 10 iterations and 10 nodes in each iteration = 600 nodes in a loop

Large configuration

A LARGE configuration supports 4800 standard nodes (across all active pipelines) or 2400 nodes run in a loop. For example:

- 80 standard pipelines with 60 nodes run in parallel = 4800 standard nodes

- 24 pipelines containing a loop with 10 iterations and 10 nodes in each iteration = 2400 nodes in a loop
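The examples above are multiplication checks against each configuration's ceiling. This minimal sketch encodes the three configurations; the names are hypothetical, and it assumes, as the "or" in each description suggests, that the standard-node and loop-node figures are alternative ceilings. How a mixed workload of standard and loop nodes is counted is not specified here, so the sketch checks one kind at a time.

```python
# Ceilings per configuration, taken from the limits above.
LIMITS = {
    "SMALL": {"standard": 600, "loop": 300},
    "MEDIUM": {"standard": 1200, "loop": 600},
    "LARGE": {"standard": 4800, "loop": 2400},
}


def standard_load(pipelines: int, nodes_per_pipeline: int) -> int:
    """Standard nodes across pipelines running in parallel."""
    return pipelines * nodes_per_pipeline


def loop_load(pipelines: int, iterations: int, nodes_per_iteration: int) -> int:
    """Nodes run in loops across pipelines running in parallel."""
    return pipelines * iterations * nodes_per_iteration


def fits(configuration: str, nodes: int, kind: str) -> bool:
    """kind is "standard" or "loop"; checks a single kind against its ceiling."""
    return nodes <= LIMITS[configuration][kind]
```

Each example in the list lands exactly on its ceiling. For instance, `loop_load(3, 10, 10)` is 300 for SMALL, and one more looping pipeline (400 nodes) would not fit.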

Input and output size limits

Input and output values, which include pipeline parameters, user variables, and generic node inputs and outputs, cannot exceed 10 KB of data.
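To catch oversized parameters before a run, you can measure a value's serialized size locally. This is a minimal sketch with hypothetical names that rests on two assumptions: that 10 KB means 10 × 1024 bytes, and that size is measured as UTF-8 encoded JSON. The platform's own measurement method is not stated here, so treat the result as an estimate.

```python
import json

# Assumption: 10 KB read as 10 * 1024 bytes of UTF-8 encoded JSON.
MAX_VALUE_BYTES = 10 * 1024


def value_size_bytes(value) -> int:
    """Size of a JSON-serializable value once encoded as UTF-8 JSON text."""
    return len(json.dumps(value).encode("utf-8"))


def fits_io_limit(value) -> bool:
    """True if the serialized value stays within the assumed 10 KB limit."""
    return value_size_bytes(value) <= MAX_VALUE_BYTES
```

A large payload such as a full dataset should be stored as a data asset and passed by reference rather than as a parameter value.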

Batch input limited to data assets

Currently, input for batch deployment jobs is limited to data assets. This means that certain types of deployments, which require JSON input or multiple files as input, are not supported. For example, SPSS models and Decision Optimization solutions that require multiple files as input are not supported.