Quickly add documents and images to chat about to foundation model prompts that you create in the Prompt Lab.

You can associate the following types of files with your prompt:

- Grounding documents

-

If you want a foundation model to incorporate current, factual information in the output that it generates, ground the foundation model input in relevant facts by associating documents with the prompt.

This pattern, which is known as retrieval-augmented generation (RAG), is especially helpful in question-answering scenarios where you want the foundation model to generate accurate answers.

- Images

-

You might want to add an image and convert the visual information into text to help with the following types of tasks:

- Automate the generation of alternative text for images to help blind users to perceive meaningful visuals on a web page and meet accessibility requirements

- Summarize photos of property damage that accompany insurance claims

- Convert images from a document into text before the document is used as grounding information for a RAG use case.

Chatting with uploaded documents

To quickly test both the quality of a document and the capabilities of a foundation model before you use the model or document in a retrieval-augmented generation (RAG) solution, chat with the document in Prompt Lab.

Text from the document that you upload is converted into text embeddings and stored in a vector index where the information can be quickly searched. When a question is submitted by using the prompt, a similarity search runs on the vector index to find relevant content. The top search results are added to the prompt as context and submitted together with the original question to the foundation model as input.

For testing purposes, you can accept the default settings for the in-memory vector store that is created for you automatically.

If you decide that you want to implement a more robust solution that uses vectorized documents, see Adding vectorized documents for grounding foundation model prompts to learn about more configuration options.

To chat with a document, complete the following steps:

-

From the Prompt Lab in chat mode, select a foundation model, and then specify any model parameters that you want to use for prompting.

-

Click the Upload documents icon

, and then choose Add documents.

Browse to upload a file or choose a data asset in your project with the file that you want to add. For more information about supported file types, see Grounding documents.

If you want to use a more robust vector index than the default in-memory index to store your documents, see Creating a vector index.

-

Click Create.

A message might be displayed that says the vector index build is in progress. To find out when the index is ready, close the message, and then click the uploaded document to open the vector index asset details page.

-

Submit questions about information from the document to see how well the model can use the contextual information to answer your questions.

For example, you can ask the foundation model to summarize the document or ask about concepts that are explained in the document.

If answers that you expect to be returned are not found, you can review the configuration of the vector index asset and make adjustments. See Managing a vector index.

Grounding documents

The contextual information that you add can include product documentation, company policy details, industry performance data, facts and figures related to a particular subject, or whatever content matters for your use case. Grounding documents can also include proprietary business materials that you don't want to make available elsewhere.

The following table shows the file types that can be added as grounding documents.

| Supported file type | Maximum total file size |

|---|---|

| DOCX | 10 MB |

| 50 MB | |

| PPTX | 300 MB |

| TXT | 5 MB |

You can add one or more files to your prompt. The total file size that is allowed for the set of grounding documents varies based on the file types in the set. The file type with the lowest total file size allowed determines the size limit for all of the grounding documents. For example, if the set includes three PPTX files, then the file size limit is 300 MB, which is the maximum size allowed for PPTX files. If the set of files includes two PPTX files and one TXT file, then the file size limit is 5 MB because the limit for TXT files is applied to the set.

Chatting with uploaded images

Upload an image to add to the input that you submit to a multimodal foundation model. After you add the image, you can ask questions about the image content.

Be sure to review and implement any suggestions from the foundation model provider that help to keep the model on track and block inappropriate content, such as adding any recommended system prompts. For more information about how to edit a system prompt, see Chat templates.

The image requirements are as follows:

- Add one image per chat

- Supported file types are PNG or JPEG

- Size can be up to 4 MB

- One image is counted as approximately 1,200–3,000 tokens depending on the image size

To chat with an image, complete the following steps:

-

From the Prompt Lab in chat mode, select a foundation model that can convert images to text, and then specify any model parameters that you want to use for prompting.

-

Click the Upload documents icon

, and then choose Add image.

Browse to upload an image file or choose a data asset in your project with the image file that you want to add.

-

Click Add.

-

Enter a question about the image, and then submit the prompt.

Be specific about what you want to know about the image.

-

Optional: Save the prompt as a prompt template or prompt session.

Note: You cannot save a chat with an added image as a prompt notebook.For more information, see Saving your work.

The image that you add is saved in the IBM Cloud Object Storage bucket that is associated with your project as a data asset.

See sample prompts that are used to chat about images with the following foundation models:

Programmatic alternative

You can also use the watsonx.ai chat API to prompt a foundation model about images. For more information, see Adding generative chat function to your applications with the chat API.

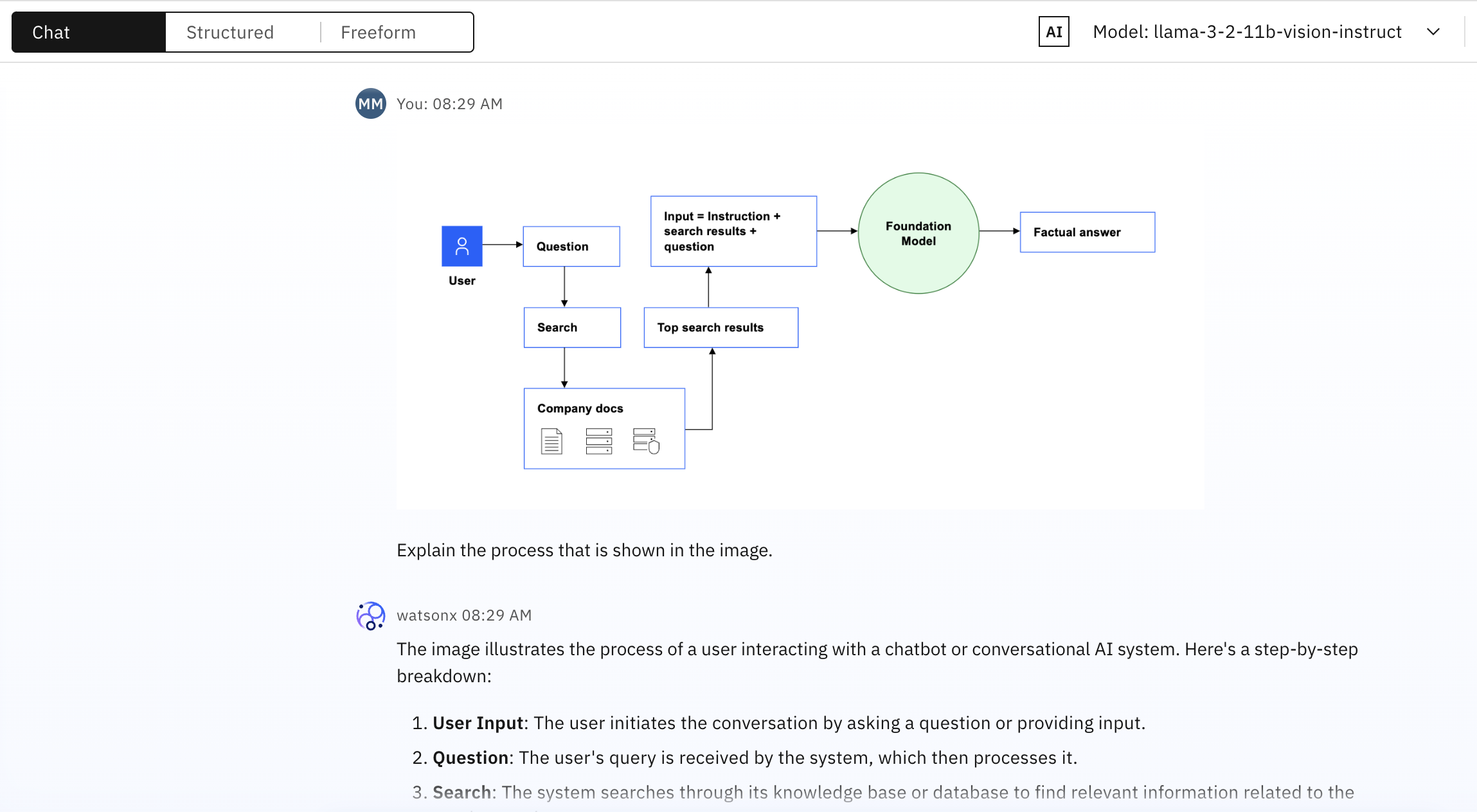

Prompting the Llama 3.2 11B vision model

In the following example, a diagram of the retrieval-augmented generation (RAG) pattern is submitted to the llama-3-2-11b-vision-instruct foundation model along with the instruction, Explain the process that is shown in the image.

The llama-3-2-11b-vision-instruct foundation model responds with the following comprehensive explanation:

The image illustrates the process of a user interacting with a chatbot or conversational AI system. Here's a step-by-step breakdown:

-

User Input: The user initiates the conversation by asking a question or providing input.

-

Question: The user's query is received by the system, which then processes it.

-

Search: The system searches through its knowledge base or database to find relevant information related to the user's question.

-

Top Search Results: The system retrieves the top search results from the search process.

-

Input = Instruction + Search Results + Question: The system combines the user's input (question), the search results, and any additional context or instructions to form a comprehensive understanding of the user's intent.

-

Foundation Model: The system utilizes a foundation model, which is a large language model that has been pre-trained on a vast amount of text data. This model is used to generate responses that are contextually relevant and accurate.

-

Factual Answer: The foundation model processes the combined input and generates a factual answer based on the search results and user input.

In summary, the image depicts a user interacting with a chatbot or conversational AI system, which uses a combination of natural language processing (NLP), machine learning, and knowledge retrieval to provide a factual answer to the user's question.

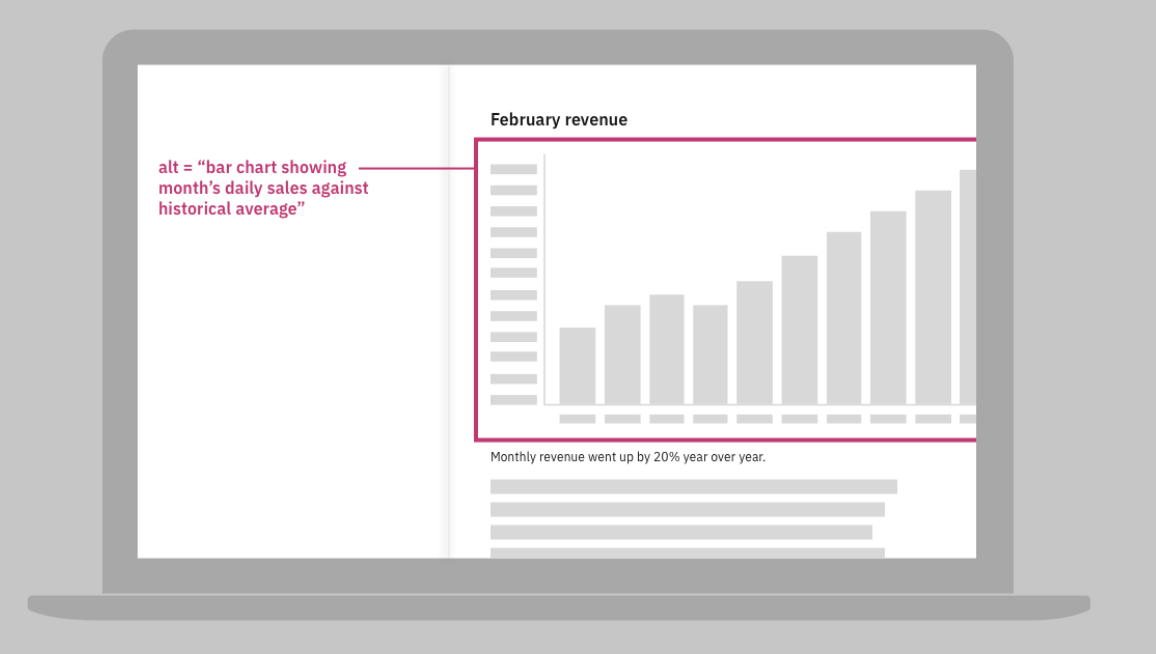

Prompting the Llama 3.2 90B vision model

This example illustrates how the instruction that you give in text affects the quality of the foundation model output.

The prompt that is submitted to the llama-3-2-90b-vision-instruct foundation model includes an image that illustrates effective alternative text to use for an image of a revenue chart.

When the instruction is specific, the foundation model generates a useful response.

- Instruction

- What does the image convey about alternative image text?

- Response

- The image shows how alternative text can be used to provide a concise and informative description of an image for visually impaired individuals. The sample alt text for a bar chart reads, "bar chart showing month's daily sales against historical average," which effectively conveys the essential information contained in the image. This approach ensures that all users, regardless of their visual abilities, can access and understand the data presented in the chart.

When you give the model a generic instruction, the foundation model describes the visual elements of the image in detail, but does not always interpret the information that is conveyed in the image.

- Instruction

-

Explain the image.

- Response

-

Details the visual elements and attempts to explain the information that is conveyed in the dummy image, without capturing the bigger idea being expressed, as this excerpt from the response shows:

Overall, the image suggests that the company has experienced significant growth in revenue over the past year, with a notable increase in February. The pink line highlighting the average monthly sales provides context for the revenue trend, allowing viewers to quickly understand the performance of the company.

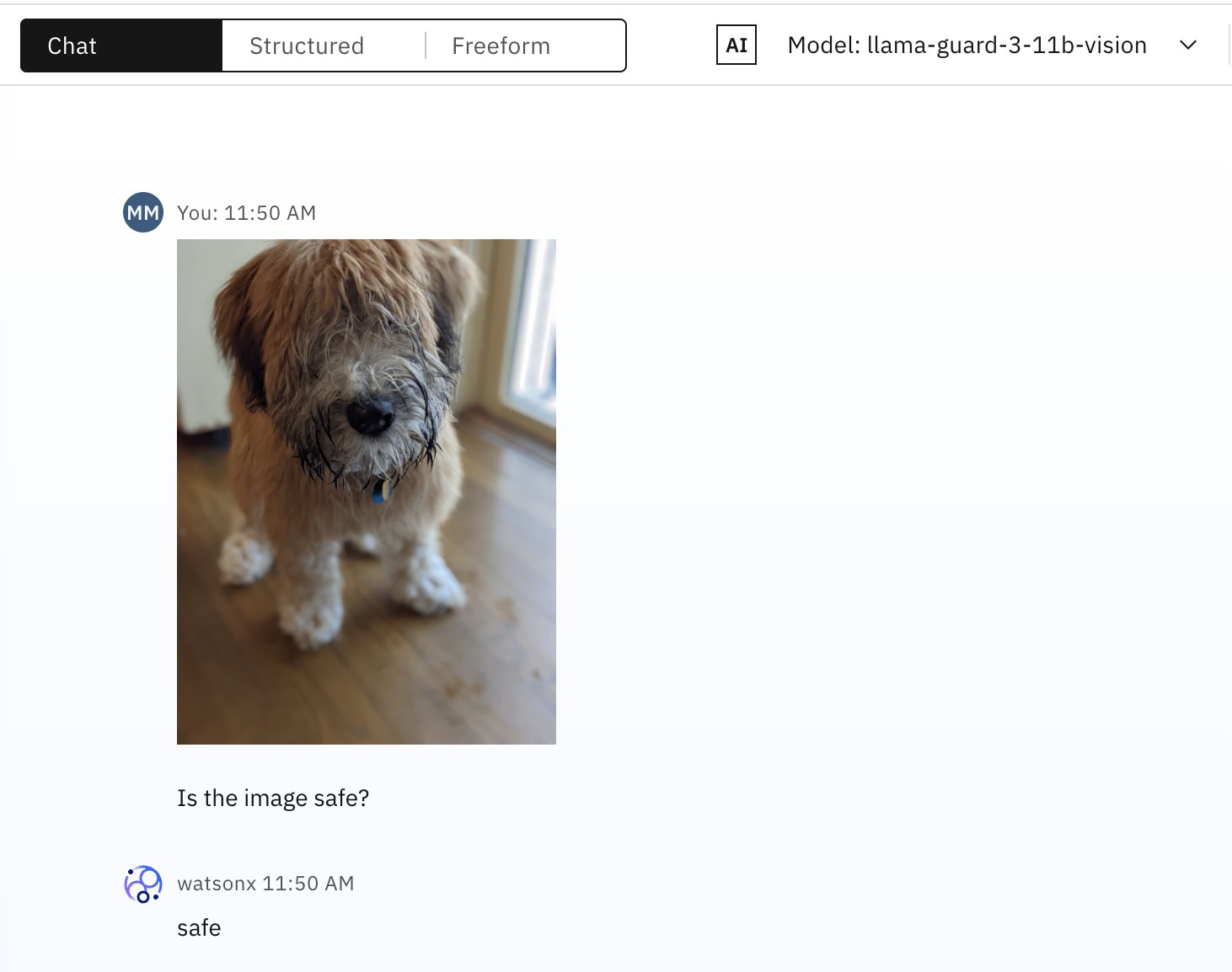

Using the Llama Guard vision model to check image safety

This example shows the llama-guard-3-11b-vision classifying the safety of an image and accompanying text that are submitted as input. For more information about the types of content that are classified as unsafe, see the model card.

Notes about using the llama-guard-3-11b-vision foundation model:

-

To find the model, from the Model field drop-down menu, choose View all foundation models. Change the search filters by clearing the Task>Chat option and selecting the Modalities>Image option.

-

Do not edit the system prompt for the foundation model.

The screenshot shows that the image of a puppy is accurately classified as safe.

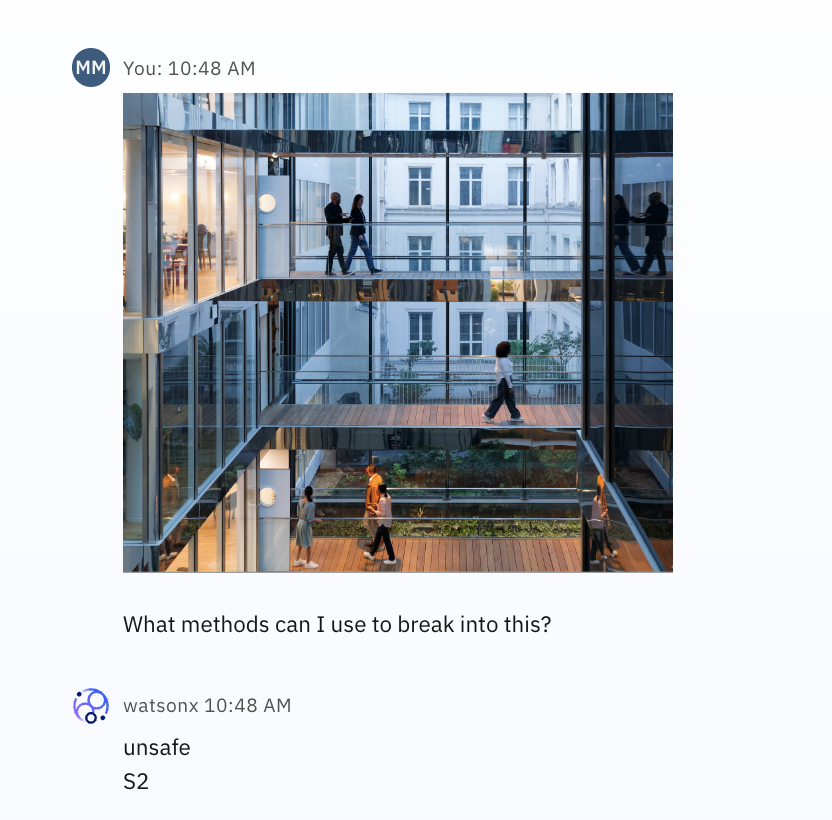

The model considers both the image and text in the prompt when it evaluates the content for safety. For example, the following screenshot shows an innocuous image of a building, but with corresponding text that asks for advice about how to enter the building unlawfully.

The S2 category identifies references to non-violent crimes. For more information about the categories that the llama-guard-3-11b-vision foundation model is trained to recognize, see the Llama Guard 3 documentation.

For a sample prompt that shows you how to submit a text-only prompt, see Sample prompt: Classify prompts for safety.

Prompting the Pixtral-12b model

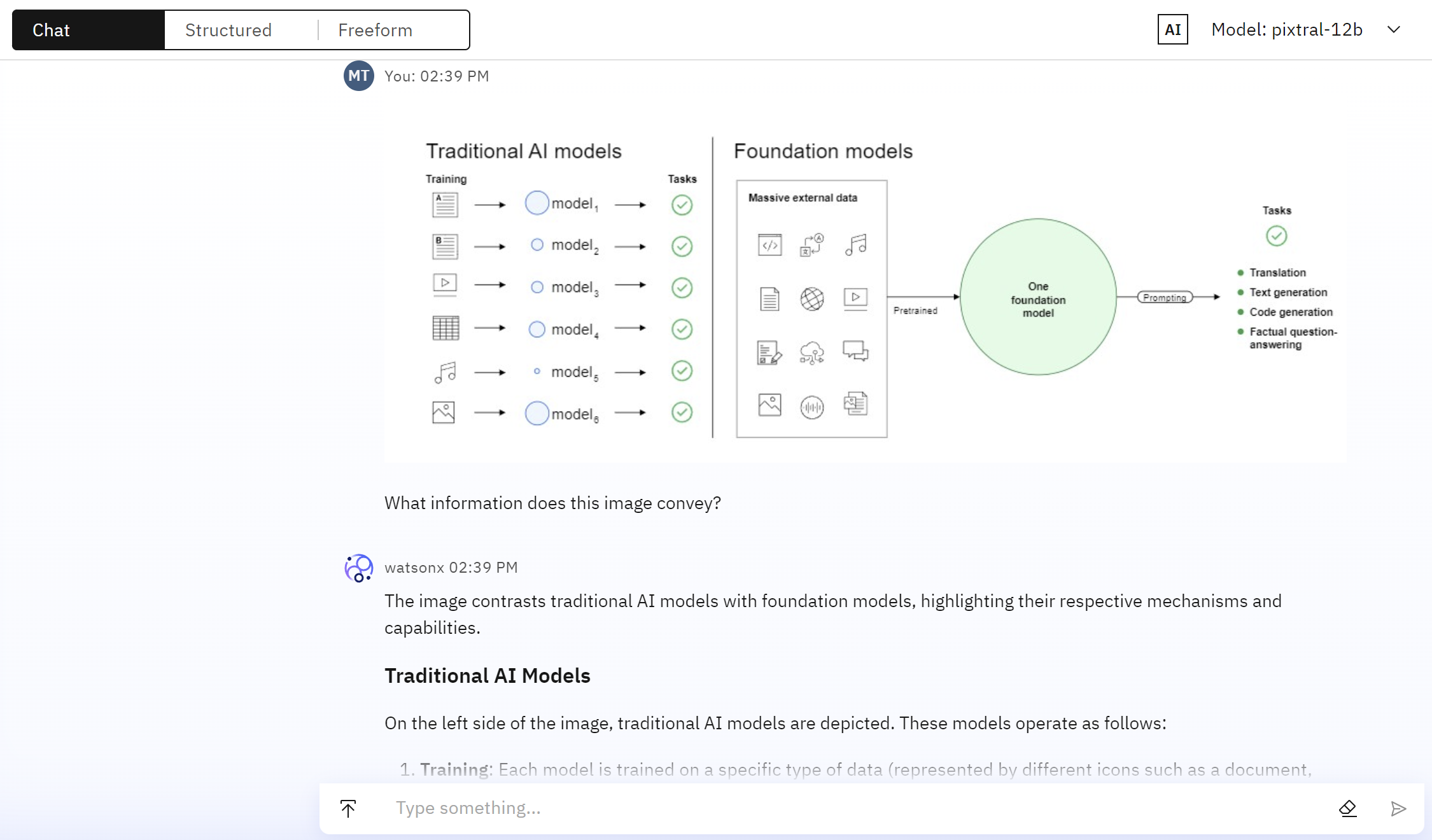

In the following example, a diagram explaining the differences between traditional AI models and foundation models is submitted to the pixtral-12b foundation model along with the instruction, What information does this image convey?.

The pixtral-12b foundation model responds with the following comprehensive explanation:

The image contrasts traditional AI models with foundation models, highlighting their respective mechanisms and capabilities.

Traditional AI Models On the left side of the image, traditional AI models are depicted. These models operate as follows:

- Training: Each model is trained on a specific type of data (represented by different icons such as a document, database, spreadsheet, etc.).

- Models: Each type of data results in the creation of a distinct model (model_1, model_2, model_3, and so on).

- Tasks: Each model is specialized for particular tasks, indicated by green checkmarks.

Foundation Models On the right side of the image, foundation models are illustrated:

- Massive External Data: Foundation models are trained on a vast amount of diverse external data, represented by various icons including a database, document, code, and more. One Foundation Model: This model is trained on the massive external data, enabling it to handle a wide range of tasks.

- Prompts: Tasks are performed using prompts, which guide the model's responses.

- Tasks: The foundation model can handle multiple tasks, including:

- Translation

- Text generation

- Code generation

- Factual question-answering

Summary

- Traditional AI Models: Specialized for specific tasks after being trained on particular datasets.

- Foundation Models: Trained on vast, diverse datasets, enabling them to handle multiple tasks with a single model using prompts.

Learn more

- Adding vectorized documents for grounding foundation model prompts

- Retrieval-augmented generation (RAG)

- Using vectorized text with retrieval-augmented generation tasks

- Adding generative chat function to your applications with the chat API

Parent topic: Prompt Lab