Experiment with inferencing the IBM granite-7b-lab foundation model in watsonx.ai to learn about its capabilities and find areas where you might want to contribute skills or knowledge.

The granite-7b-lab foundation model is a 7-billion-parameter large language model from IBM.

Like other foundation models in the IBM Granite series of foundation models, the model is pretrained on enterprise-specialized datasets, which means the model is familiar with specialized language from various industries and can more readily generate content that is grounded in relevant industry knowledge.

Unlike some of the foundation models in the IBM Granite series, the granite-7b-lab foundation model was tuned by using a novel alignment method from IBM Research called Large-scale Alignment for chatBots (LAB). For more information about the LAB alignment tuning method, see InstructLab foundation models.

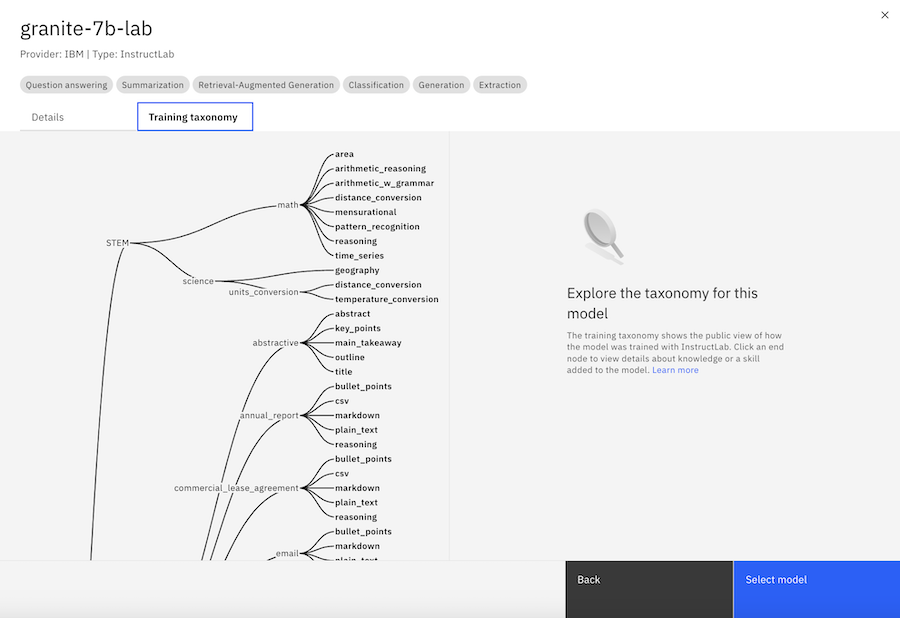

Explore the foundation model taxonomy

You can learn more about the capabilities of the granite-7b-lab foundation model by exploring the foundation model's taxonomy.

To access the taxonomy, complete the following steps:

- From the watsonx.ai Prompt Lab in chat mode, click the Model field, and then choose View all foundation models.

- Find and click the tile for the granite-7b-lab foundation model.

- Open the Training taxonomy tab of the model card.

- Explore the knowledge and skills in the taxonomy. Each end node of the taxonomy tree represents a skill that the model was trained to do. You can click a skill to see the seed examples that were used to generate the synthetic data that the model was trained on.

Prompting the granite-7b-lab foundation model

To get the best results from the granite-7b-lab foundation model, start by using recommended prompt structures when you craft foundation model input for different tasks. As you work with the model, you can tweak the model parameters and prompt format to find the best settings for your use case.

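The granite-7b-lab foundation model is optimized for the following uses:

- Classification
- Conversing (chat)
- Returning factual answers with the retrieval-augmented generation (RAG) pattern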

Classification

To use the granite-7b-lab foundation model to classify information, follow these recommendations.

Table 1 lists the recommended model parameters for prompting the granite-7b-lab foundation model for a classification task.

| Parameter | Recommended value or range | Explanation |
|---|---|---|
| Decoding | Greedy | Greedy decoding always chooses the most probable next token, which gives consistent output and is best when you want to classify text. |
| Repetition penalty | 1 | Use the lowest value. Repetition is expected. |
| Max tokens | Varies | Use a value that covers the number of tokens in your longest class label, such as 3 or 5. Limiting the tokens encourages the model to return only the appropriate class label and nothing else. |
| Stopping criteria | <\|endoftext\|> | Without the <\|endoftext\|> stop sequence, the model might generate more output than the class label. |
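The following Python sketch shows one way to keep the Table 1 settings together in code. It assumes the parameter names used by the watsonx.ai text generation API (`decoding_method`, `repetition_penalty`, `max_new_tokens`, `stop_sequences`); verify them against the API reference for your SDK version. The `truncate_at_stop` helper is hypothetical: it only imitates, on the client side, the effect of a stop sequence by keeping the text that comes before the first match.

```python
# Sketch: Table 1 classification settings as a parameter dict.
# ASSUMPTION: key names follow the watsonx.ai text generation API.
STOP_SEQUENCE = "<|endoftext|>"


def classification_params(max_label_tokens: int = 3) -> dict:
    """Return recommended settings for a classification prompt.

    max_label_tokens should cover the token count of the longest class label.
    """
    if max_label_tokens < 1:
        raise ValueError("max_label_tokens must be at least 1")
    return {
        "decoding_method": "greedy",  # always take the most probable token
        "repetition_penalty": 1.0,    # lowest value; repetition is expected
        "max_new_tokens": max_label_tokens,
        "stop_sequences": [STOP_SEQUENCE],
    }


def truncate_at_stop(text: str, stops: list) -> str:
    """Hypothetical helper: keep only the text before the earliest stop sequence."""
    cut = len(text)
    for stop in stops:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut].strip()


params = classification_params(3)
raw_output = "Positive<|endoftext|>Feedback: another review"
print(truncate_at_stop(raw_output, params["stop_sequences"]))  # Positive
```

Without the stop sequence, the text after the label would remain in the output, which is the extra output that Table 1 warns about.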

To prompt the granite-7b-lab foundation model for a classification task, try these steps:

- Identify the classes or classification labels that you want the model to assign to the input. Be sure to list these class labels in the instruction segment of your prompt.

For example, if you want to classify customer product reviews as positive or negative, you might define two class labels: Positive and Negative.

- Collect two or three representative examples of the type of input text that you want the model to classify.

- Work with the granite-7b-lab foundation model from the Prompt Lab in freeform mode so that you can structure your prompts.

- From the Model parameters panel, set the recommended model parameters from Table 1.

- In your prompt, clearly identify the system prompt, user input, and where the model's output should go.

For example, the following prompt structure was used when the granite-7b-lab foundation model was trained to classify text:

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

{INSTRUCTION} Your response should only include the answer. Do not provide any further explanation.

Here are some examples, complete the last one:

{INPUT_LABEL}:

{ICL_INPUT_1}

{OUTPUT_LABEL}:

{ICL_OUTPUT_1}

{INPUT_LABEL}:

{ICL_INPUT_2}

{OUTPUT_LABEL}:

{ICL_OUTPUT_2}

{INPUT_LABEL}:

{TEST_INPUT}

{OUTPUT_LABEL}:

<|assistant|>

You can use a similar structure to leverage the model's training. Simply replace the placeholder variables in the prompt template.

Table 3a: Classification template placeholder variables

| Placeholder variable | Description | Examples |
|---|---|---|
| {INSTRUCTION} | Description of the task. Include a list of the classes that you want the model to assign to the input. | For each product review, indicate whether the review is Positive or Negative. |
| {INPUT_LABEL} | Short label for the text to be classified. | Input, Customer review, Feedback, Comment |
| {OUTPUT_LABEL} | Short label that represents the classification value. | Class |
| {ICL_INPUT_N} | Optional. Examples of input text to be classified. Add examples when you want to use a few-shot prompt to support in-context learning. | The service representative did not listen to a word I said. It was a waste of my time. |
| {ICL_OUTPUT_N} | Example outputs with class labels assigned to the corresponding input text examples. | Positive, Negative |
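To fill in the template programmatically, a small Python function like the following sketch could assemble the prompt from the Table 3a placeholders. The function name `build_classification_prompt` is hypothetical, and the newline placement between segments is an assumption, because the exact whitespace of the training template is not shown here.

```python
from typing import List, Tuple

SYSTEM_PROMPT = (
    "You are an AI language model developed by IBM Research. You are a cautious "
    "assistant. You carefully follow instructions. You are helpful and harmless "
    "and you follow ethical guidelines and promote positive behavior."
)


def build_classification_prompt(instruction: str, input_label: str,
                                output_label: str,
                                examples: List[Tuple[str, str]],
                                test_input: str) -> str:
    """Fill the classification template; examples are (input, class) pairs."""
    lines = [
        "<|system|>",
        SYSTEM_PROMPT,
        "<|user|>",
        f"{instruction} Your response should only include the answer. "
        "Do not provide any further explanation.",
        "",
        "Here are some examples, complete the last one:",
    ]
    for example_input, example_output in examples:  # few-shot ICL pairs
        lines += [f"{input_label}:", example_input,
                  f"{output_label}:", example_output, ""]
    # The last input has an empty output slot for the model to fill.
    lines += [f"{input_label}:", test_input, f"{output_label}:", "<|assistant|>"]
    return "\n".join(lines)


prompt = build_classification_prompt(
    "For each feedback, specify whether the content is Positive or Negative.",
    "Feedback",
    "Class",
    [("The service representative did not listen to a word I said. "
      "It was a waste of my time.", "Negative")],
    "Carlo was so helpful and pleasant.",
)
print(prompt)
```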

Classification prompt example

The following prompt classifies feedback that customers share about support center personnel. The following model parameters are specified:

- Stop sequence: <|endoftext|>
- Max tokens: 3

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

For each feedback, specify whether the content is Positive or Negative. Your response should only include the answer. Do not provide any further explanation.

Here are some examples, complete the last one:

Feedback:

Carol, the service rep was so helpful. She answered all of my questions and explained things beautifully.

Class:

Positive

Feedback:

The service representative did not listen to a word I said. It was a waste of my time.

Class:

Negative

Feedback:

Carlo was so helpful and pleasant. He was able to solve a problem that I've been having with my software for weeks now.

Class:

<|assistant|>

The output that is generated by the granite-7b-lab foundation model when this prompt is submitted is Positive.

Conversing with granite-7b-lab

To get the best results when chatting with the granite-7b-lab foundation model, first follow these recommendations and then experiment to get the results that you want.

Table 2 lists the recommended model parameters for prompting the granite-7b-lab foundation model for a conversational task.

| Parameter | Recommended value or range | Explanation |
|---|---|---|
| Decoding | Sampling | Sampling decoding generates more creative text, which helps to add interest and personality to responses from the chatbot. However, it can also lead to unpredictable output. You can control the degree of creativity with the next set of model parameters. |
| Top P, Top K, and Temperature | Top P: 0.85, Top K: 50, Temperature: 0.7 | These sampling decoding parameters work together. The model selects a subset of tokens from which to choose the next token to include in the output. The subset is limited to the 50 most-probable tokens (Top K) and to the most-probable tokens whose probability scores, when summed, reach a total of 0.85 (Top P). The relatively low temperature value of 0.7 amplifies the differences in token scores, so the tokens that make the cut are typically the most probable ones. To increase the creativity and diversity of responses, increase the temperature value. If the model hallucinates, lower the temperature value. |
| Repetition penalty | 1.05 | A value slightly above 1 mildly penalizes repeated tokens, which keeps the chatbot from sounding robotic by repeating words or phrases. |
| Random seed | Not set | Only specify a value for this setting if you are testing something and want to remove randomness as a factor from the test. For example, if you want to see how the temperature affects the output, submit the same input repeatedly and change only the temperature value each time. Also specify a number, such as 5, as the random seed each time to eliminate random token choices from also affecting the model output. The number itself doesn't matter, as long as you specify the same number each time. |
| Stop sequence | <\|endoftext\|> | Without the <\|endoftext\|> stop sequence, the model might add more explanatory information than is necessary. |
| Max tokens | 900 | The maximum context window length for the granite-7b-lab foundation model, which includes both input and output tokens, is 8,192. For more information about tokens, see Tokens and tokenization. With each follow-up question, the conversation history is included as part of the model prompt. The granite-7b-lab foundation model can typically sustain a conversation for up to 5 turns or until the input reaches 4,000 tokens in length. |
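To make the interaction of Top K, Top P, and temperature more concrete, the following Python sketch filters a toy next-token distribution. It applies temperature, then Top K, then Top P, which is a common ordering in open-source decoders; the ordering that watsonx.ai uses internally is an assumption here, and the tokens and scores are made up for illustration. The seeded `random.Random` instance shows why a fixed random seed makes repeated runs pick the same token.

```python
import math
import random
from typing import Dict, Optional


def sampling_candidates(logits: Dict[str, float], temperature: float = 0.7,
                        top_k: int = 50, top_p: float = 0.85) -> Dict[str, float]:
    """Return the candidate tokens, renormalized, after temperature, Top K, and Top P."""
    # Dividing by a temperature below 1 widens the gaps between scores.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}  # stable softmax
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)
    ranked = ranked[:top_k]  # Top K: keep at most k of the most probable tokens
    kept, cumulative = [], 0.0
    for tok, prob in ranked:  # Top P: stop once the summed probability reaches p
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}


def sample_token(candidates: Dict[str, float], seed: Optional[int] = None) -> str:
    """Pick one candidate; the same seed gives the same pick for the same input."""
    rng = random.Random(seed)
    tokens = list(candidates)
    return rng.choices(tokens, weights=[candidates[t] for t in tokens], k=1)[0]


# Made-up scores for the token after "The capital of Massachusetts is".
logits = {"Boston": 3.0, "Cambridge": 1.0, "Springfield": 0.5, "Salem": 0.0}
print(list(sampling_candidates(logits)))                   # ['Boston']
print(list(sampling_candidates(logits, temperature=1.5)))  # ['Boston', 'Cambridge', 'Springfield']
print(sample_token(sampling_candidates(logits, temperature=1.5), seed=5))
```

With the recommended temperature of 0.7, only one token survives the Top P cut in this toy case. Raising the temperature to 1.5 flattens the distribution so that three tokens remain, which is the extra creativity, and the extra risk, that the table describes.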

For more information about the model parameters, see Model parameters for prompting.
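The Table 2 settings can also be kept together in code. This Python sketch assumes the watsonx.ai parameter names (`decoding_method`, `top_p`, `top_k`, `temperature`, `repetition_penalty`, `stop_sequences`, `max_new_tokens`, `random_seed`); confirm them against the API reference. It also works through the context-window arithmetic: with Max tokens set to 900, at most 8,192 - 900 = 7,292 tokens remain for the input, including the conversation history.

```python
from typing import Optional

CONTEXT_WINDOW = 8192  # granite-7b-lab: input plus output tokens


def chat_params(random_seed: Optional[int] = None) -> dict:
    """Recommended chat settings. ASSUMPTION: watsonx.ai parameter names."""
    params = {
        "decoding_method": "sample",
        "top_p": 0.85,
        "top_k": 50,
        "temperature": 0.7,
        "repetition_penalty": 1.05,
        "stop_sequences": ["<|endoftext|>"],
        "max_new_tokens": 900,
    }
    if random_seed is not None:
        # Set a seed only for controlled tests, such as varying one parameter.
        params["random_seed"] = random_seed
    return params


def input_budget(max_new_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Largest input, in tokens, that still leaves room for max_new_tokens."""
    if max_new_tokens >= context_window:
        raise ValueError("max_new_tokens must be smaller than the context window")
    return context_window - max_new_tokens


print(input_budget(chat_params()["max_new_tokens"]))  # 7292
```

The 7,292-token figure is a hard ceiling. In practice, Table 2 notes that the model typically sustains a conversation for up to 5 turns or until the input reaches about 4,000 tokens, well below that ceiling.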

To prompt the granite-7b-lab foundation model for a chat task, try these steps:

- From the Prompt Lab in chat mode, choose the granite-7b-lab foundation model.

Chat mode has default prompt parameter values that are optimized for conversational exchanges, including a higher Max tokens value.

- From the Model parameters panel, apply the recommended model parameter values from Table 2.

- In chat mode, you can submit user input without formatting the input text.

Chat mode applies system prompt text for you. You can click the text icon to see how your text is formatted.

- To submit the same prompt in freeform mode, you must set up the system prompt.

Add instructions. For example, the following instruction text was used to train the model, and therefore is familiar to the model.

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

Start a conversation.

The optimal structure for a prompt that is used for a chat task follows. The prompt includes syntax that identifies the following segments of the prompt:

- <|system|>: Identifies the instruction, which is also known as the system prompt for the foundation model.
- <|user|>: The query text to be answered.
- <|assistant|>: A cue at the end of the prompt that indicates that a generated answer is expected.

When you submit prompts from the Prompt Lab in freeform mode, use the expected prompt format.

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

{PROMPT}

<|assistant|>

For example:

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

What is the capital of Massachusetts?

<|assistant|>

- Ask follow-up questions to keep the conversation going.

The optimal structure for a prompt that is used for a chat with multiple dialog turns follows. If you submit prompts from the Prompt Lab in freeform mode, use this prompt format.

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

{ROUND1_PROMPT}

<|assistant|>

{MODEL_RESPONSE}

<|user|>

{ROUND2_PROMPT}

<|assistant|>

For example:

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

What is the capital of Massachusetts?

<|assistant|>

The capital of Massachusetts is Boston. Boston is not only the largest city in Massachusetts but also one of the oldest cities in the United States, with a rich history and vibrant culture. It is home to numerous universities, hospitals, and technology companies, making it a significant center for education, biotechnology, finance, and tourism. If you have any specific questions about Boston or Massachusetts, feel free to ask!

<|user|>

What US cities are older?

<|assistant|>

There are several cities in the United States that are older than Boston and even older than the country itself! Some of these historical gems include:

1. St. Augustine, Florida: Established in 1565, St. Augustine is the oldest continuously inhabited European-established settlement within the borders of the contiguous United States.
2. Jamestown, Virginia: Founded in 1607, Jamestown was the first permanent English settlement in North America.
3. Plymouth, Massachusetts: Established in 1620, Plymouth ...
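If you build freeform prompts in code rather than typing them, a helper like the following Python sketch could assemble the multi-turn format. The function `build_chat_prompt` is hypothetical, the newline separators are an assumption, and dropping the oldest turns to stay within five user turns is one possible policy based on the guidance in Table 2, not a documented feature of the Prompt Lab.

```python
from typing import List, Tuple

SYSTEM_PROMPT = (
    "You are an AI language model developed by IBM Research. You are a cautious "
    "assistant. You carefully follow instructions. You are helpful and harmless "
    "and you follow ethical guidelines and promote positive behavior."
)


def build_chat_prompt(history: List[Tuple[str, str]], new_prompt: str,
                      max_turns: int = 5) -> str:
    """Format a chat prompt for granite-7b-lab.

    history holds earlier (user, assistant) exchanges, oldest first. Only the
    most recent exchanges are kept so that, counting new_prompt, at most
    max_turns user turns are sent.
    """
    if max_turns < 1:
        raise ValueError("max_turns must be at least 1")
    recent = history[-(max_turns - 1):] if max_turns > 1 else []
    parts = ["<|system|>", SYSTEM_PROMPT]
    for user_text, model_text in recent:
        parts += ["<|user|>", user_text, "<|assistant|>", model_text]
    # End with the assistant cue so that the model generates the next answer.
    parts += ["<|user|>", new_prompt, "<|assistant|>"]
    return "\n".join(parts)


history = [("What is the capital of Massachusetts?",
            "The capital of Massachusetts is Boston.")]
print(build_chat_prompt(history, "What US cities are older?"))
```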

Returning factual answers with the RAG pattern

To guide the granite-7b-lab foundation model to return factual answers, use the retrieval-augmented generation pattern. Retrieval-augmented generation grounds the input that you submit to the model with factual information about the topic to be discussed. For more information, see Retrieval-augmented generation (RAG).

When you want to return factual answers from the granite-7b-lab foundation model, follow these recommendations.

Table 3 lists the recommended model parameters for prompting the granite-7b-lab foundation model for a retrieval-augmented generation task.

| Parameter | Recommended value or range | Explanation |
|---|---|---|
| Decoding | Greedy | Greedy decoding always chooses the most probable next token, which is best when you want factual answers. |
| Repetition penalty | 1 | Use the lowest value. Repetition is acceptable when the goal is factual answers. |
| Max tokens | 500 | This value gives the model room to answer the question as completely as possible. Remember that the maximum context window length for the granite-7b-lab foundation model, which includes both input and output tokens, is 8,192. Keep your input, including the document that you add to ground the prompt, short enough that the input plus the output tokens stays within that limit. For more information about tokens, see Tokens and tokenization. |
| Stopping criteria | <\|endoftext\|> | The granite-7b-lab foundation model emits a special token named <\|endoftext\|> at the end of each response. When some generative models finish a response in fewer tokens than the maximum number allowed, they can continue by repeating patterns from the input. Specifying <\|endoftext\|> as the stop sequence ends generation when the model signals that its response is complete, which prevents such repetition. |
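The Table 3 settings and the context-window check can be sketched in Python as follows. The parameter names are assumed to match the watsonx.ai text generation API, and the prompt token count is expected to come from the model's tokenizer; this sketch does not estimate it.

```python
CONTEXT_WINDOW = 8192  # granite-7b-lab: input plus output tokens

# ASSUMPTION: key names follow the watsonx.ai text generation API.
RAG_PARAMS = {
    "decoding_method": "greedy",
    "repetition_penalty": 1.0,
    "max_new_tokens": 500,
    "stop_sequences": ["<|endoftext|>"],
}


def fits_context(prompt_tokens: int,
                 max_new_tokens: int = RAG_PARAMS["max_new_tokens"],
                 context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the grounded prompt plus the requested output fits the window."""
    return prompt_tokens + max_new_tokens <= context_window


print(fits_context(7000))  # True: 7,000 + 500 = 7,500 tokens
print(fits_context(7700))  # False: 7,700 + 500 = 8,200 tokens
```

With these settings, the prompt, including the system prompt, instructions, documents, and question, can use at most 7,692 tokens.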

For more information about the model parameters, see Model parameters for prompting.

To prompt the granite-7b-lab foundation model for a retrieval-augmented generation task, try these steps:

- Find reliable resources with factual information about the topic that you want the model to discuss and that you have permission to use. Copy an excerpt of the document or documents to a text editor or other tool where you can access it later.

For example, the resource might be product information from your own company website or product documentation.

- From the Prompt Lab, open freeform mode so that you can structure your prompts. Choose the granite-7b-lab foundation model.

- From the Model parameters panel, set the recommended model parameters from Table 3.

- In your prompt, clearly define the system prompt, user input, and where the model's output should go.

For example, the following prompt structure can help the granite-7b-lab foundation model to return relevant information.

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

You are an AI language model designed to function as a specialized Retrieval Augmented Generation (RAG) assistant. When generating responses, prioritize correctness, i.e., ensure that your response is grounded in context and user query. Always make sure that your response is relevant to the question.

Answer Length: {ANSWER_LENGTH}

[Document]

{DOCUMENT1_TITLE}

{DOCUMENT1_CONTENT}

[End]

[Document]

{DOCUMENT2_TITLE}

{DOCUMENT2_CONTENT}

[End]

[Document]

{DOCUMENT3_TITLE}

{DOCUMENT3_CONTENT}

[End]

{QUERY}

<|assistant|>

Note: The start and end of the document content are denoted by the special tags [Document] and [End]. Use a similar syntax if you want to add special tags that identify content types or subsection headers in your prompts. When the granite-7b-lab foundation model was created, it was trained to handle the following special tags:

- <|system|>: Identifies the instruction, which is also known as the system prompt for the foundation model.
- <|user|>: The query text to be answered.
- <|assistant|>: A cue at the end of the prompt that indicates that a generated answer is expected.

Do not use the same <|tagname|> syntax for your custom tags or you might confuse the model.

- If you copy this prompt template, replace the placeholder variables after you paste it into the Prompt Lab editor.

Table 4: RAG template placeholder variables

| Placeholder variable | Description | Examples |
|---|---|---|
| {ANSWER_LENGTH} | Optional. Defines the expected response length for the answer. | Options include (from shortest to longest answers): single word, concise, narrative |
| {DOCUMENTn_TITLE} | Title of the document from which the excerpt with factual information is taken. You can include content from more than one document. | Product Brochure |
| {DOCUMENTn_CONTENT} | Text excerpt with the factual information that you want the model to be able to discuss knowledgeably. | Text from a marketing brochure, product documentation, company website, or other trusted resource. |
| {QUERY} | Question to be answered factually. | A question about the topic that is discussed in the document. |

Tip: Alternatively, you can define and use a prompt variable for the document so that the prompt can be reused and the content can be replaced dynamically each time. For more information, see Building reusable prompts.
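A reusable RAG prompt can also be assembled in code. The following Python sketch fills the template with any number of documents and rejects document text that contains the <|tagname|> syntax, following the advice not to reuse that syntax for custom tags. The function name `build_rag_prompt`, the newline separators, and the decision to raise an error (rather than, say, escaping the text) are assumptions for illustration.

```python
import re
from typing import List, Optional, Tuple

SYSTEM_PROMPT = (
    "You are an AI language model developed by IBM Research. You are a cautious "
    "assistant. You carefully follow instructions. You are helpful and harmless "
    "and you follow ethical guidelines and promote positive behavior."
)
RAG_INSTRUCTION = (
    "You are an AI language model designed to function as a specialized "
    "Retrieval Augmented Generation (RAG) assistant. When generating responses, "
    "prioritize correctness, i.e., ensure that your response is grounded in "
    "context and user query. Always make sure that your response is relevant "
    "to the question."
)
ANSWER_LENGTHS = ("single word", "concise", "narrative")
RESERVED_TAG = re.compile(r"<\|\w+\|>")  # matches <|system|>, <|user|>, and so on


def build_rag_prompt(documents: List[Tuple[str, str]], query: str,
                     answer_length: Optional[str] = None) -> str:
    """documents holds (title, content) pairs, each wrapped in [Document]...[End]."""
    if not documents:
        raise ValueError("provide at least one grounding document")
    if answer_length is not None and answer_length not in ANSWER_LENGTHS:
        raise ValueError(f"answer_length must be one of {ANSWER_LENGTHS}")
    parts = ["<|system|>", SYSTEM_PROMPT, "<|user|>", RAG_INSTRUCTION]
    if answer_length is not None:
        parts.append(f"Answer Length: {answer_length}")
    for title, content in documents:
        if RESERVED_TAG.search(title) or RESERVED_TAG.search(content):
            raise ValueError(f"document {title!r} uses the reserved <|tagname|> syntax")
        parts += ["[Document]", title, content, "[End]"]
    parts += [query, "<|assistant|>"]
    return "\n".join(parts)


prompt = build_rag_prompt(
    [("How prompt-tuning works",
      "Foundation models are sensitive to the input that you give them.")],
    "How does prompt tuning work?",
    answer_length="concise",
)
print(prompt)
```

Because the document list can grow, check the token count of the finished prompt so that the documents, the question, and the output tokens stay within the 8,192-token context window.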

Retrieval-augmented generation prompt example

The following prompt uses the granite-7b-lab foundation model to answer questions about prompt tuning.

Note: The document content is taken from the Methods for tuning foundation models topic.

<|system|>

You are an AI language model developed by IBM Research. You are a cautious assistant. You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

<|user|>

You are an AI language model designed to function as a specialized Retrieval Augmented Generation (RAG) assistant. When generating responses, prioritize correctness, i.e., ensure that your response is grounded in context and user query. Always make sure that your response is relevant to the question.

Answer length: concise

[Document]

How prompt-tuning works

Foundation models are sensitive to the input that you give them. Your input, or how you prompt the model, can introduce context that the model will use to tailor its generated output. Prompt engineering to find the right prompt often works well. However, it can be time-consuming, error-prone, and its effectiveness can be restricted by the context window length that is allowed by the underlying model.

Prompt-tuning a model in the Tuning Studio applies machine learning to the task of prompt engineering. Instead of adding words to the input itself, prompt-tuning is a method for finding a sequence of values that, when added as a prefix to the input text, improve the model's ability to generate the output you want. This sequence of values is called a prompt vector.

Normally, words in the prompt are vectorized by the model. Vectorization is the process of converting text to tokens, and then to numbers defined by the model's tokenizer to identify the tokens. Lastly, the token IDs are encoded, meaning they are converted into a vector representation, which is the input format that is expected by the embedding layer of the model. Prompt-tuning bypasses the model's text-vectorization process and instead crafts a prompt vector directly. This changeable prompt vector is concatenated to the vectorized input text and the two are passed as one input to the embedding layer of the model. Values from this crafted prompt vector affect the word embedding weights that are set by the model and influence the words that the model chooses to add to the output.

To find the best values for the prompt vector, you run a tuning experiment. You demonstrate the type of output that you want for a corresponding input by providing the model with input and output example pairs in training data. With each training run of the experiment, the generated output is compared to the training data output. Based on what it learns from differences between the two, the experiment adjusts the values in the prompt vector. After many runs through the training data, the model finds the prompt vector that works best.

[End]

How does prompt tuning work?

<|assistant|>

The granite-7b-lab foundation model generates an accurate summary of how prompt tuning works that is based on information from the document content that was provided with the input.

The output looks something like this:

Prompt tuning is a method for improving a model's ability to generate specific outputs by adjusting a sequence of values called a prompt vector. Instead of directly adding words to the input, prompt tuning crafts a prompt vector that directly influences the word embedding weights set by the model. This is achieved by vectorizing input text and encoding token IDs into a vector representation, which is then concatenated with the crafted prompt vector and passed as one input to the model's embedding layer. The experiment adjusts the values in the prompt vector based on the differences between the generated output and the desired output in training data examples. Over many runs through the training data, the model finds the prompt vector that works best.

Learn more

To learn more about the granite-7b-lab foundation model, read the following resources:
