Last updated: Dec 18, 2024

After you deploy a custom foundation model, you can prompt it by using Prompt Lab or the watsonx.ai REST API.

Note: Only members of the project or space where the custom foundation model is deployed can prompt it. The model is not available to users of other projects or spaces.

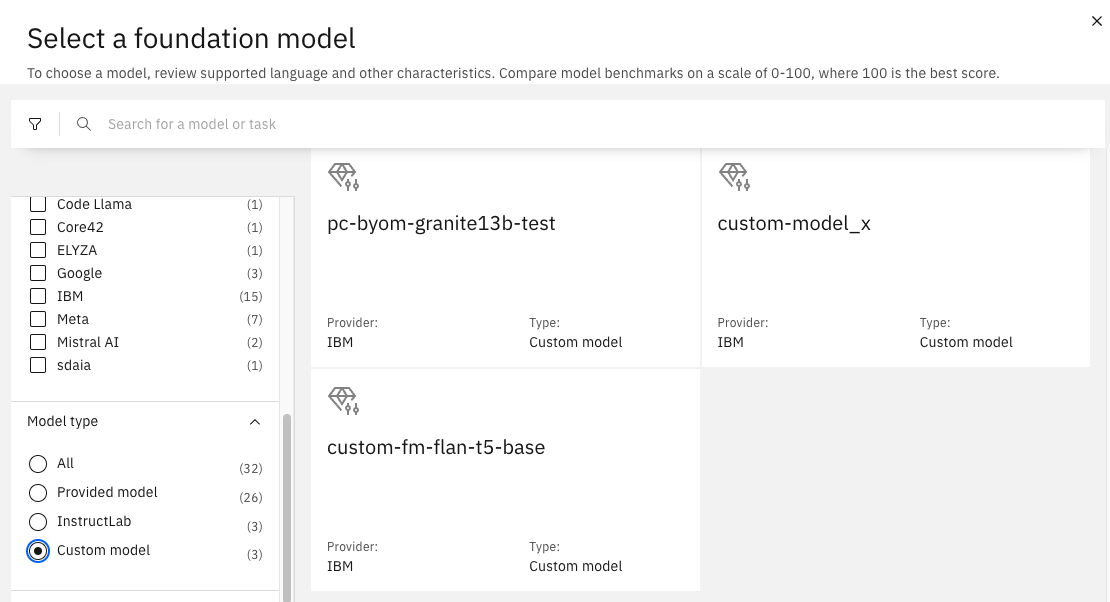

In Prompt Lab, find your custom model in the list of available foundation models. You can then work with the model as you do with foundation models that are provided with watsonx.ai. The simplest way to find your deployed models is to use the filter:

After selecting the model, you can:

- Use Prompt Lab to create and review prompts for your custom foundation model

Prompting a custom model by using the API

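Refer to these examples to prompt the custom foundation model by using the REST API. The first example returns the generated text in a single response; the second calls the generation_stream endpoint to stream the output as it is generated. In both examples, replace the placeholders with your cloud hostname and deployment ID, and set $TOKEN to a valid bearer token.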

    curl -X POST "https://<your cloud hostname>/ml/v1/deployments/<your deployment ID>/text/generation?version=2024-01-29" \
      -H "Authorization: Bearer $TOKEN" \
      -H "content-type: application/json" \
      --data '{
        "input": "Hello, what is your name",
        "parameters": {
          "max_new_tokens": 200,
          "min_new_tokens": 20
        }
      }'
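If you send prompts from a script, you might wrap the request above in small shell functions so that the hostname, deployment ID, and generation parameters are set in one place. This is a minimal sketch, not a watsonx.ai tool: the function names generation_url, generation_body, and prompt_custom_model and the variables WATSONX_HOST and DEPLOYMENT_ID are hypothetical, and TOKEN is assumed to hold a valid bearer token as in the example above.

```shell
#!/usr/bin/env bash
# Minimal sketch with hypothetical helper names (not part of watsonx.ai).
# Assumed variables: WATSONX_HOST (cloud hostname), DEPLOYMENT_ID, TOKEN (bearer token).

API_VERSION="2024-01-29"

# Print the endpoint URL; pass "generation" or "generation_stream" as $1.
generation_url() {
  printf 'https://%s/ml/v1/deployments/%s/text/%s?version=%s\n' \
    "$WATSONX_HOST" "$DEPLOYMENT_ID" "$1" "$API_VERSION"
}

# Print the JSON request body.
# $1 = prompt, $2 = max_new_tokens, $3 = min_new_tokens.
# The prompt is inserted verbatim: it must not contain double quotes,
# backslashes, or newlines, because they are not JSON-escaped here.
generation_body() {
  printf '{"input": "%s", "parameters": {"max_new_tokens": %d, "min_new_tokens": %d}}\n' \
    "$1" "$2" "$3"
}

# Send a prompt to the deployment and print the raw JSON response.
# Uses the same token limits as the example above: 200 max, 20 min.
prompt_custom_model() {
  curl -s -X POST "$(generation_url generation)" \
    -H "Authorization: Bearer $TOKEN" \
    -H "content-type: application/json" \
    --data "$(generation_body "$1" 200 20)"
}
```

For example, after you set the three variables, running prompt_custom_model "Hello, what is your name" prints the JSON response. The body is built with printf to avoid extra dependencies, which is why prompts containing quotes, backslashes, or newlines must be escaped first; a JSON tool such as jq handles that more robustly.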

    curl -X POST "https://<your cloud hostname>/ml/v1/deployments/<your deployment ID>/text/generation_stream?version=2024-01-29" \
      -H "Authorization: Bearer $TOKEN" \
      -H "content-type: application/json" \
      --data '{
        "input": "Hello, what is your name",
        "parameters": {
          "max_new_tokens": 200,
          "min_new_tokens": 20
        }
      }'
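When you call the streaming endpoint from a terminal, curl's -N (--no-buffer) option prints output as it arrives instead of buffering it. The sketch below assumes, without confirmation from this page, that the endpoint returns server-sent events in which each chunk of generated text is carried on a line that starts with "data:" followed by JSON. The helper names extract_sse_data and stream_custom_model and the variables WATSONX_HOST, DEPLOYMENT_ID, and TOKEN are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch, assuming the stream is server-sent events (SSE) whose
# "data:" lines carry one JSON object each. Helper names are hypothetical.

# Read an SSE stream on stdin and print only the payload of each "data:" line.
# Lines are handled one at a time with the read builtin, so output is not
# held back by pipe buffering.
extract_sse_data() {
  local line
  while IFS= read -r line || [ -n "$line" ]; do
    line=${line%$'\r'}              # drop a trailing carriage return (CRLF streams)
    case "$line" in
      data:*)
        line=${line#data:}          # strip the field name
        printf '%s\n' "${line# }"   # strip one optional leading space
        ;;
    esac
  done
}

# Stream a response from the deployment and print each JSON chunk on its own line.
# Assumed variables: WATSONX_HOST, DEPLOYMENT_ID, TOKEN.
stream_custom_model() {
  curl -s -N -X POST \
    "https://${WATSONX_HOST}/ml/v1/deployments/${DEPLOYMENT_ID}/text/generation_stream?version=2024-01-29" \
    -H "Authorization: Bearer $TOKEN" \
    -H "content-type: application/json" \
    --data '{"input": "Hello, what is your name", "parameters": {"max_new_tokens": 200, "min_new_tokens": 20}}' \
    | extract_sse_data
}
```

With the three variables set, running stream_custom_model prints one JSON object per received chunk. Reading the stream with the shell's built-in read, rather than piping through filters such as tr or sed, avoids the extra output buffering those tools can add when they write to a pipe.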
