After you upload your custom foundation model to cloud object storage, create a connection to the model, and then use that connection to create a model asset in a project or deployment space.

To create a model asset, first add a connection to the model. If you want to test your custom foundation model in a project first (for example, by evaluating it in a Jupyter notebook), add the custom foundation model asset to a project and then promote it to a space.

After you add the model asset, you can deploy it and use Prompt Lab to run inference on it.

- If you upload your model to remote cloud storage, you must create a connection that is based on your personal credentials, because only connections that use personal credentials are allowed with remote cloud storage. As a result, other users of the same deployment space cannot access the model content, but they can run inference on the model deployments. Create the connection by using your access key and your secret access key. For information on how to enable personal credentials for your account, see Account settings.

Before you begin

You must enable task credentials before you can deploy a custom foundation model. For more information, see Adding task credentials.

Adding a connection to your model

You can add a connection to your model from either a deployment space or a project. To add a connection programmatically, see Creating a connection by using the Python client or Creating a connection by using the API.

To use the watsonx.ai API, you need a bearer token. For more information, see Credentials for programmatic access.

Adding a connection to the model from your deployment space

To add a connection to the model from your deployment space:

- Go to the Assets tab and click Import assets.

- Select Data assets and then follow the steps that show on the screen.

For Credentials, select Access key and Secret access key. If you select any other option, your deployment won't work.

Adding a connection to the model from your project

To add a connection to the model from your project:

- Go to the Assets tab and click New asset.

- Select Connect to a data source and then follow the steps that show on the screen.

For Credentials, select Access key and Secret access key. If you select any other option, your deployment won't work.

For detailed information on how to create specific types of connections, see Connectors.

Creating a model asset

To create a custom foundation model asset:

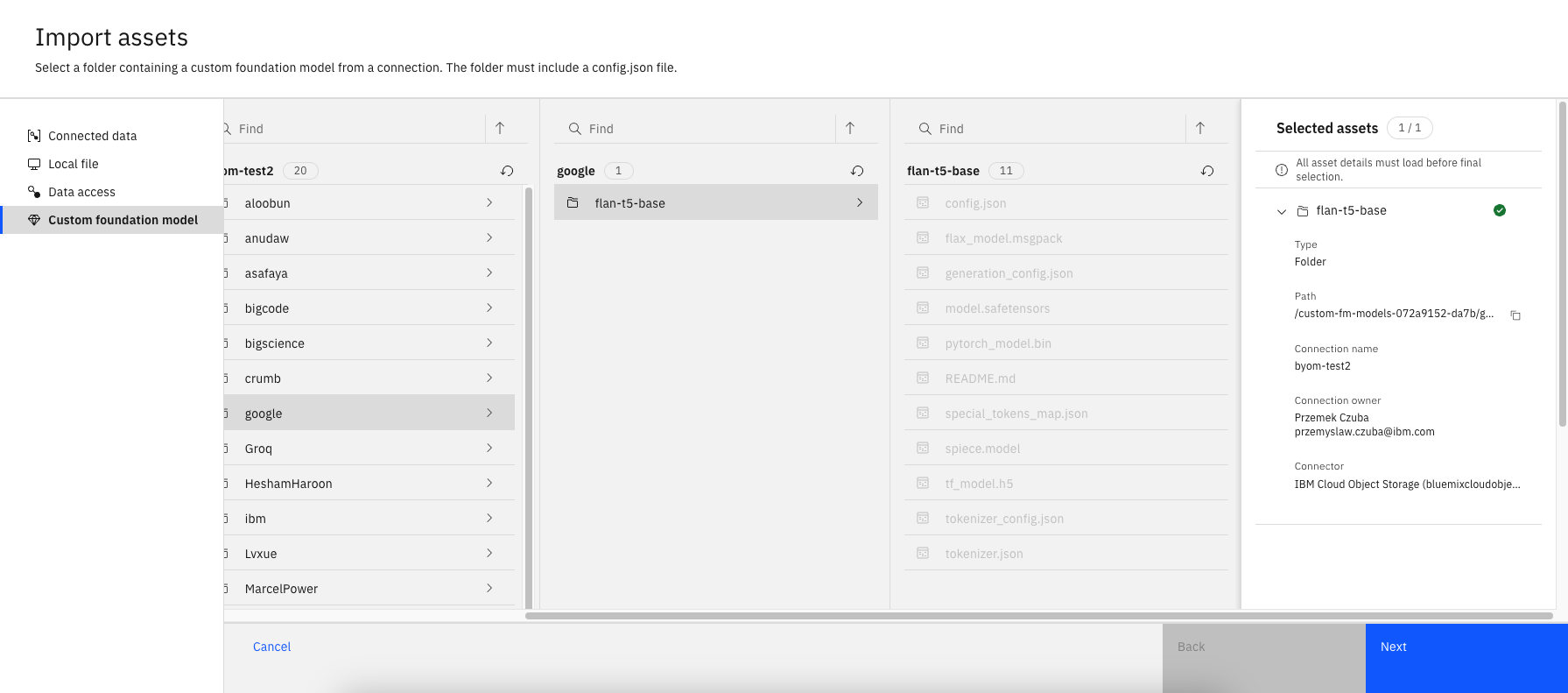

- In your deployment space or your project, go to Assets and then click Import assets.

- Select Custom foundation model.

- Select the connection to the cloud storage where the model is located.

- Select the folder that contains your model.

- Enter the required information. If you don't submit any entries for model parameters, default values are used.

- Click Import.

For information on available model parameters, see Global parameters for custom foundation models.

Creating a custom foundation model asset programmatically

To use the watsonx.ai API, you need a bearer token. For more information, see Credentials for programmatic access.

For information on available model parameters, see Global parameters for custom foundation models. If you don't submit any entries for model parameters, default values are used.

The following example adds a model asset to a deployment space:

    curl -X POST "https://<your cloud hostname>/ml/v4/models?version=2024-01-29" \
      -H "Authorization: Bearer $TOKEN" \
      -H "content-type: application/json" \
      --data '{
        "type": "custom_foundation_model_1.0",
        "framework": "custom_foundation_model",
        "version": "1.0",
        "name": "<asset name>",
        "software_spec": {
          "name": "<name of software specification>"
        },
        "space_id": "<your space ID>",
        "foundation_model": {
          "model_id": "<model ID>",
          "parameters": [
            {
              "name": "dtype",
              "default": "float16",
              "type": "string",
              "display_name": "Data Type",
              "options": ["float16", "bfloat16"]
            },
            {
              "name": "max_batch_size",
              "default": 256,
              "type": "number",
              "display_name": "Max Batch Size"
            }
          ],
          "model_location": {
            "type": "connection_asset",
            "connection": {
              "id": "<your connection ID>"
            },
            "location": {
              "bucket": "<bucket where the model is located>",
              "file_path": "<subpath to model files, if required>"
            }
          }
        }
      }'

The following example adds a model asset to a project:

    curl -X POST "https://<your cloud hostname>/ml/v4/models?version=2024-01-29" \
      -H "Authorization: Bearer $TOKEN" \
      -H "content-type: application/json" \
      --data '{
        "type": "custom_foundation_model_1.0",
        "framework": "custom_foundation_model",
        "version": "1.0",
        "name": "<asset name>",
        "software_spec": {
          "name": "<name of software specification>"
        },
        "project_id": "<your project ID>",
        "foundation_model": {
          "model_id": "<model ID>",
          "parameters": [
            {
              "name": "dtype",
              "default": "float16",
              "type": "string",
              "display_name": "Data Type",
              "options": ["float16", "bfloat16"]
            },
            {
              "name": "max_batch_size",
              "default": 256,
              "type": "number",
              "display_name": "Max Batch Size"
            }
          ],
          "model_location": {
            "type": "connection_asset",
            "connection": {
              "id": "<your connection ID>"
            },
            "location": {
              "bucket": "<bucket where the model is located>",
              "file_path": "<subpath to model files, if required>"
            }
          }
        }
      }'
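The two requests differ only in whether the body carries `space_id` or `project_id`. As a rough sketch, the same request could be assembled in Python with the standard library. The helper names `build_model_payload` and `build_request` are hypothetical and are not part of any watsonx.ai client. The sketch assumes that exactly one of the two scope IDs is sent, and that the `parameters` and `file_path` entries can be left out when they are not needed.

```python
import json
import urllib.request

API_VERSION = "2024-01-29"  # version query value taken from the curl examples


def build_model_payload(name, software_spec, model_id, connection_id, bucket,
                        file_path=None, *, space_id=None, project_id=None,
                        parameters=None):
    """Build the JSON body for POST /ml/v4/models (hypothetical helper)."""
    # Assumption: a model asset belongs to either a space or a project.
    if (space_id is None) == (project_id is None):
        raise ValueError("Provide exactly one of space_id or project_id")

    location = {"bucket": bucket}
    if file_path:
        # The subpath is only needed when the model is not at the bucket root.
        location["file_path"] = file_path

    payload = {
        "type": "custom_foundation_model_1.0",
        "framework": "custom_foundation_model",
        "version": "1.0",
        "name": name,
        "software_spec": {"name": software_spec},
        "foundation_model": {
            "model_id": model_id,
            "model_location": {
                "type": "connection_asset",
                "connection": {"id": connection_id},
                "location": location,
            },
        },
    }
    if parameters:
        # Assumption: omitting this key lets the default values apply.
        payload["foundation_model"]["parameters"] = parameters
    if space_id is not None:
        payload["space_id"] = space_id
    else:
        payload["project_id"] = project_id
    return payload


def build_request(hostname, token, payload):
    """Wrap the payload in a POST request that mirrors the curl examples."""
    url = f"https://{hostname}/ml/v4/models?version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send the request, pass the result of `build_request` to `urllib.request.urlopen`. Building the request separately from sending it makes it possible to inspect the body before anything is submitted.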

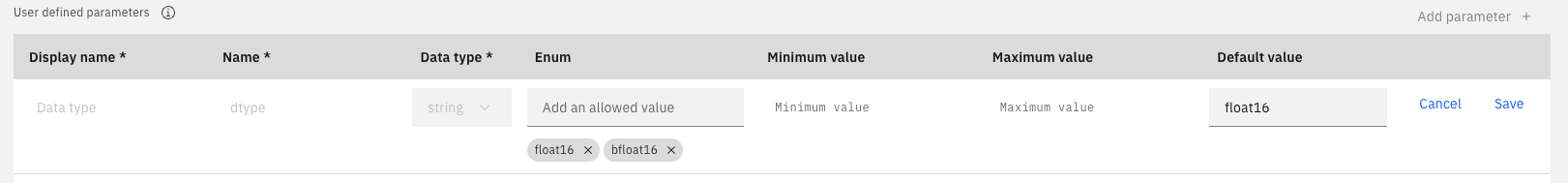

Global parameters for custom foundation models

You can use global parameters to deploy your custom foundation models. Set the value of each parameter within the range that is specified in the following table. Otherwise, your deployment might fail and inferencing will not be possible.

| Parameter | Type | Range of values | Default value | Description |
|---|---|---|---|---|
| dtype | String | float16, bfloat16 | float16 | Use this parameter to specify the data type for your model. |
| max_batch_size | Number | max_batch_size >= 1 | 256 | Use this parameter to specify the maximum batch size for your model. |
| max_concurrent_requests | Number | max_concurrent_requests >= 1 and max_concurrent_requests >= max_batch_size | 1024 | Use this parameter to specify the maximum number of concurrent requests that can be made to your model. This parameter is not available to deployments that use the watsonx-cfm-caikit-1.1 software specification. |
| max_new_tokens | Number | max_new_tokens >= 20 | 2047 | Use this parameter to specify the maximum number of tokens that your model can generate for an inference request. |
| max_sequence_length | Number | max_sequence_length >= 20 and max_sequence_length > max_new_tokens | 2048 | Use this parameter to specify the maximum sequence length for your model. |
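Because an out-of-range value can cause a deployment to fail, it can help to check values locally before you create the asset. The following is a minimal sketch of such a check, with the ranges and defaults copied from the table. The function name `resolve_parameters` is hypothetical, and the check is purely client-side, so it does not reflect how the service itself validates input. It also does not handle the case where `max_concurrent_requests` is unavailable for the watsonx-cfm-caikit-1.1 software specification.

```python
# Defaults and allowed values copied from the global parameters table.
DEFAULTS = {
    "dtype": "float16",
    "max_batch_size": 256,
    "max_concurrent_requests": 1024,
    "max_new_tokens": 2047,
    "max_sequence_length": 2048,
}
ALLOWED_DTYPES = ("float16", "bfloat16")


def resolve_parameters(overrides=None):
    """Merge overrides with the defaults and check the documented ranges.

    Returns the merged dictionary, or raises ValueError listing every
    violated constraint. Hypothetical helper, for illustration only.
    """
    overrides = overrides or {}
    unknown = sorted(set(overrides) - set(DEFAULTS))
    if unknown:
        raise ValueError(f"Unknown parameters: {', '.join(unknown)}")

    # Entries that are not supplied fall back to the default values.
    p = {**DEFAULTS, **overrides}

    errors = []
    if p["dtype"] not in ALLOWED_DTYPES:
        errors.append("dtype must be float16 or bfloat16")
    if p["max_batch_size"] < 1:
        errors.append("max_batch_size must be >= 1")
    if p["max_concurrent_requests"] < 1:
        errors.append("max_concurrent_requests must be >= 1")
    if p["max_concurrent_requests"] < p["max_batch_size"]:
        errors.append("max_concurrent_requests must be >= max_batch_size")
    if p["max_new_tokens"] < 20:
        errors.append("max_new_tokens must be >= 20")
    if p["max_sequence_length"] < 20:
        errors.append("max_sequence_length must be >= 20")
    if p["max_sequence_length"] <= p["max_new_tokens"]:
        errors.append("max_sequence_length must be > max_new_tokens")

    if errors:
        raise ValueError("; ".join(errors))
    return p
```

For example, `resolve_parameters({"max_new_tokens": 4096})` raises a `ValueError`, because the default `max_sequence_length` of 2048 is no longer greater than `max_new_tokens`. Raising both values together, for example to 4096 and 8192, passes the check.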

Next steps

Deploying a custom foundation model
