AI services provide online inferencing capabilities, which you can use for real-time inferencing on new data. For use cases that require batch scoring, you can run a batch deployment job to test your AI service deployment.

Methods for testing AI service deployments

Online inferencing is useful for applications that require real-time predictions, such as chatbots, recommendation systems, and fraud detection systems.

To test AI services for online inferencing, you can use the watsonx.ai user interface, Python client library, or REST API. For more information, see Testing AI service deployments for online scoring.

To test AI services for streaming applications, you can use the watsonx.ai Python client library or REST API. For more information, see Testing AI service deployments for streaming applications.

For use cases that require batch scoring, create and run a batch deployment job. You can provide any valid JSON input as a payload to the REST API endpoint for inferencing. For more information, see Testing batch deployments for AI services.

Testing AI service deployments for online scoring

You can run inference against your online AI service deployment by using the watsonx.ai user interface, Python client library, or REST API.

Inferencing with the user interface

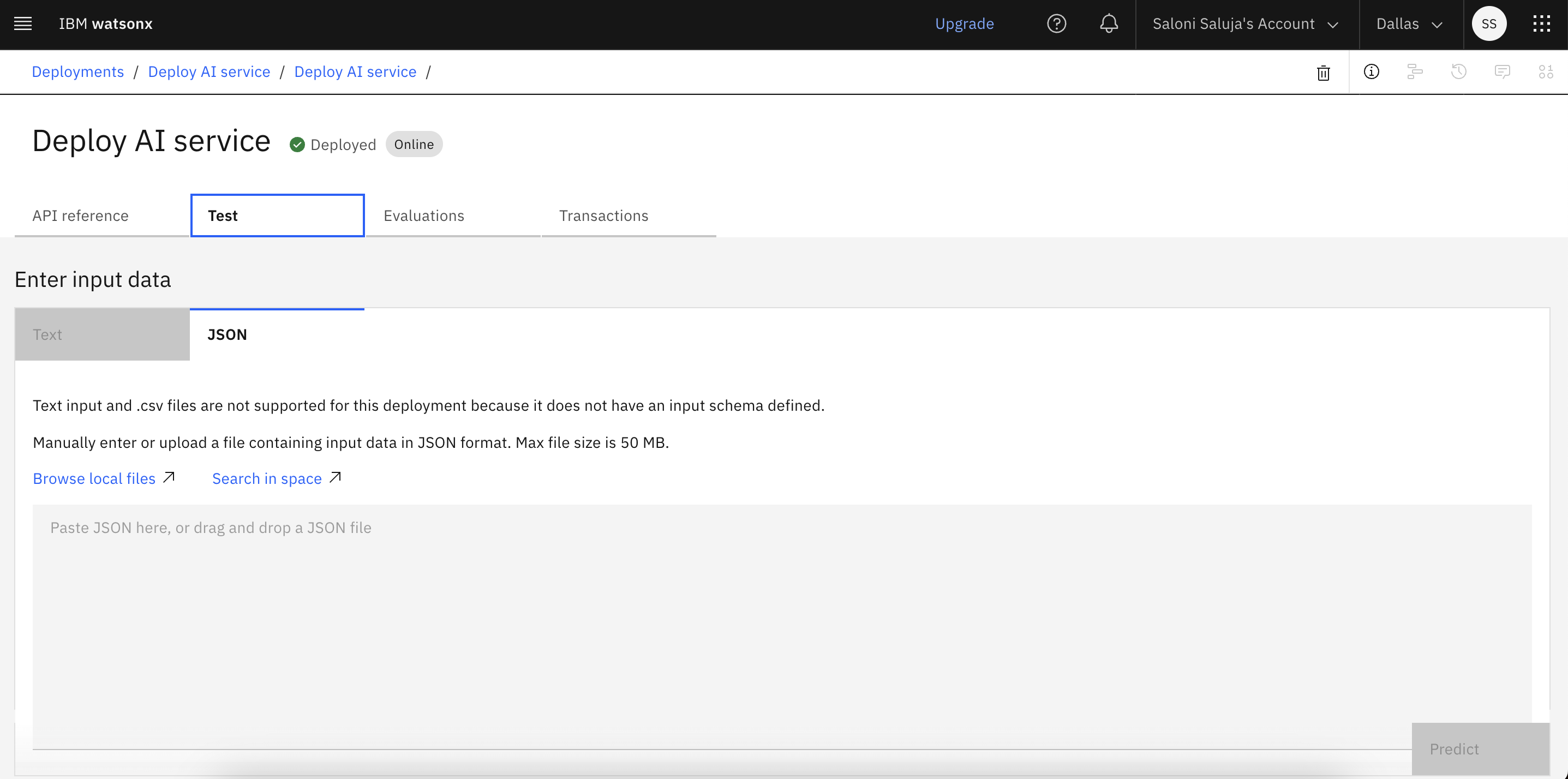

You can use the user interface for inferencing your AI services by providing any valid JSON input payload that aligns with your model schema. Follow these steps for inferencing your AI service deployment:

- In your deployment space or your project, open the Deployments tab and click the deployment name.
- Click the Test tab to input prompt text and get a response from the deployed asset.
- Enter test data in JSON format to generate output in JSON format.

Restriction: You cannot enter input data in text format to test your AI service deployment. You must use JSON format for testing.
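Because the Test tab accepts only JSON, it can help to check a payload locally before pasting it in. The following is a minimal sketch that uses Python's standard json module. The helper name check_test_payload and the sample strings are hypothetical and are not part of watsonx.ai.

```python
import json


def check_test_payload(text: str) -> tuple:
    """Return (True, formatted JSON) if text parses as JSON, else (False, error message).

    Hypothetical helper for illustration; it is not part of watsonx.ai.
    """
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError as err:
        return False, f"Invalid JSON: {err.msg} (line {err.lineno}, column {err.colno})"
    # Re-serialize so the payload is consistently formatted for pasting.
    return True, json.dumps(parsed, indent=2)


# A JSON object is accepted.
ok, result = check_test_payload('{"query": "testing ai_service generate function"}')
print(ok)

# Plain text is rejected, which mirrors the restriction on the Test tab.
ok, result = check_test_payload("plain text prompt")
print(ok, result)
```

This check only confirms that the text is valid JSON. Whether the fields match what your AI service expects still depends on the service itself.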

Inferencing with Python client library

Use the run_ai_service function to run an AI service by providing a scoring payload:

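    # Assumes that client is an authenticated watsonx.ai Python client
    # and that deployment_id identifies your online deployment.
    ai_service_payload = {"query": "testing ai_service generate function"}

    # Send the payload to the deployed AI service
    client.deployments.run_ai_service(deployment_id, ai_service_payload)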

For more information, see watsonx.ai Python client library documentation for running an AI service asset.

Inferencing with REST API

Use the /ml/v4/deployments/{id_or_name}/ai_service REST endpoint for inferencing AI services for online scoring (AI services that include the generate() function):

    import json
    from json.decoder import JSONDecodeError

    import requests

    # HOST, VERSION, dep_id, and headers (including the Authorization header)
    # are assumed to be defined earlier.

    # Any valid JSON is accepted in a POST request to /ml/v4/deployments/{id_or_name}/ai_service
    payload = {
        "mode": "json-custom-header",
        "t1": 12345,
        "t2": ["hello", "wml"],
        "t3": {
            "abc": "def",
            "ghi": "jkl",
        },
    }

    url = f"{HOST}/ml/v4/deployments/{dep_id}/ai_service?version={VERSION}"

    response = requests.post(
        url,
        headers=headers,
        verify=False,  # disables TLS certificate verification; use only in test environments
        json=payload,
    )

    print(f"POST {url}", response.status_code)

    # Print the body as JSON when possible; otherwise fall back to the raw text
    try:
        print(json.dumps(response.json(), indent=2))
    except JSONDecodeError:
        print(f"response text >>\n{response.text}")

    print(f"\nThe user customized content-type >> '{response.headers['Content-Type']}'")
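The online and streaming endpoints differ only in the final path segment, so the URL and headers can be assembled in one place. The sketch below shows one way to do that without sending a request. The function name build_ai_service_request, its parameters, and the host, version, and token values are placeholders chosen for illustration. Only the endpoint paths and the two header names come from this page.

```python
from urllib.parse import quote, urlencode


def build_ai_service_request(host: str, deployment_id: str, version: str, token: str,
                             stream: bool = False):
    """Return (url, headers) for an AI service inference call.

    Hypothetical helper: the endpoint paths come from this page; the
    function name and arguments are assumptions for illustration.
    """
    endpoint = "ai_service_stream" if stream else "ai_service"
    # Percent-encode the ID or name so it is safe to place in the path.
    path = f"/ml/v4/deployments/{quote(deployment_id, safe='')}/{endpoint}"
    url = f"{host.rstrip('/')}{path}?{urlencode({'version': version})}"
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {token}",
    }
    return url, headers


# Placeholder values; substitute your own host, deployment, version, and token.
url, headers = build_ai_service_request(
    "https://example.invalid", "my-deployment-id", "2024-01-01", "placeholder-token"
)
print(url)
```

The returned pair can be passed to requests.post(url, headers=headers, json=payload) in the same way as the example above.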

Testing AI service deployments for streaming applications

You can inference your AI service online deployment for streaming applications by using the watsonx.ai REST API or watsonx.ai Python client library.

Inferencing with Python client library

Use the run_ai_service_stream function to run an AI service for streaming applications by providing a scoring payload.

    ai_service_payload = {"sse": ["Hello", "WatsonX", "!"]}

    for data in client.deployments.run_ai_service_stream(deployment_id, ai_service_payload):
        print(data)

For more information, see watsonx.ai Python client library documentation for running an AI service asset for streaming.
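The loop above handles each chunk as it arrives. If the application also needs the complete response, the chunks can be accumulated while they stream. The sketch below uses a stand-in generator, fake_stream, in place of client.deployments.run_ai_service_stream so that it runs without a deployment. It also assumes that each chunk is a string, which depends on what your generate_stream() function yields.

```python
from typing import Callable, Iterable, Iterator


def fake_stream(payload: dict) -> Iterator[str]:
    """Stand-in for run_ai_service_stream: yields each item in payload["sse"]."""
    for chunk in payload["sse"]:
        yield chunk


def stream_and_collect(chunks: Iterable[str],
                       on_chunk: Callable[[str], None] = print) -> str:
    """Pass each chunk to on_chunk as it arrives and return the joined text."""
    received = []
    for chunk in chunks:
        on_chunk(chunk)          # react immediately, for example by updating a UI
        received.append(chunk)   # keep the chunk for the final result
    return " ".join(received)


full_text = stream_and_collect(fake_stream({"sse": ["Hello", "WatsonX", "!"]}))
```

With a real deployment, the first argument would be the iterator returned by client.deployments.run_ai_service_stream(deployment_id, ai_service_payload).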

Inferencing with REST API

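Use the /ml/v4/deployments/{id_or_name}/ai_service_stream REST endpoint for inferencing AI services for streaming applications (AI services that include the generate_stream() function). The following example is written for a notebook; run each snippet in its own cell.

Get a bearer token from the Python client:

    TOKEN = f'{client._get_icptoken()}'

Write the payload to a file (the %%writefile magic must be the first line of its cell):

    %%writefile payload.json
    {
      "sse": ["Hello", "WatsonX", "!"]
    }

Send the request with curl:

    !curl -N \
        -H "Content-Type: application/json" -H "Authorization: Bearer {TOKEN}" \
        {HOST}'/ml/v4/deployments/'{dep_id}'/ai_service_stream?version='{VERSION} \
        --data @payload.json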

Output

    id: 1
    event: message
    data: Hello

    id: 2
    event: message
    data: WatsonX

    id: 3
    event: message
    data: !

    id: 4
    event: eos
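The output is a server-sent events stream, so a client that reads it directly needs to group the id, event, and data fields into events. The following is a minimal sketch of such a parser. It assumes the standard event stream layout, with one field per line and a blank line between events, and the sample text mirrors the events shown above. The function name parse_sse is hypothetical.

```python
from typing import Dict, Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Group "field: value" lines into one dict per event; a blank line ends an event."""
    event: Dict[str, str] = {}
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:
            # Blank line: the current event is complete.
            if event:
                yield event
                event = {}
            continue
        if line.startswith(":"):
            continue  # comment line, ignored
        field, _, value = line.partition(":")
        # A single leading space after the colon is not part of the value.
        value = value[1:] if value.startswith(" ") else value
        if field == "data" and "data" in event:
            event["data"] += "\n" + value  # multiple data lines are joined
        else:
            event[field] = value
    if event:
        yield event  # final event without a trailing blank line


sample = """id: 1
event: message
data: Hello

id: 2
event: message
data: WatsonX

id: 3
event: message
data: !

id: 4
event: eos
"""

events = list(parse_sse(sample.splitlines()))
words = [e["data"] for e in events if e.get("event") == "message"]
print(words)
```

In this sample, the eos event carries no data field, so a consumer can treat it as the signal to stop reading.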

Testing batch deployments for AI services

If you created a batch deployment for your AI service (an AI service asset that contains the generate_batch() function), you can use the watsonx.ai user interface, Python client library, or REST API to create and run a batch deployment job.

Prerequisites

You must set up your task credentials to run a batch deployment job for your AI service. For more information, see Managing task credentials.

Testing with user interface

To learn more about creating and running a batch deployment job for your AI service from the deployment space user interface, see Creating jobs in deployment spaces.

Testing with Python client library

Follow these steps to test your batch deployment by creating and running a job:

- Create a job to test your AI service batch deployment:

      batch_reference_payload = {
          "input_data_references": [
              {
                  "type": "connection_asset",
                  "connection": {"id": "2d07a6b4-8fa9-43ab-91c8-befcd9dab8d2"},
                  "location": {
                      "bucket": "wml-v4-fvt-batch-pytorch-connection-input",
                      "file_name": "testing-123",
                  },
              }
          ],
          "output_data_reference": {
              "type": "data_asset",
              "location": {"name": "nb-pytorch_output.zip"},
          },
      }

      job_details = client.deployments.create_job(deployment_id, batch_reference_payload)
      job_uid = client.deployments.get_job_uid(job_details)
      print("The job id:", job_uid)

- Check job status:

      # Get job status
      client.deployments.get_job_status(job_uid)
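A batch job runs asynchronously, so the status usually needs to be checked more than once. The sketch below polls until the job finishes or a timeout expires. It assumes that the status call returns a dictionary with a "state" key and that "completed", "failed", and "canceled" are the terminal states; verify both against what your environment returns. The function wait_for_job is hypothetical, and a stand-in status function replaces client.deployments.get_job_status so that the example runs without a deployment.

```python
import time
from typing import Callable

# Assumed terminal states; check the values your environment actually reports.
TERMINAL_STATES = {"completed", "failed", "canceled"}


def wait_for_job(get_status: Callable[[], dict], poll_seconds: float = 5.0,
                 timeout_seconds: float = 600.0) -> str:
    """Poll get_status() until the job reaches a terminal state or the timeout expires.

    Assumes get_status() returns a dict with a "state" key.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        state = get_status().get("state", "unknown")
        if state in TERMINAL_STATES:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Job still in state '{state}' after {timeout_seconds} seconds")
        time.sleep(poll_seconds)


# Stand-in for client.deployments.get_job_status(job_uid) so the sketch runs offline.
_fake_states = iter(["queued", "running", "completed"])
final_state = wait_for_job(lambda: {"state": next(_fake_states)}, poll_seconds=0.01)
print(final_state)
```

With a real client, the first argument would be lambda: client.deployments.get_job_status(job_uid).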
