You can create an online or a batch deployment for your AI service asset from your deployment space, depending on your use case. You can deploy an AI service by using the watsonx.ai user interface, the REST API, or the Python client library.

Types of deployments for AI services

The deployment type that you need depends on the functions that your AI service defines:

- You must create an online deployment for your AI service asset for online scoring (the AI service contains the `generate()` function) or for streaming applications (the AI service contains the `generate_stream()` function).
- You must create a batch deployment for your AI service asset for batch scoring applications (the AI service contains the `generate_batch()` function).
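The rule above maps the functions that an AI service defines to the deployment types it needs. The following minimal sketch expresses that mapping in code; `required_deployment_types` is a hypothetical helper name and is not part of any watsonx.ai library.

```python
def required_deployment_types(functions):
    """Return the deployment types needed for the given AI service function names.

    Hypothetical helper: `functions` is an iterable of the function names that
    the AI service defines, such as "generate" or "generate_batch".
    """
    names = set(functions)
    types = set()
    # generate() (online scoring) and generate_stream() (streaming) need an online deployment
    if names & {"generate", "generate_stream"}:
        types.add("online")
    # generate_batch() (batch scoring) needs a batch deployment
    if "generate_batch" in names:
        types.add("batch")
    return sorted(types)


print(required_deployment_types(["generate", "generate_stream"]))  # ['online']
print(required_deployment_types(["generate_batch"]))  # ['batch']
```

Under this rule, an AI service that defines both `generate()` and `generate_batch()` would need one deployment of each type to serve both kinds of requests.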

Prerequisites

- You must set up your task credentials for deploying your AI services. For more information, see Adding task credentials.
- You must promote your AI service asset to your deployment space.

Deploying AI services with the user interface

You can deploy your AI service asset from the user interface of your deployment space.

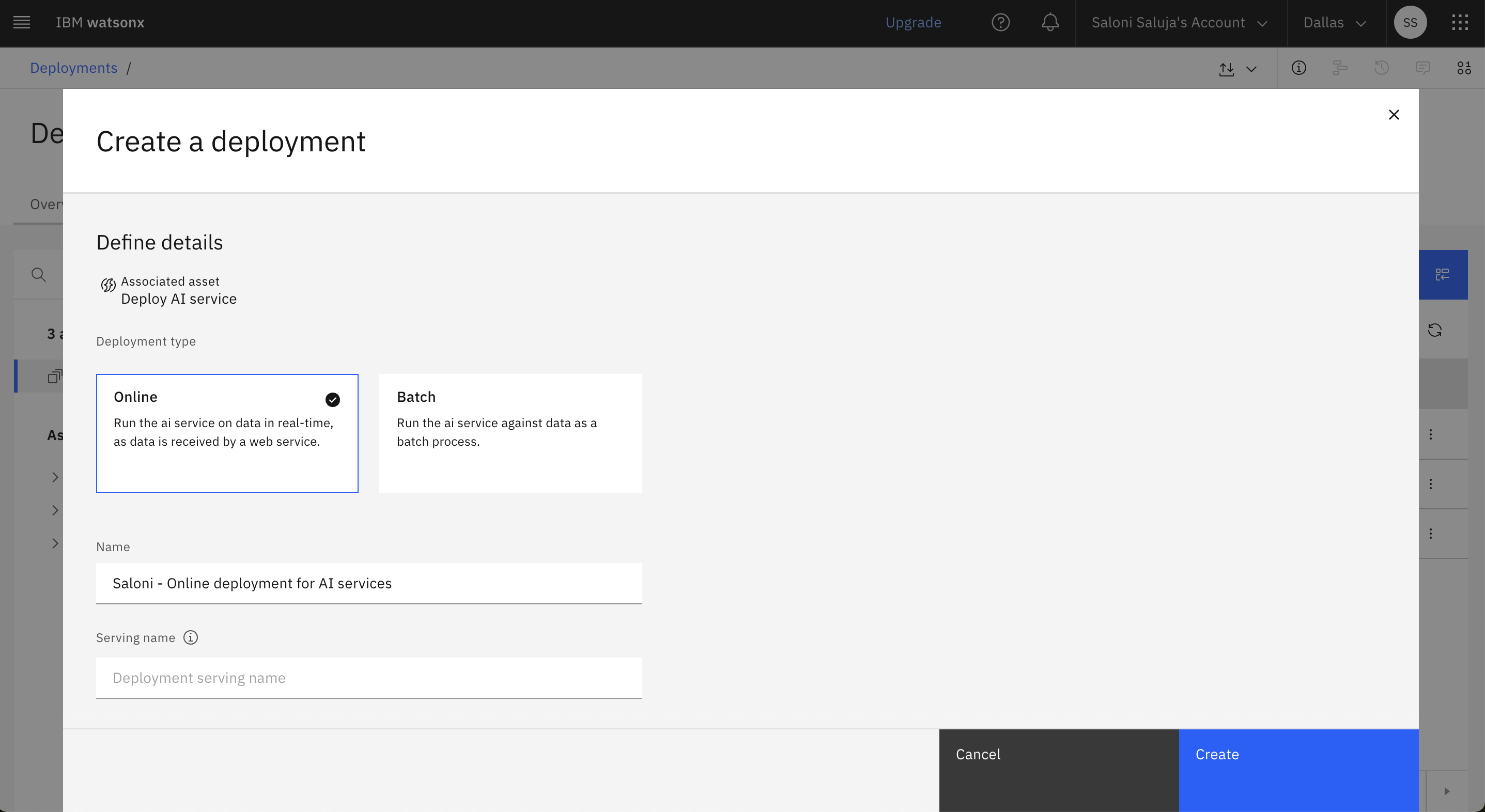

Creating an online deployment for AI services

Follow these steps to create an online deployment for your AI service asset from the deployment space user interface:

-

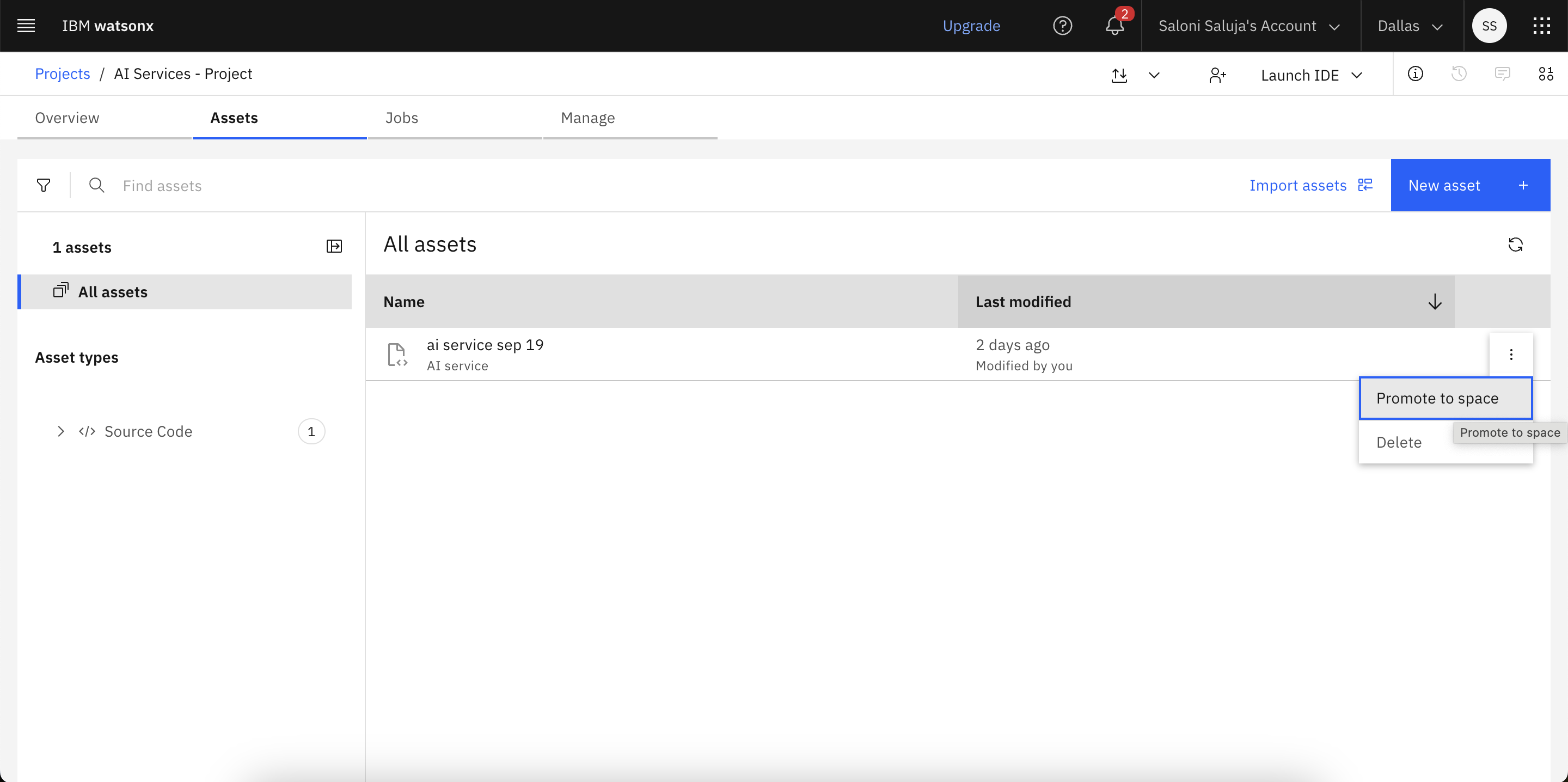

From your deployment space, go th the Assets tab.

-

For your AI service asset in the assets list, click the Menu icon, and select Deploy.

-

Select Online as the deployment type.

-

Enter a name for your deployment and optionally enter a serving name, description, and tags.

-

Click Create.

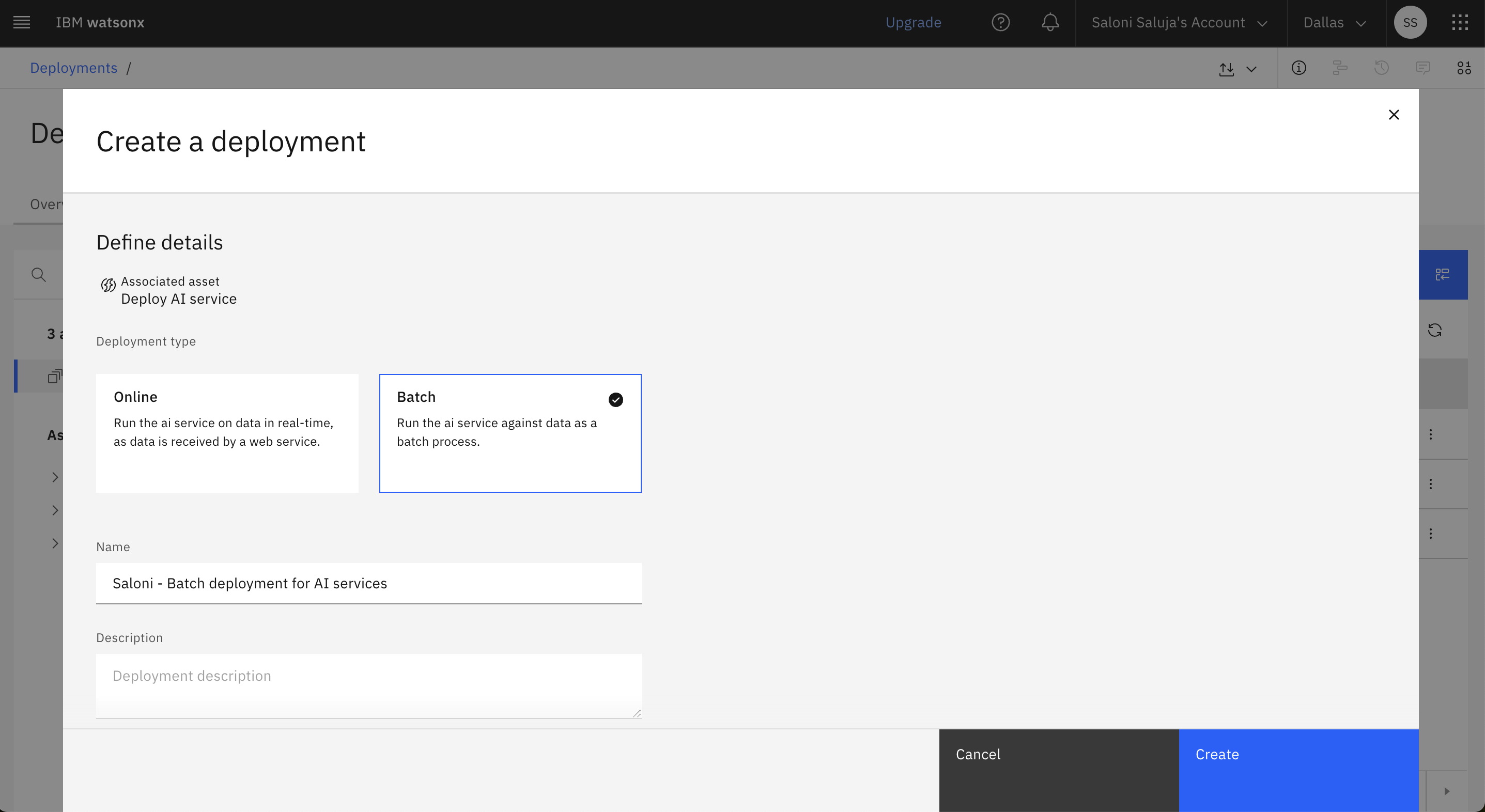

Creating a batch deployment for AI services

Follow these steps to create a batch deployment for your AI service asset from the deployment space user interface:

- From your deployment space, go to the Assets tab.
- For your AI service asset in the assets list, click the Menu icon, and then select Deploy.
- Select Batch as the deployment type.
- Enter a name for your deployment and, optionally, a serving name, description, and tags.
- Select a hardware specification:
  - Extra small: 1 CPU and 4 GB RAM
  - Small: 2 CPUs and 8 GB RAM
  - Medium: 4 CPUs and 16 GB RAM
  - Large: 8 CPUs and 32 GB RAM
  - Extra large: 16 CPUs and 64 GB RAM
- Click Create.
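If you know roughly how much CPU and memory your batch jobs need, you can pick the smallest hardware specification from the list above that meets those needs. The following sketch encodes that list as a dictionary; the dictionary and the `smallest_spec` helper are illustrative only and are not a watsonx.ai API.

```python
# Hardware specifications offered for batch deployments in the UI (from the list above).
# Illustrative data structure only; this is not a watsonx.ai API.
HARDWARE_SPECS = {
    "Extra small": {"cpu": 1, "ram_gb": 4},
    "Small": {"cpu": 2, "ram_gb": 8},
    "Medium": {"cpu": 4, "ram_gb": 16},
    "Large": {"cpu": 8, "ram_gb": 32},
    "Extra large": {"cpu": 16, "ram_gb": 64},
}


def smallest_spec(min_cpu, min_ram_gb):
    """Return the name of the smallest specification that meets both minimums, or None."""
    # Dictionaries preserve insertion order, so specs are checked from smallest to largest.
    for name, spec in HARDWARE_SPECS.items():
        if spec["cpu"] >= min_cpu and spec["ram_gb"] >= min_ram_gb:
            return name
    return None


print(smallest_spec(2, 12))  # Medium
```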

Deploying AI services with the Python client library

You can create an online or a batch deployment for your AI service asset by using the Python client library.

Creating an online deployment

The following example shows how to create an online deployment for your AI service by using the watsonx.ai Python client library:

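```python
# Assumes that `client` is an authenticated ibm_watsonx_ai APIClient with your
# deployment space set as the default space, and that `ai_service_id` is the ID
# of the AI service asset in that space.
deployment_details = client.deployments.create(
    artifact_id=ai_service_id,
    meta_props={
        client.deployments.ConfigurationMetaNames.NAME: "ai-service - online test",
        # An empty ONLINE entry creates an online deployment
        client.deployments.ConfigurationMetaNames.ONLINE: {},
        # Look up the ID of the "XS" hardware specification by name
        client.deployments.ConfigurationMetaNames.HARDWARE_SPEC: {
            "id": client.hardware_specifications.get_id_by_name("XS")
        },
    },
)

deployment_id = client.deployments.get_uid(deployment_details)
print("The deployment id:", deployment_id)
```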

Creating a batch deployment

The following example shows how to create a batch deployment for your AI service by using the watsonx.ai Python client library:

```python
# Assumes the same `client` and `ai_service_id` as in the online example.
deployment_details = client.deployments.create(
    artifact_id=ai_service_id,
    meta_props={
        client.deployments.ConfigurationMetaNames.NAME: "ai-service - batch",
        # An empty BATCH entry creates a batch deployment
        client.deployments.ConfigurationMetaNames.BATCH: {},
        # Look up the ID of the "XS" hardware specification by name
        client.deployments.ConfigurationMetaNames.HARDWARE_SPEC: {
            "id": client.hardware_specifications.get_id_by_name("XS")
        },
    },
)

deployment_id = client.deployments.get_uid(deployment_details)
print("The batch deployment id:", deployment_id)
```
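The online and batch examples differ only in the ONLINE or BATCH entry of `meta_props`, so you might wrap both in one function. The following is a minimal sketch; `deploy_ai_service` is a hypothetical helper name, and it assumes an authenticated `client` with the default space set, as in the examples above.

```python
def deploy_ai_service(client, ai_service_id, name, deployment_type="online", hw_spec_name="XS"):
    """Create an online or batch deployment for an AI service asset and return its ID.

    Hypothetical convenience wrapper around client.deployments.create(); assumes
    `client` is an authenticated ibm_watsonx_ai APIClient with the default space set.
    """
    meta_names = client.deployments.ConfigurationMetaNames
    # Choose the meta name that selects the deployment type
    if deployment_type == "online":
        type_key = meta_names.ONLINE
    elif deployment_type == "batch":
        type_key = meta_names.BATCH
    else:
        raise ValueError(f"Unsupported deployment type: {deployment_type!r}")

    meta_props = {
        meta_names.NAME: name,
        type_key: {},  # an empty ONLINE or BATCH entry, as in the examples above
        meta_names.HARDWARE_SPEC: {
            "id": client.hardware_specifications.get_id_by_name(hw_spec_name)
        },
    }
    details = client.deployments.create(artifact_id=ai_service_id, meta_props=meta_props)
    return client.deployments.get_uid(details)
```

For example, `deploy_ai_service(client, ai_service_id, "ai-service - batch", "batch")` would send the same request as the batch example above.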

Deploying AI services with the REST API

You can use the `/ml/v4/deployments` endpoint of the watsonx.ai REST API to create an online or a batch deployment for your AI service asset.

Creating an online deployment

The following example shows how to create an online deployment for your AI service by using the REST API:

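```python
import requests

# POST /ml/v4/deployments
# Assumes that HOST (the watsonx.ai endpoint URL), VERSION (the API version date),
# headers (including the bearer token), space_id, and asset_id are already defined.
response = requests.post(
    f'{HOST}/ml/v4/deployments?version={VERSION}',
    headers=headers,
    verify=False,  # disables TLS certificate verification; avoid outside test environments
    json={
        "space_id": space_id,
        "name": "genai flow online",
        # Custom key-value pairs for the deployment, for example the model ID
        "custom": {
            "key1": "value1",
            "key2": "value2",
            "model": "meta-llama/llama-3-8b-instruct",
        },
        "asset": {"id": asset_id},
        # An empty "online" object creates an online deployment
        "online": {},
    },
)
```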
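The online example does not check the result of the request. The following minimal sketch shows a hypothetical helper that does; it treats any 2xx status code as success and reads the deployment ID from `metadata.id`, the same field that the batch example in this topic reads. You would call it as `deployment_id_from_response(response.status_code, response.json())`.

```python
def deployment_id_from_response(status_code, body):
    """Return the deployment ID from a POST /ml/v4/deployments response.

    Hypothetical helper: `status_code` and `body` correspond to
    response.status_code and response.json() from the requests call.
    """
    if not 200 <= status_code < 300:
        # Surface the error body so that a failed request is not silently ignored
        raise RuntimeError(f"Deployment request failed with HTTP {status_code}: {body}")
    # The deployment ID is returned under metadata.id
    return body["metadata"]["id"]


# Sample response body for illustration only
print(deployment_id_from_response(201, {"metadata": {"id": "example-deployment-id"}}))  # example-deployment-id
```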

Creating a batch deployment

The following example shows how to create a batch deployment for your AI service by using the REST API:

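```python
import json

import requests

# Assumes that HOST, VERSION, headers, space_id, and asset_id are defined as in the online example.
response = requests.post(
    f'{HOST}/ml/v4/deployments?version={VERSION}',
    headers=headers,
    verify=False,  # disables TLS certificate verification; avoid outside test environments
    json={
        # Hardware specification for the batch deployment
        "hardware_spec": {
            "id": "........",
            "num_nodes": 1,
        },
        "space_id": space_id,
        "name": "ai service batch dep",
        # Custom key-value pairs for the deployment, for example the model ID
        "custom": {
            "key1": "value1",
            "key2": "value2",
            "model": "meta-llama/llama-3-8b-instruct",
        },
        "asset": {"id": asset_id},
        # An empty "batch" object creates a batch deployment
        "batch": {},
    },
)

print(f'POST {HOST}/ml/v4/deployments?version={VERSION}', response.status_code)
print(json.dumps(response.json(), indent=2))

# The ID of the new deployment is returned under metadata.id
dep_id = response.json()["metadata"]["id"]
print(f"{dep_id=}")
```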
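The online and batch request bodies in this topic differ mainly in the `online` or `batch` key, and the batch example also sets `hardware_spec`. The following minimal sketch shows a hypothetical helper, `build_deployment_payload`, that builds either body; the IDs in the usage lines are placeholders. It does not send the request; you would pass the result as `json=` to `requests.post` as in the examples above.

```python
import json


def build_deployment_payload(space_id, asset_id, name, deployment_type,
                             hardware_spec_id=None, num_nodes=1, custom=None):
    """Build a request body for POST /ml/v4/deployments.

    Hypothetical helper modeled on the online and batch examples in this topic.
    """
    if deployment_type not in ("online", "batch"):
        raise ValueError(f"Unsupported deployment type: {deployment_type!r}")
    payload = {
        "space_id": space_id,
        "name": name,
        "asset": {"id": asset_id},
        # An empty "online" or "batch" object selects the deployment type
        deployment_type: {},
    }
    if custom:
        # Optional custom key-value pairs, such as the model ID
        payload["custom"] = dict(custom)
    if hardware_spec_id is not None:
        payload["hardware_spec"] = {"id": hardware_spec_id, "num_nodes": num_nodes}
    return payload


body = build_deployment_payload(
    "my-space-id", "my-asset-id", "ai service batch dep", "batch",
    hardware_spec_id="my-hardware-spec-id",
    custom={"model": "meta-llama/llama-3-8b-instruct"},
)
print(json.dumps(body, indent=2))
```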
