We've been browsing the model to understand how scoring works. But to evaluate how accurately it works, we need to score some records and compare the responses predicted by the model to the actual results. We're going to score the same records that were used to estimate the model, allowing us to compare the observed and predicted responses.

- To see the scores or predictions, attach the Table node to the model nugget

and then right-click the Table node and select Run. A table will be generated

and added to the Outputs panel. Double-click it to open it.

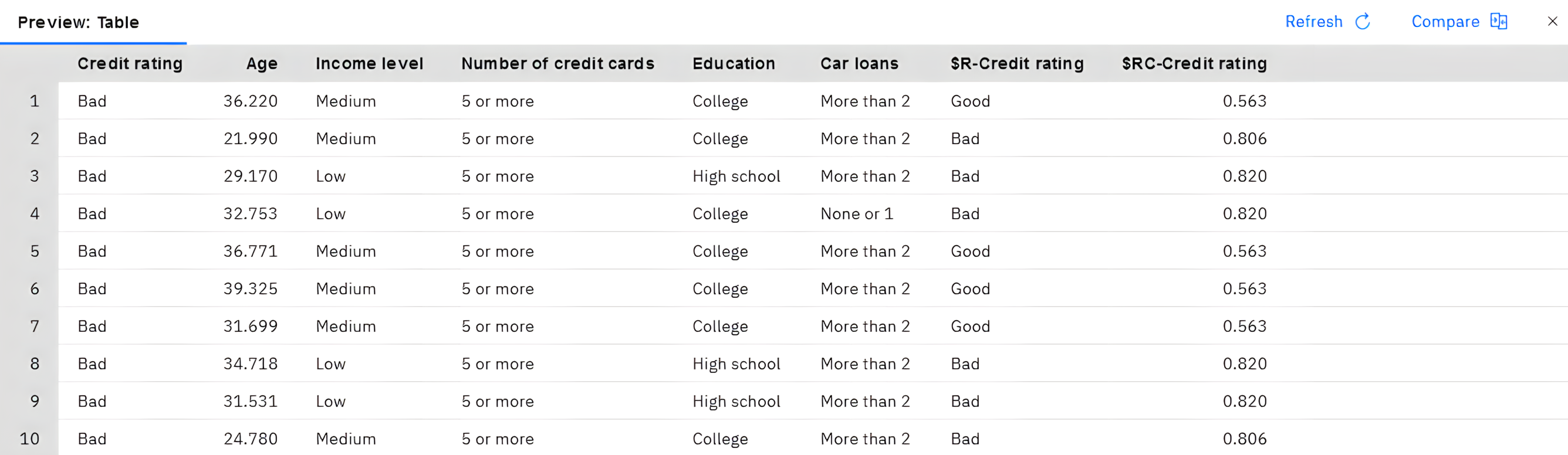

The table displays the predicted scores in a field named

$R-Credit rating, which was created by the model. We can compare these values to the originalCredit ratingfield that contains the actual responses.By convention, the names of the fields generated during scoring are based on the target field, but with a standard prefix. Prefixes

$Gand$GEare generated by the Generalized Linear Model,$Ris the prefix used for the prediction generated by the CHAID model in this case,$RCis for confidence values,$Xis typically generated by using an ensemble, and$XR,$XS, and$XFare used as prefixes in cases where the target field is a Continuous, Categorical, Set, or Flag field, respectively. Different model types use different sets of prefixes. A confidence value is the model's own estimation, on a scale from 0.0 to 1.0, of how accurate each predicted value is.Figure 2. Table showing generated scores and confidence values

As expected, the predicted value matches the actual responses for many records but not all. The reason for this is that each CHAID terminal node has a mix of responses. The prediction matches the most common one, but will be wrong for all the others in that node. (Recall the 18% minority of low-income customers who did not default.)

To avoid this, we could continue splitting the tree into smaller and smaller branches, until every node was 100% pure—all Good or Bad with no mixed responses. But such a model would be extremely complicated and would probably not generalize well to other datasets.

To find out exactly how many predictions are correct, we could read through the table and tally the number of records where the value of the predicted field

$R-Credit ratingmatches the value ofCredit rating. Fortunately, there's a much easier way; we can use an Analysis node, which does this automatically. - Connect the model nugget to the Analysis node.

- Right-click the Analysis node and select Run. An Analysis entry will be added to the Outputs panel. Double-click it to open it.

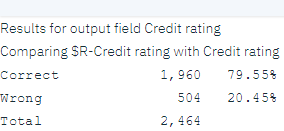

The analysis shows that for 1960 out of 2464 records—over 79%—the value predicted by the model matched the actual response.

This result is limited by the fact that the records being scored are the same ones used to estimate the model. In a real situation, you could use a Partition node to split the data into separate samples for training and evaluation. By using one sample partition to generate the model and another sample to test it, you can get a much better indication of how well it will generalize to other datasets.

The Analysis node allows us to test the model against records for which we already know the actual result. The next stage illustrates how we can use the model to score records for which we don't know the outcome. For example, this might include people who are not currently customers of the bank, but who are prospective targets for a promotional mailing.